CONTENT OF THE MARKET PATTERNS DASHBOARD PAGES

Price & Volume Highlights

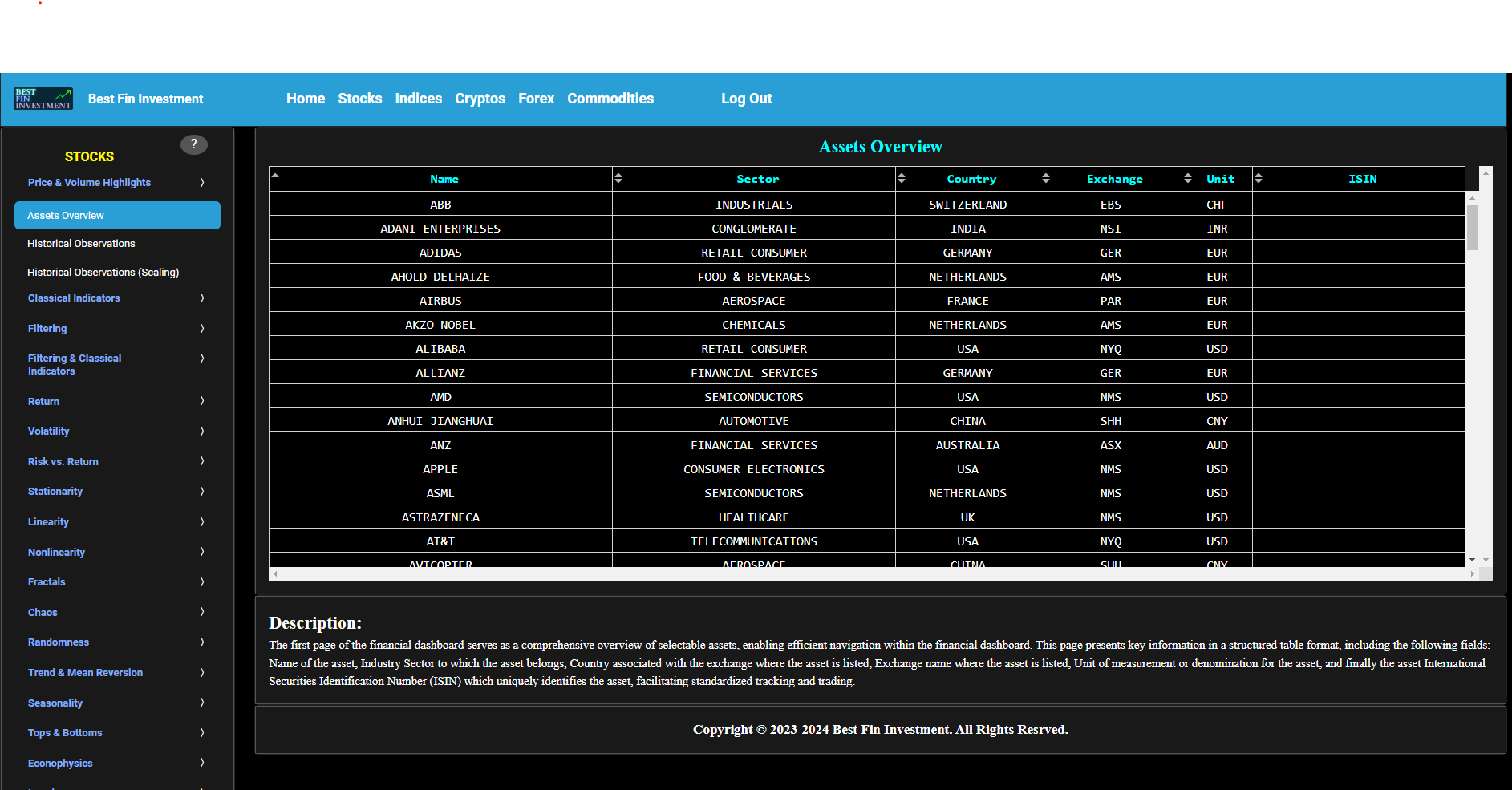

Assets Overview

This first page of the market patterns dashboard serves as a comprehensive overview of selectable assets, enabling efficient navigation within the dashboard's subsequent pages. The current page presents key information in a structured table format, including the following fields: name of the asset, asset ticker, country associated with the exchange where the asset is listed, industry sector to which the asset belongs, exchange name where the asset is listed, and unit of measurement or denomination for the asset.

Price & Volume Highlights

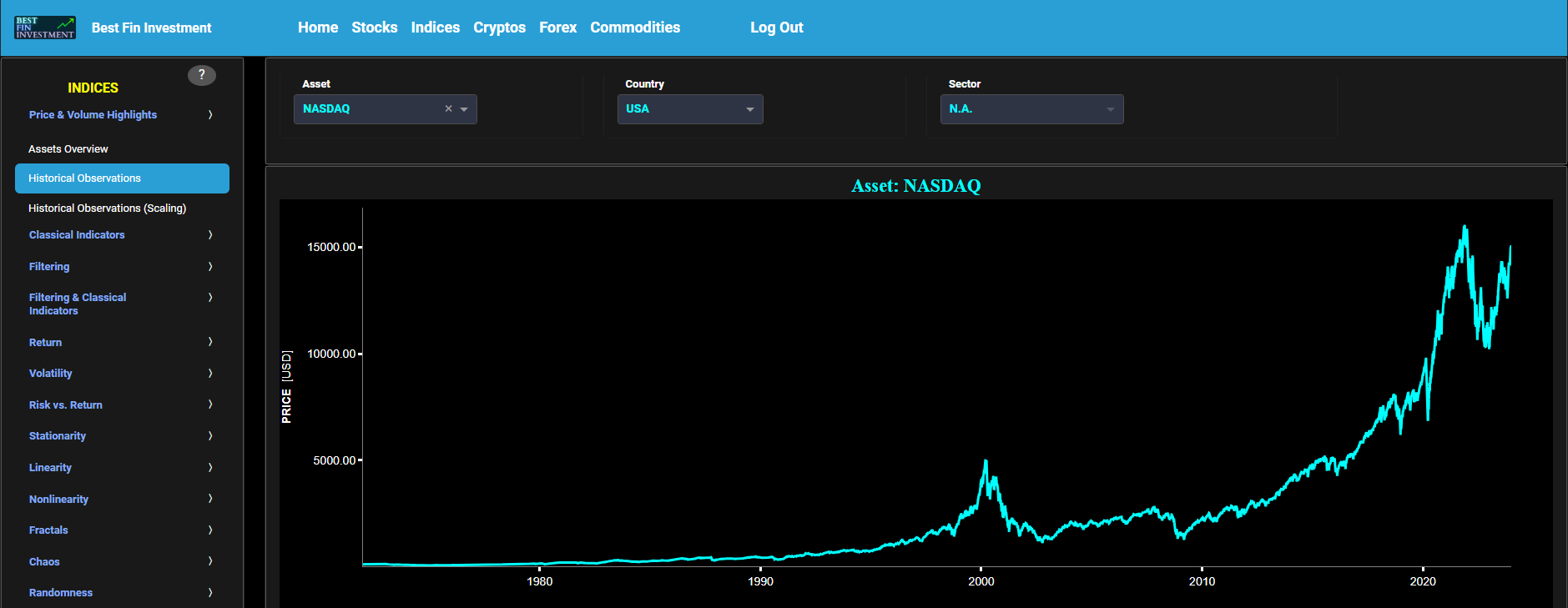

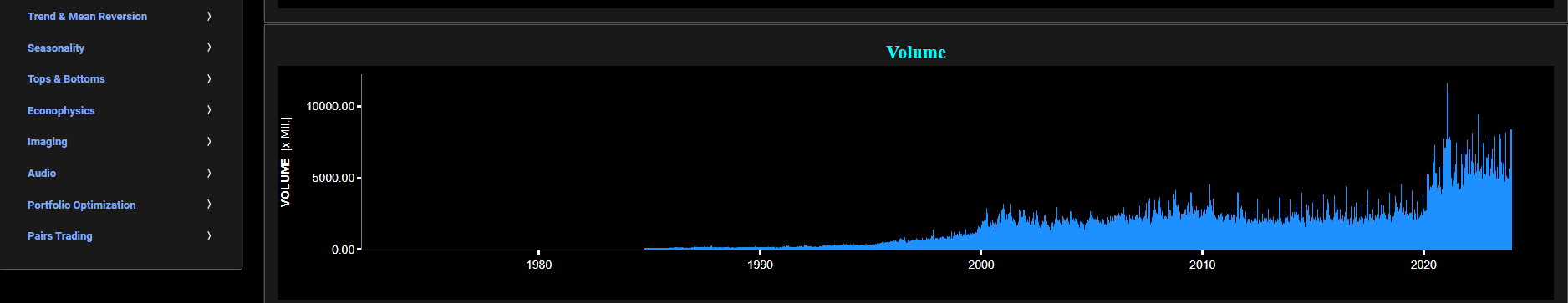

Historical Observations

This page visualizes the full historical data range of a selected asset, based upon daily close prices. In the menu bar (located just above the graphs), you can also see the associated asset country and asset sector (wherever applicable). A price and volume graph will appear after the selection of an asset, or the selection of a country followed by the selection of an available asset within that country.

Price & Volume Highlights

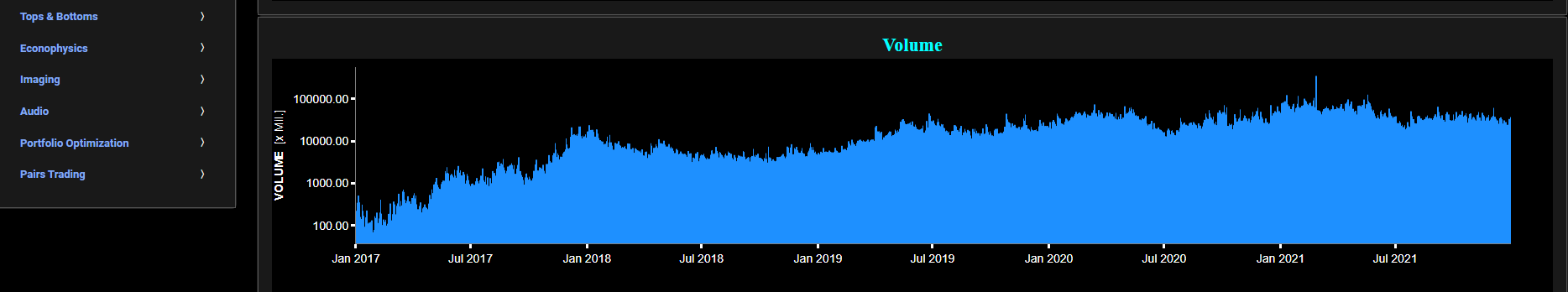

Historical Observations (Scaling)

This page visualizes historical asset prices and volume using either daily, weekly, or monthly close prices. In the menu bar (located just above the graphs), you can also see (and select) the associated asset country. Further you can select specific time periods to visualize and also toggle between a linear or logarithmic price and volume scaling on the y-axis.

Classical Indicators

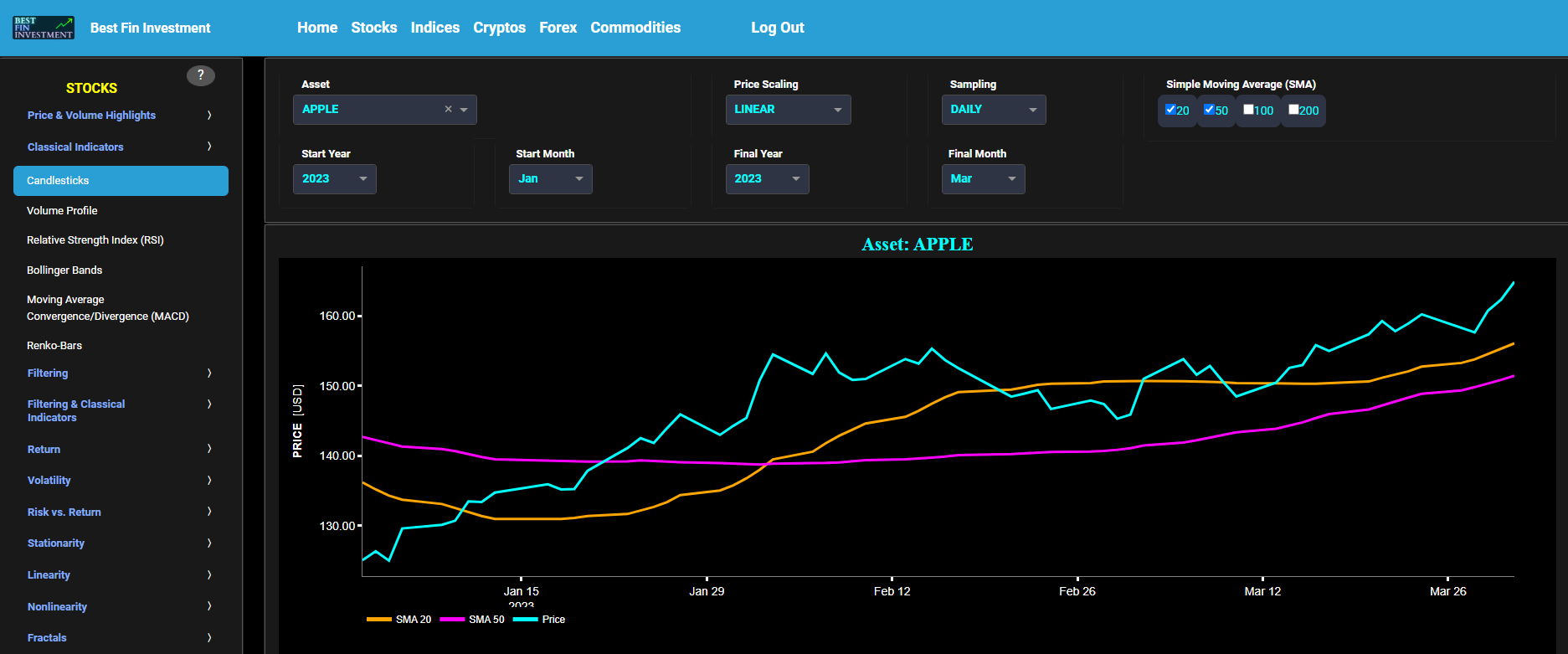

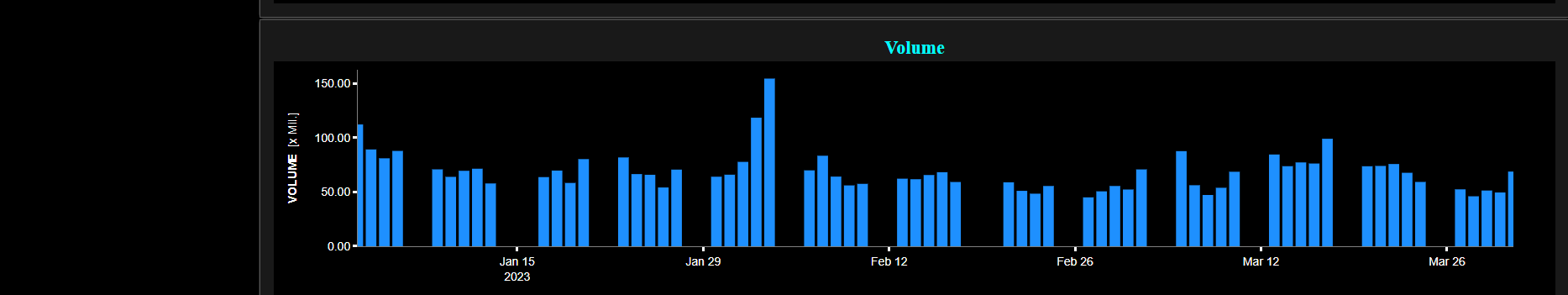

Candlesticks

This page provides 3 graphs. The upper graph visualizes historical asset prices using either daily, weekly, or monthly close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the y-axis. In addition the upper graph allows you to superimpose several Simple Moving Average (SMA) lines. The middle graph shows the corresponding candlesticks visualization, whereas the lower graph presents the volume indicator for the selected asset.

Classical Indicators

Volume Profile

This page provides 2 graphs. The upper graph visualizes historical asset prices using either daily, weekly, or monthly close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the y-axis. In addition the upper graph allows you to superimpose several Simple Moving Average (SMA) lines. Next the right-side of the upper graph shows the so-called Volume Profile chart, i.e. the volume histogram (bar graph) which represents the distribution of volume at each price level over a specific time period. This allows to visualize where the majority of trading activity occurred and may be useful to identify support and resistance levels based on volume distribution at various price levels. The lower graph shows the corresponding candlesticks visualization with its associated Volume Profile chart.

Classical Indicators

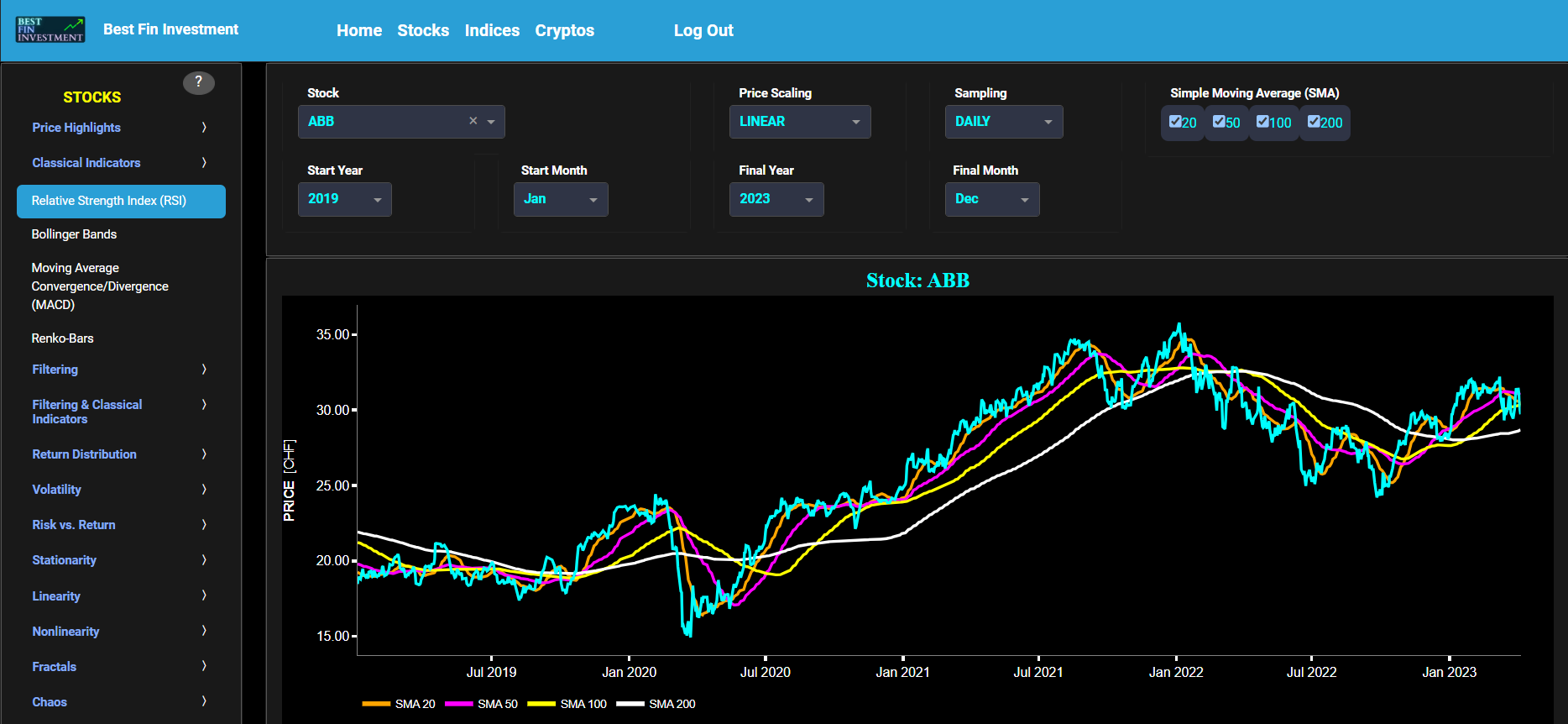

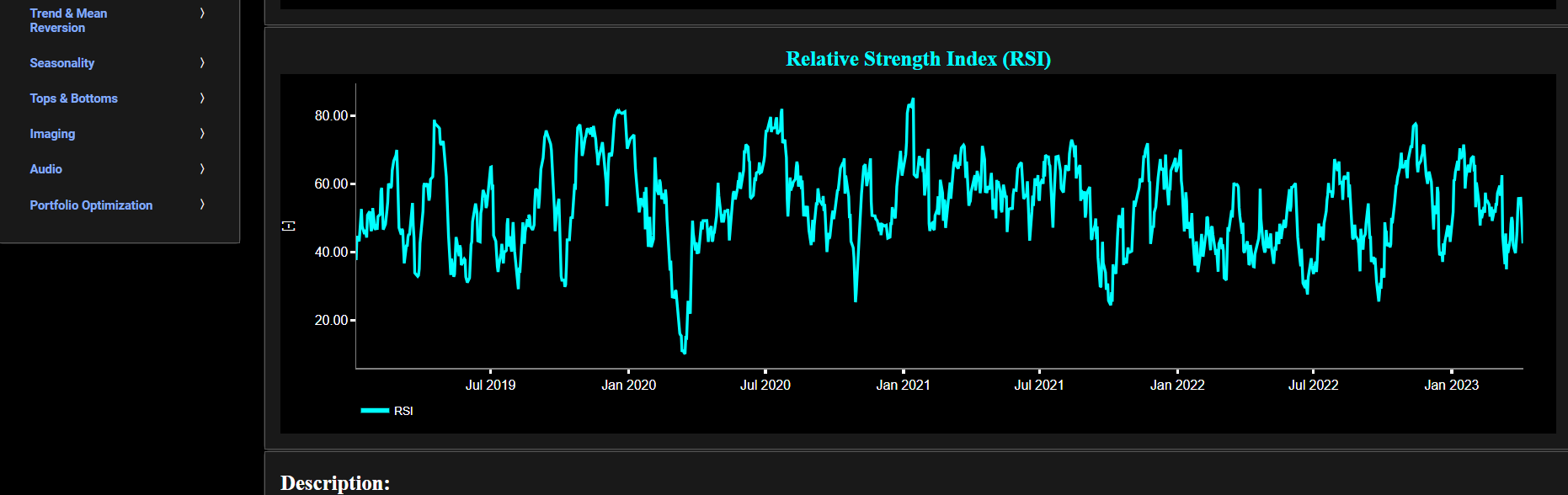

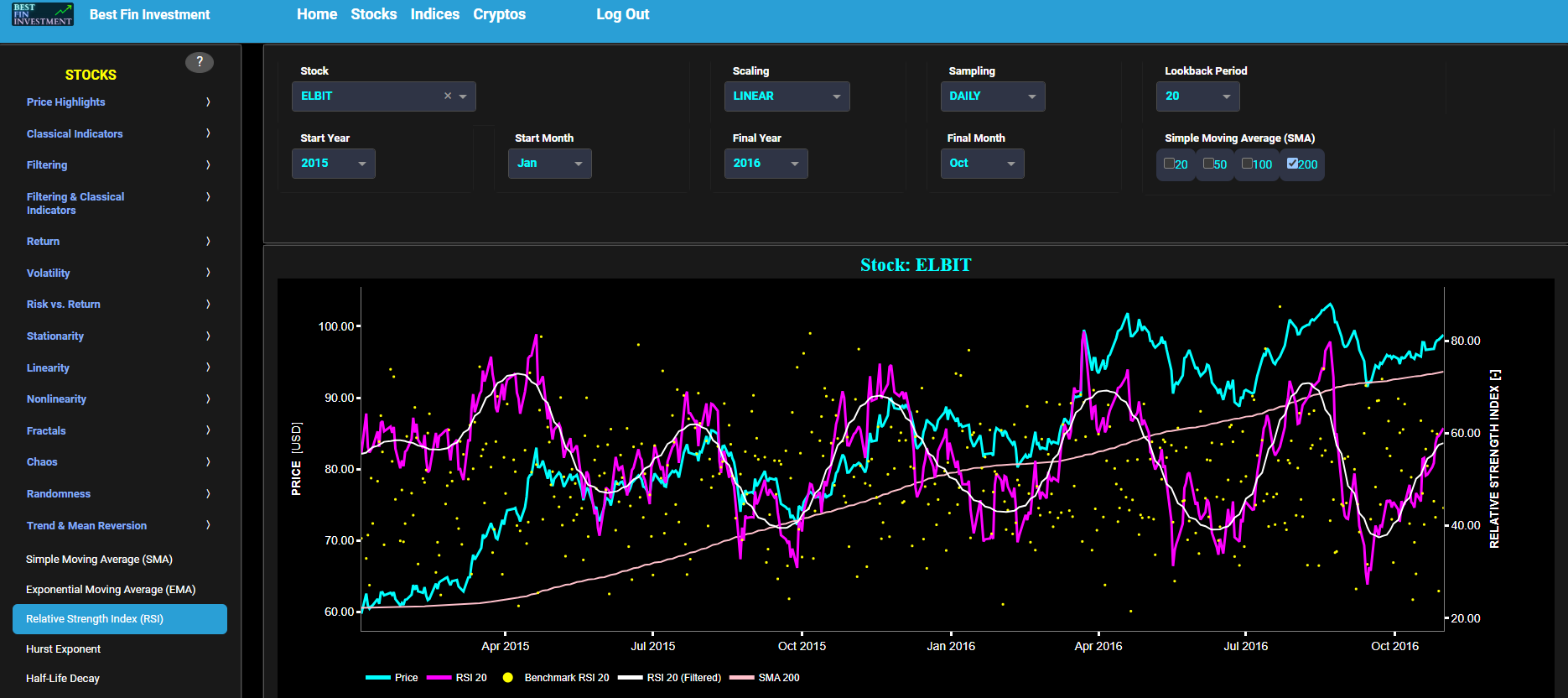

Relative Strength Index (RSI)

This page provides 2 graphs. The upper graph visualizes historical asset prices using either daily, weekly, or monthly close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the y-axis. In addition the upper graph allows you to superimpose several Simple Moving Average (SMA) lines. The lower graph presents the standard 14-period Relative Strength Index (RSI) indicator for the selected asset price data.

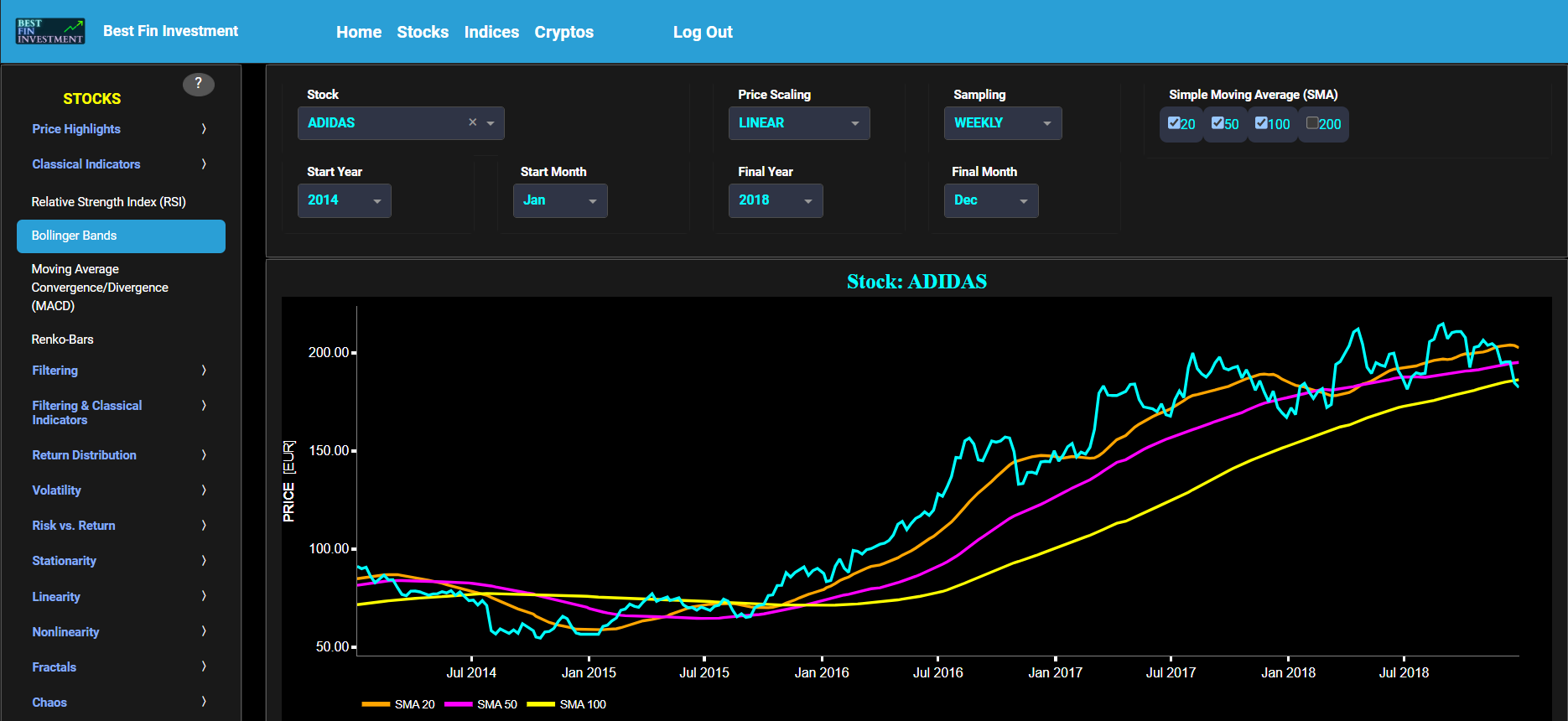

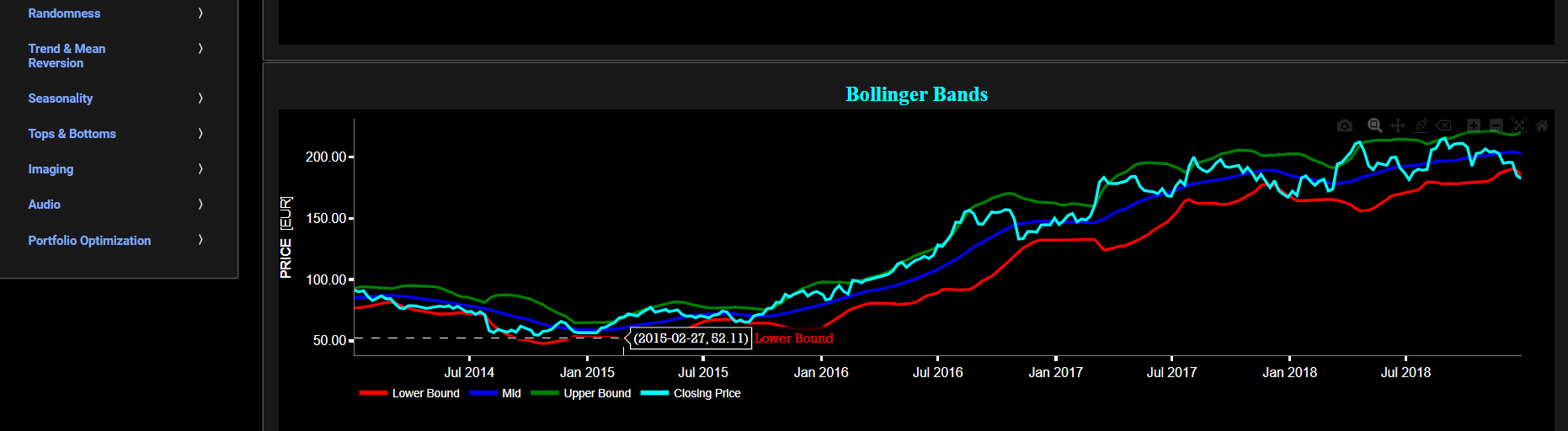

Classical Indicators

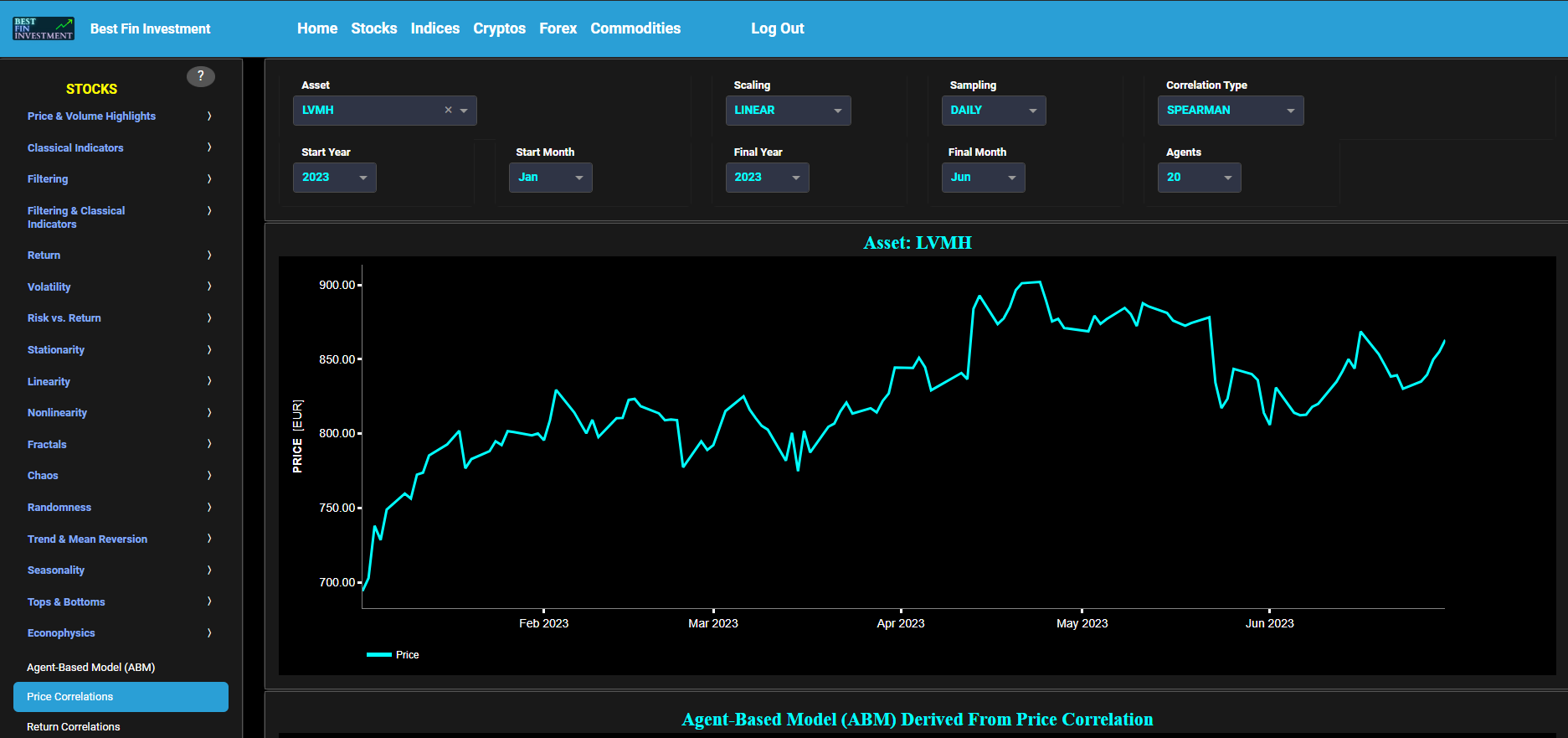

Bollinger Bands

This page provides 2 graphs. The upper graph visualizes historical asset prices using either daily, weekly, or monthly close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the y-axis. In addition the upper graph allows you to superimpose several Simple Moving Average (SMA) lines. The lower graph presents the Bollinger Bands indicator (computed on the basis of a standard 20-period moving average) for the selected asset price data.

Classical Indicators

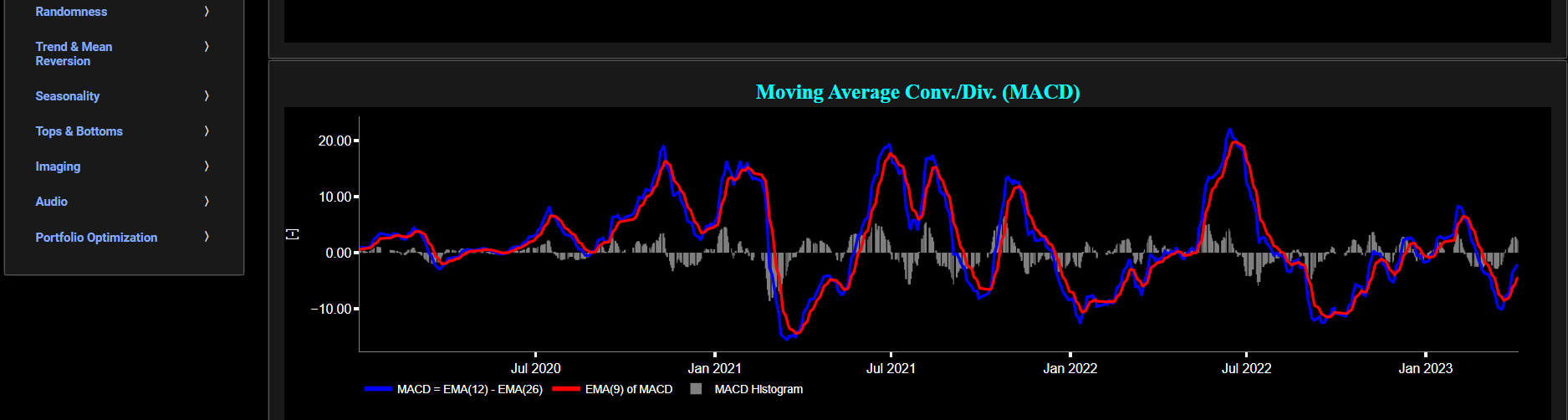

Moving Average Convergence/Divergence (MACD)

This page provides 2 graphs. The upper graph visualizes historical asset prices using either daily, weekly, or monthly close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the y-axis. In addition the upper graph allows you to superimpose several Simple Moving Average (SMA) lines. The lower graph presents the Moving Average Convergence/Divergence (MACD) indicator (using the standard 12,26,9 moving average periods) for the selected asset price data.

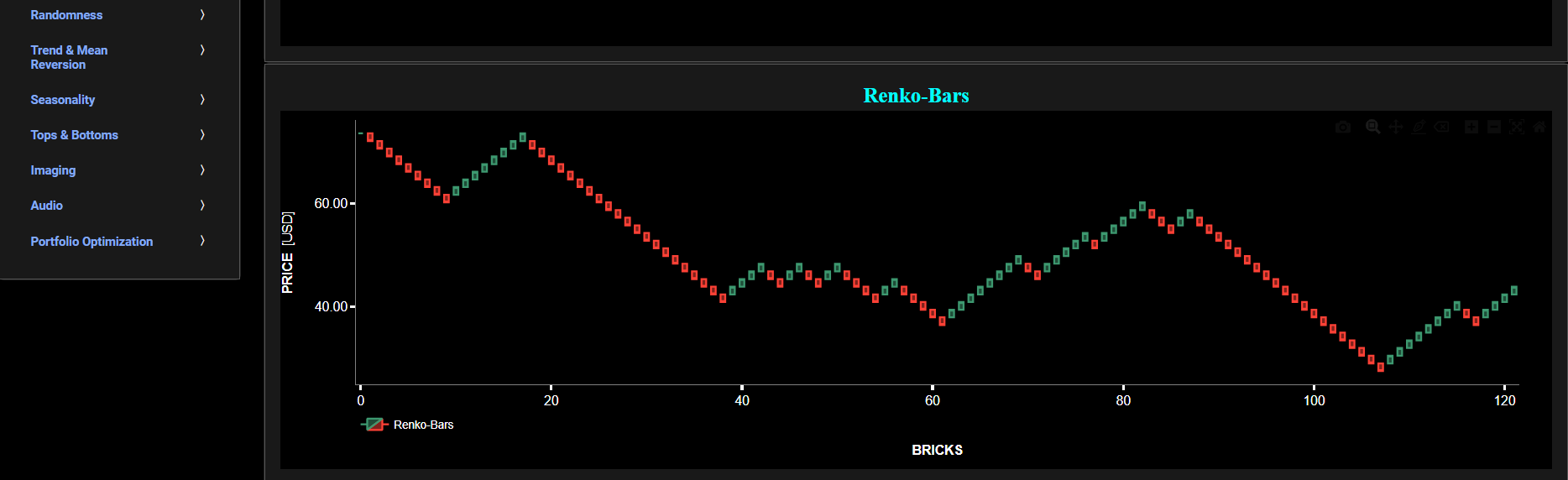

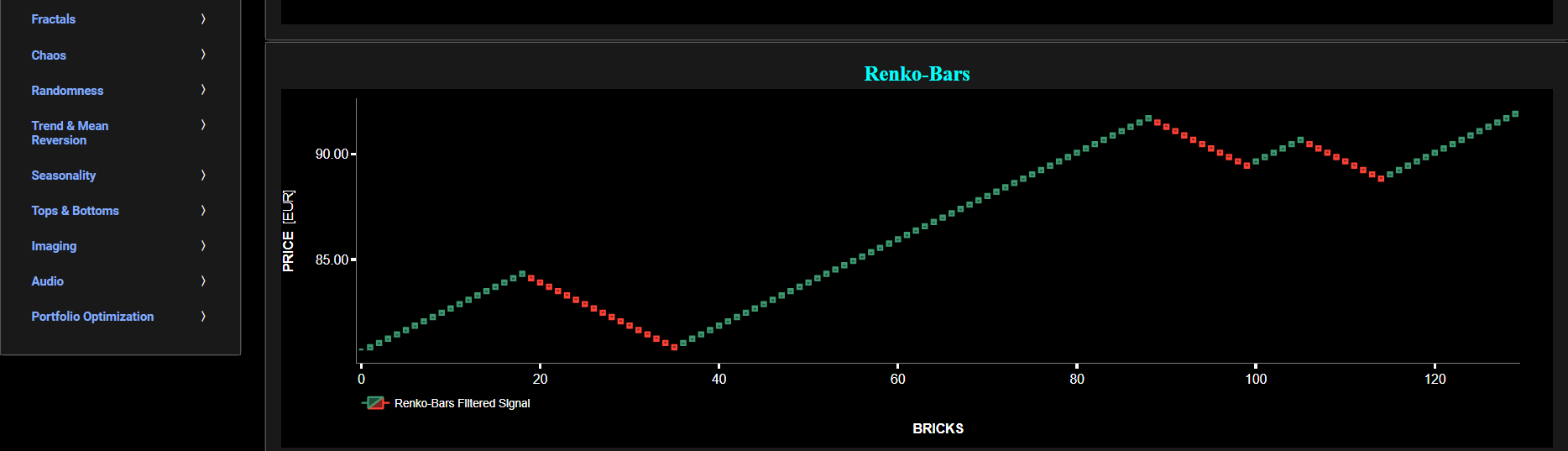

Classical Indicators

Renko-Bars

This page provides 2 graphs. The upper graph visualizes historical asset prices using either daily, weekly, or monthly close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the y-axis. In addition the upper graph allows you to superimpose several Simple Moving Average (SMA) lines. The lower graph presents the Renko Candle Bars indicator, using a simplified Average True Range (ATR) indicator, for the selected asset price data.

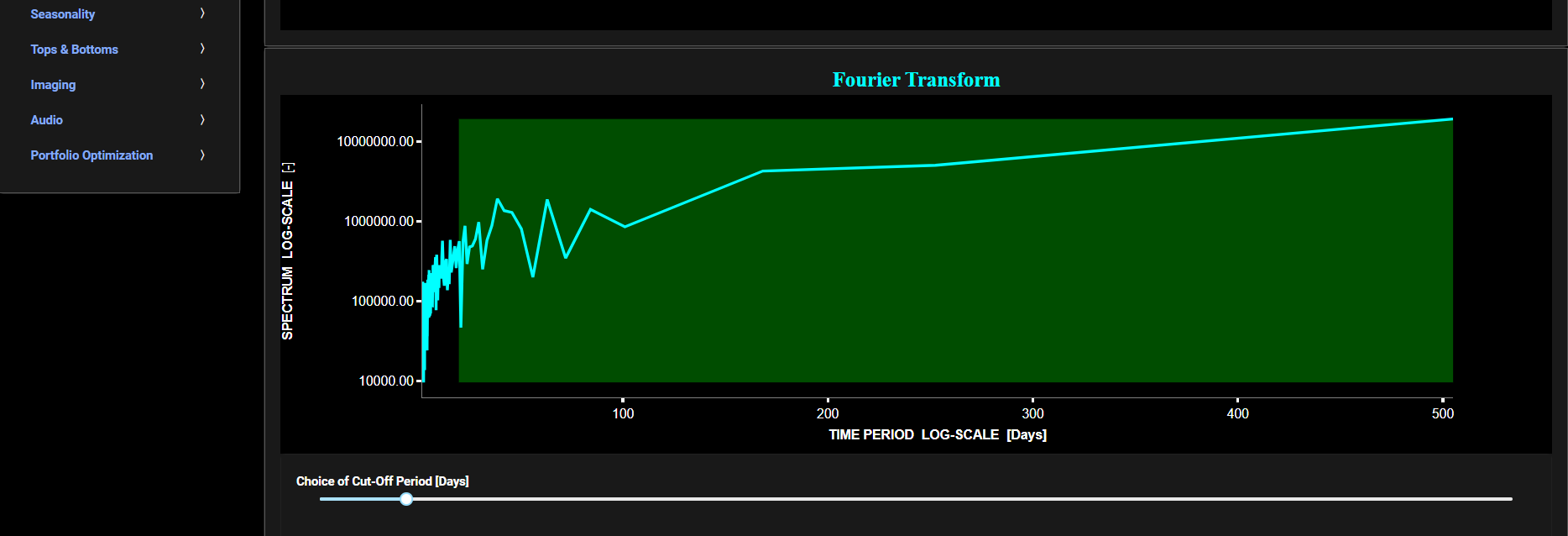

Filtering

Frequency Analysis

This page provides 2 graphs. The upper graph visualizes historical asset prices using either daily, weekly, or monthly close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the y-axis. In addition the upper graph allows you to superimpose several Simple Moving Average (SMA) lines.

The lower graph presents the frequency content of the price signal, computed through Fast Fourier Transform (FFT). For the lower graph x-axis unit we chose a more recognizable time period unit (expressed in Days) rather than the typical Herz metric. Beneath the lower graph there is a horizontal sliding bar which allows to select the cut-off time period (Tc, with Tc expressed in Days) of a low-pass filter. The purpose of such a filter would be to smoothen-out the original price signal, i.e. by filtering out (or removing) any high-frequency content (with frequency > 1/Tc), or equivalently, by removing the low-periodic signal content (with period < Tc). The green background color of the lower graph essentially represents the price signal-content which would be preserved, after the application of this low-pass filter. This cut-off period (Tc) will subsequently be used within the page “Butterworth Filter” to perform a low-pass Butterworth filtering.

Filtering

Butterworth Filter

This page visualizes historical asset prices using daily close prices. In the menu bar (located just above the graph), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the y-axis. In addition the graph allows you to superimpose several Simple Moving Average (SMA) lines. Next this page allows you to add a filtered price signal obtained through the application of a low-pass Butterworth digital filter. Note that here we use a causal implementation of the filter, meaning that the filter output at time t is only influenced by data points up to time t. The filter cut-off time period (Tc), with Tc expressed in Days, can also be selected in the menu bar. The purpose of this filter is to smoothen-out the original price signal, i.e. by filtering out (or removing) any high-frequency content (with frequency > 1/Tc), or equivalently, by removing the low-periodic signal content (with period < Tc). Refer also to the lower graph on page "Frequency Analysis" to help you select an appropriate cut-off time period value.

Butterworth filters are widely employed across various engineering disciplines, including aerospace, electrical, and mechanical engineering, to effectively filter and process digital signals. Further you can also compare the results obtained with this Butterworth filter with the ones obtained using a Wavelet filter on page “Wavelets Filter”. Finally, this filter will be used within the pages located under section “Filtering & Classical Indicators” to first filter out the price signal data before applying one of the classical indicators.

Filtering

Wavelets Filter

This page visualizes historical asset prices using daily close prices. In the menu bar (located just above the graph), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the y-axis. In addition the graph allows you to superimpose several Simple Moving Average (SMA) lines. Next this page allows you to add a filtered price signal obtained through the application of a Wavelet-based denoising filter. Please be aware that, unlike the Butterworth filter implementations used on other Dashboard pages, the current wavelet filter applied here is non-causal. This means the filter's output depends on both past and future data points, which can lead to potentially misleading or overly optimistic results, especially in real-time processing scenarios such as filtering daily asset prices. This non-causal filter is employed because standard wavelet functions are typically non-causal. However, after conducting tests using daily data, we have observed that the impact is not significant. Here we have used the "sym" (symmetric) wavelet family which tends to have good time-frequency localization properties and hence may be better suited for denoising non-stationary financial price data. The so-called Wavelet filter decomposition level can also be selected in the menu bar. The purpose of this filter is to smoothen-out the original price signal, i.e. by filtering out (or removing) any high-frequency content of the price signal.

Wavelet-based denoising filters are widely employed across various engineering disciplines, including aerospace, electrical, and mechanical engineering, to effectively filter and process digital signals. Further you can also compare the results obtained with this Wavelet filter with the ones obtained using a Butterworth filter on page “Butterworth Filter”. Finally, this filter will be used within the pages located under section “Filtering & Classical Indicators” to first filter out the price signal data before applying one of the classical indicators.

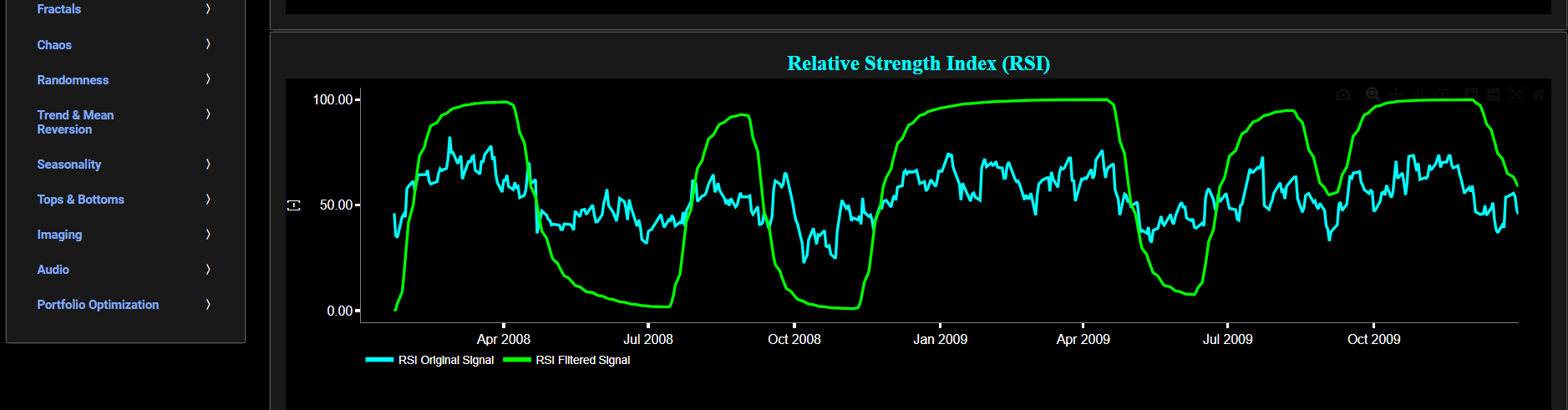

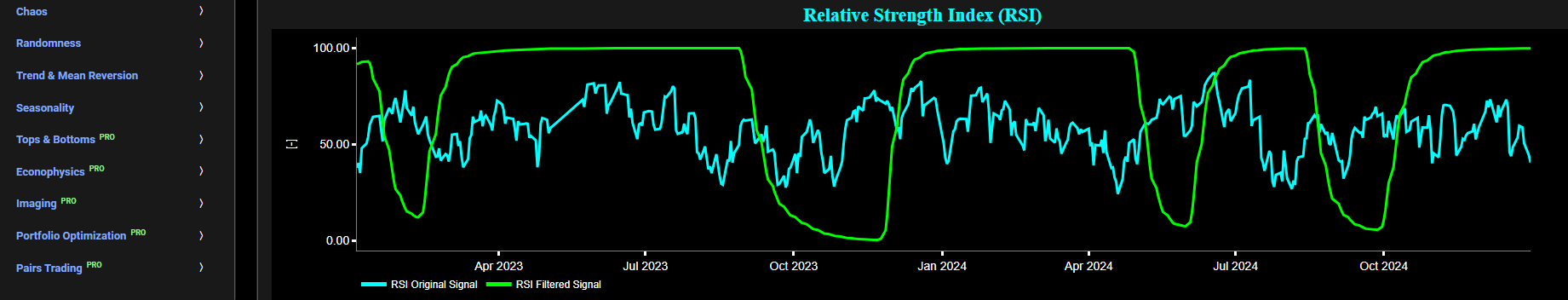

Filtering & Classical Indicators

RSI (Butterworth)

This page provides 2 graphs. The upper graph visualizes historical asset prices using daily close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the y-axis. In addition the upper graph allows you to superimpose several Simple Moving Average (SMA) lines. Next this page allows you to add a filtered price signal obtained through the application of a causal low-pass Butterworth digital filter. The filter cut-off time period (Tc), with Tc expressed in Days, can also be selected in the menu bar. The purpose of this filter is to smoothen-out the original price signal, i.e. by filtering out (or removing) any high-frequency content (with frequency > 1/Tc), or equivalently, by removing the low-periodic signal content (with period < Tc). Refer also to the lower graph on page "Frequency Analysis" to help you select an appropriate cut-off time period value.

The lower graph presents the standard 14-period Relative Strength Index (RSI) indicator applied to the original (unfiltered) price signal data and subsequently applied to the filtered (Butterworth) price signal data. Finally you can also compare the results obtained on this page with the ones obtained using a Wavelet filter on page “RSI (Wavelets)”.

Filtering & Classical Indicators

RRSI Optimization (Butterworth Filter)

This page provides 2 graphs. The upper graph visualizes historical asset prices using daily close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the y-axis. Next this page allows you to add a filtered price signal obtained through the application of a causal low-pass Butterworth digital filter. The filter cut-off time period (Tc), with Tc expressed in Days, can also be selected in the menu bar. The purpose of this filter is to smoothen-out the original price signal, i.e. by filtering out (or removing) any high-frequency content (with frequency > 1/Tc), or equivalently, by removing the low-periodic signal content (with period < Tc). Refer also to the lower graph on page "Frequency Analysis" to help you select an appropriate cut-off time period value. In addition, the upper graph allows you to superimpose a user-defined Simple Moving Average (SMA) line where its lookback time period (in Days) is set in a dedicated dropdown menu named "SMA Period". This SMA is applied to the original unfiltered price signal.

Further, the lower graph also allows you to superimpose a user-defined Relative Strength Index (RSI) line where its lookback time period (in Days) is also set by a dedicated dropdown menu named "RSI Period". This enables you to compare the RSI indicator applied to the original (unfiltered) price signal data with the RSI indicator applied to the filtered (Butterworth) price signal data.

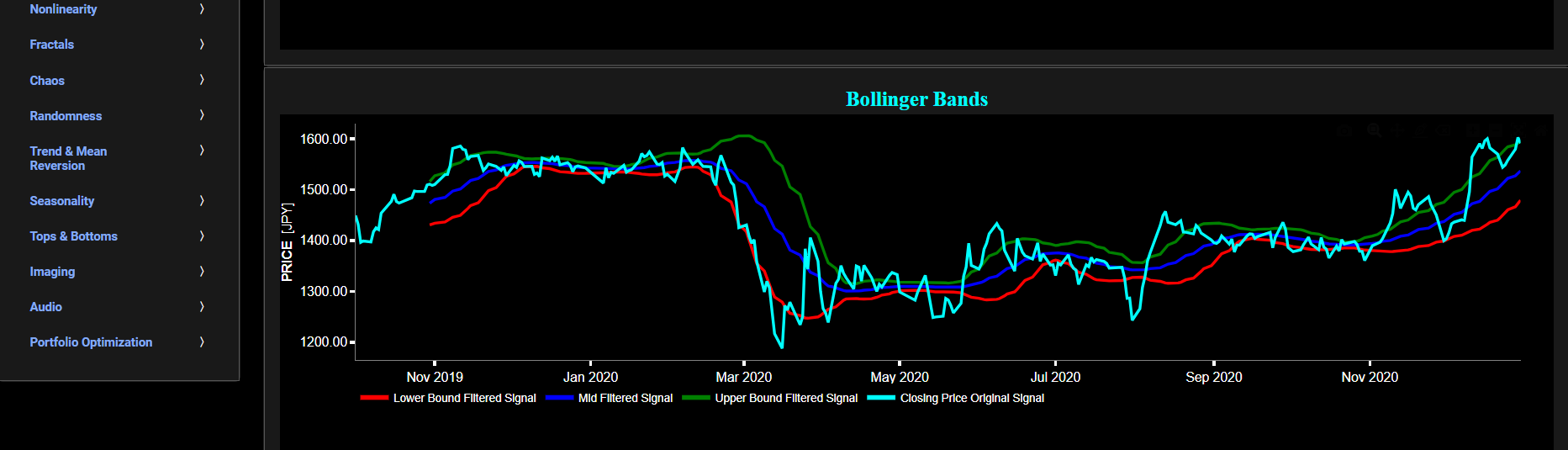

Filtering & Classical Indicators

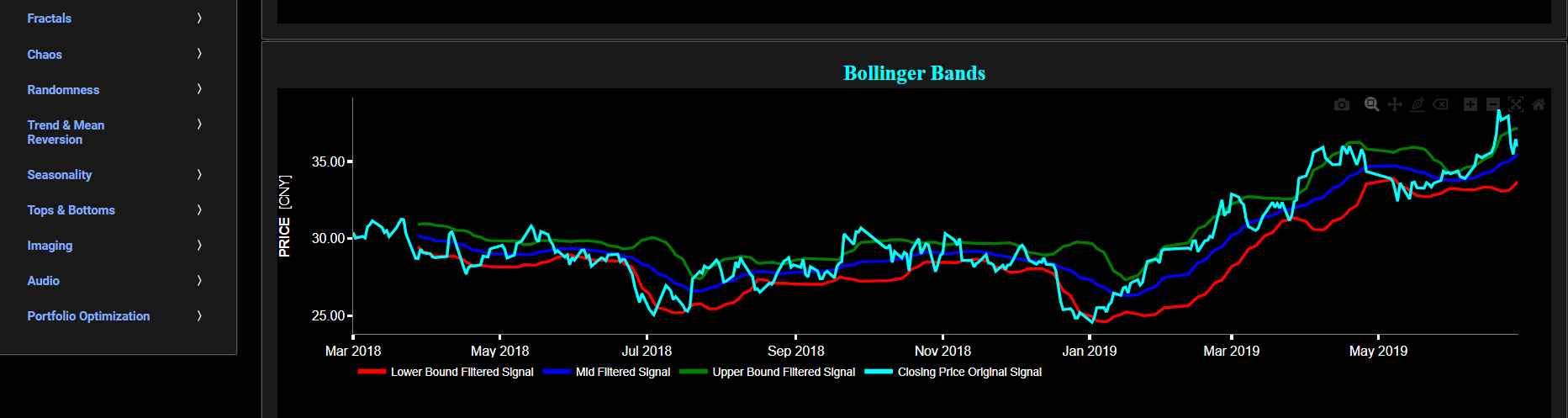

Bollinger Bands (Butterworth)

This page provides 2 graphs. The upper graph visualizes historical asset prices using daily close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the y-axis. In addition the upper graph allows you to superimpose several Simple Moving Average (SMA) lines. Next this page allows you to add a filtered price signal obtained through the application of a causal low-pass Butterworth digital filter. The filter cut-off time period (Tc), with Tc expressed in Days, can also be selected in the menu bar. The purpose of this filter is to smoothen-out the original price signal, i.e. by filtering out (or removing) any high-frequency content (with frequency > 1/Tc), or equivalently, by removing the low-periodic signal content (with period < Tc). Refer also to the lower graph on page "Frequency Analysis" to help you select an appropriate cut-off time period value.

The lower graph presents the Bollinger Bands indicator (computed on the basis of a standard 20-period moving average) and applied towards the filtered (Butterworth) price signal data. The lower graph also shows, in cyan color, the original (unfiltered) price signal data. Finally you can also compare the results obtained on this page with the ones obtained using a Wavelet filter on page “Bollinger Bands (Wavelets)”.

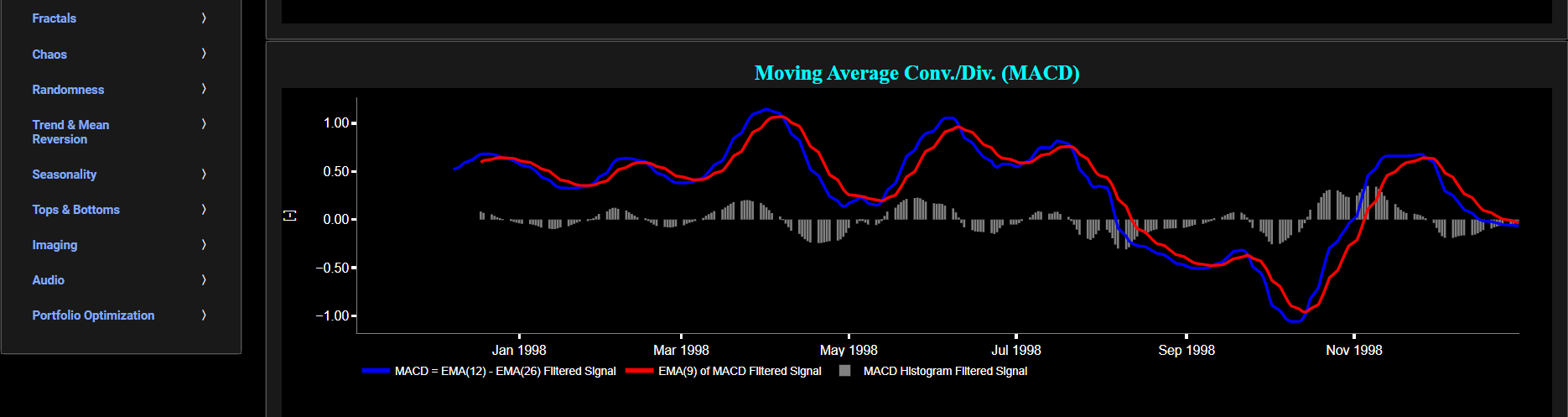

Filtering & Classical Indicators

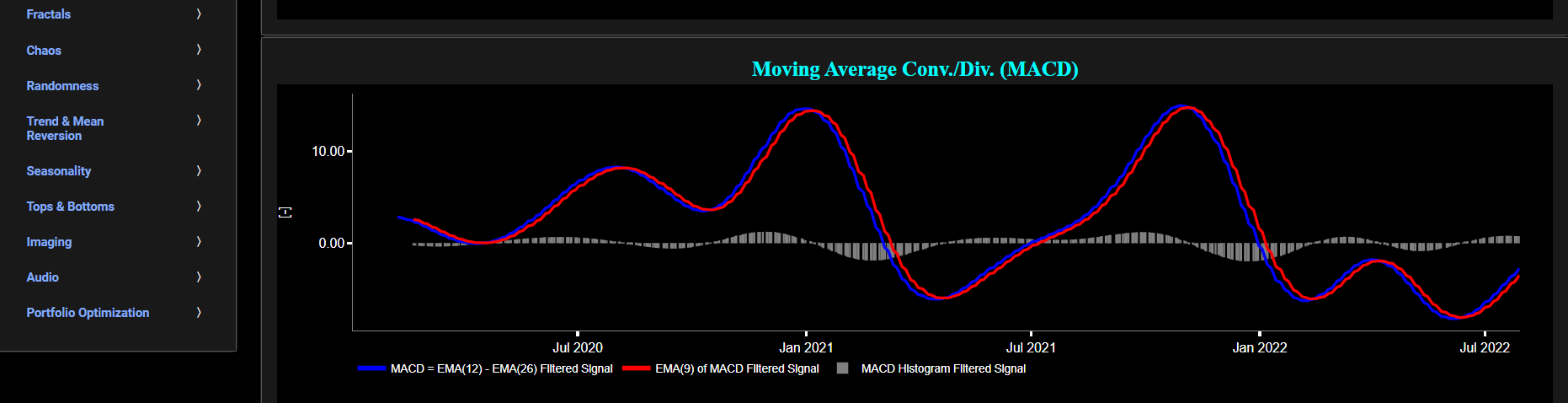

MACD (Butterworth)

This page provides 2 graphs. The upper graph visualizes historical asset prices using daily close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the y-axis. In addition the upper graph allows you to superimpose several Simple Moving Average (SMA) lines. Next this page allows you to add a filtered price signal obtained through the application of a causal low-pass Butterworth digital filter. The filter cut-off time period (Tc), with Tc expressed in Days, can also be selected in the menu bar. The purpose of this filter is to smoothen-out the original price signal, i.e. by filtering out (or removing) any high-frequency content (with frequency > 1/Tc), or equivalently, by removing the low-periodic signal content (with period < Tc). Refer also to the lower graph on page "Frequency Analysis" to help you select an appropriate cut-off time period value.

The lower graph presents the Moving Average Convergence/Divergence (MACD) indicator (using the standard 12,26,9 moving average periods) and applied towards the filtered (Butterworth) price signal data. Finally you can also compare the results obtained on this page with the ones obtained using a Wavelet filter on page “MACD (Wavelets)”.

Filtering & Classical Indicators

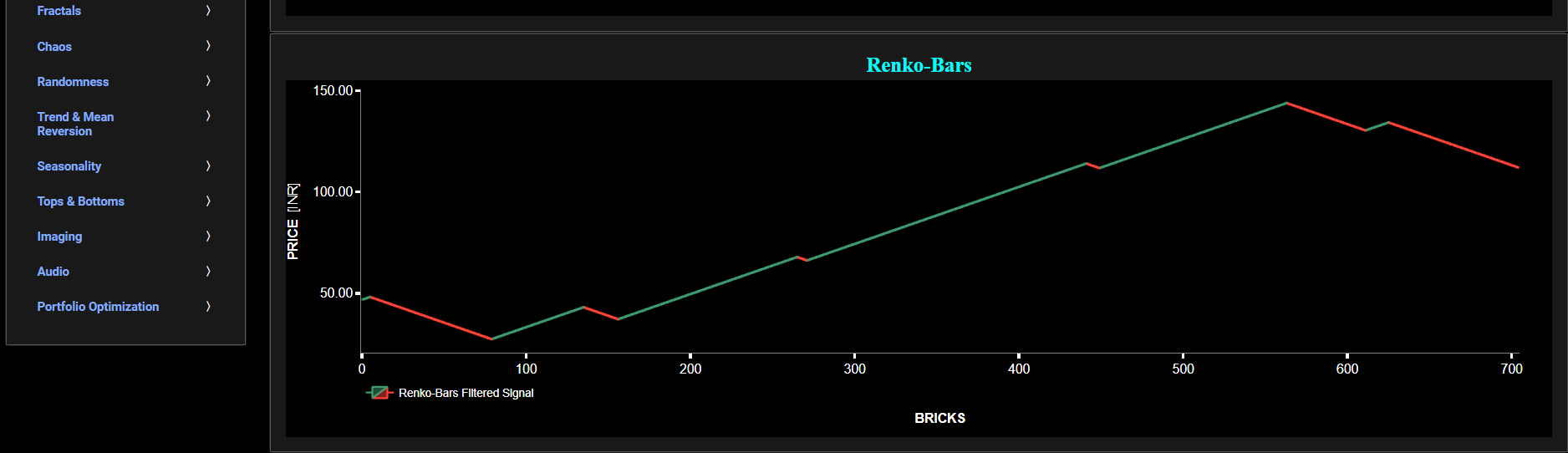

Renko-Bars (Butterworth)

This page provides 2 graphs. The upper graph visualizes historical asset prices using daily close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the y-axis. In addition the upper graph allows you to superimpose several Simple Moving Average (SMA) lines. Next this page allows you to add a filtered price signal obtained through the application of a causal low-pass Butterworth digital filter. The filter cut-off time period (Tc), with Tc expressed in Days, can also be selected in the menu bar. The purpose of this filter is to smoothen-out the original price signal, i.e. by filtering out (or removing) any high-frequency content (with frequency > 1/Tc), or equivalently, by removing the low-periodic signal content (with period < Tc). Refer also to the lower graph on page "Frequency Analysis" to help you select an appropriate cut-off time period value.

The lower graph presents the Renko Candle Bars indicator, using a simplified Average True Range (ATR) indicator, and applied towards the filtered (Butterworth) price signal data. Finally you can also compare the results obtained on this page with the ones obtained using a Wavelet filter on page “Renko-Bars (Wavelets)”.

Filtering & Classical Indicators

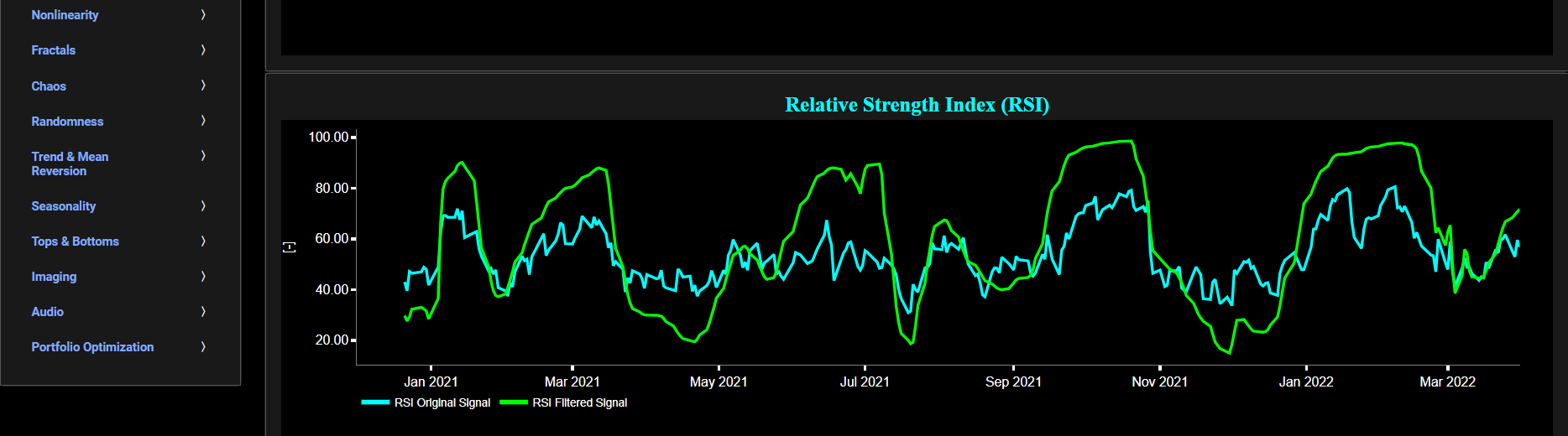

RSI (Wavelets)

This page provides 2 graphs. The upper graph visualizes historical asset prices using daily close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the y-axis. In addition the upper graph allows you to superimpose several Simple Moving Average (SMA) lines. Next this page allows you to add a filtered price signal obtained through the application of a Wavelet-based denoising filter. Here we have used the "sym" (symmetric) wavelet family which tends to have good time-frequency localization properties and hence may be better suited for denoising non-stationary financial price data. The so-called Wavelet filter decomposition level can also be selected in the menu bar. The purpose of this filter is to smoothen-out the original price signal, i.e. by filtering out (or removing) any high-frequency content.

The lower graph presents the standard 14-period Relative Strength Index (RSI) indicator applied to the original (unfiltered) price signal data and subsequently applied to the filtered (Wavelets) price signal data. Finally you can also compare the results obtained on this page with the ones obtained using a Butterworth filter on page “RSI (Butterworth)”.

Filtering & Classical Indicators

Bollinger Bands (Wavelets)

This page provides 2 graphs. The upper graph visualizes historical asset prices using daily close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the y-axis. In addition the upper graph allows you to superimpose several Simple Moving Average (SMA) lines. Next this page allows you to add a filtered price signal obtained through the application of a Wavelet-based denoising filter. Here we have used the "sym" (symmetric) wavelet family which tends to have good time-frequency localization properties and hence may be better suited for denoising non-stationary financial price data. The so-called Wavelet filter decomposition level can also be selected in the menu bar. The purpose of this filter is to smoothen-out the original price signal, i.e. by filtering out (or removing) any high-frequency content.

The lower graph presents the Bollinger Bands indicator (computed on the basis of a standard 20-period moving average) and applied towards the filtered (Wavelets) price signal data. The lower graph also shows, in cyan color, the original (unfiltered) price signal data. Finally you can also compare the results obtained on this page with the ones obtained using a Butterworth filter on page “Bollinger Bands (Butterworth)”.

Filtering & Classical Indicators

MACD (Wavelets)

This page provides 2 graphs. The upper graph visualizes historical asset prices using daily close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the y-axis. In addition the upper graph allows you to superimpose several Simple Moving Average (SMA) lines. Next this page allows you to add a filtered price signal obtained through the application of a Wavelet-based denoising filter. Here we have used the "sym" (symmetric) wavelet family which tends to have good time-frequency localization properties and hence may be better suited for denoising non-stationary financial price data. The so-called Wavelet filter decomposition level can also be selected in the menu bar. The purpose of this filter is to smoothen-out the original price signal, i.e. by filtering out (or removing) any high-frequency content.

The lower graph presents the Moving Average Convergence/Divergence (MACD) indicator (using the standard 12,26,9 moving average periods) and applied towards the filtered (Wavelets) price signal data. Finally you can also compare the results obtained on this page with the ones obtained using a Butterworth filter on page “MACD (Butterworth)”.

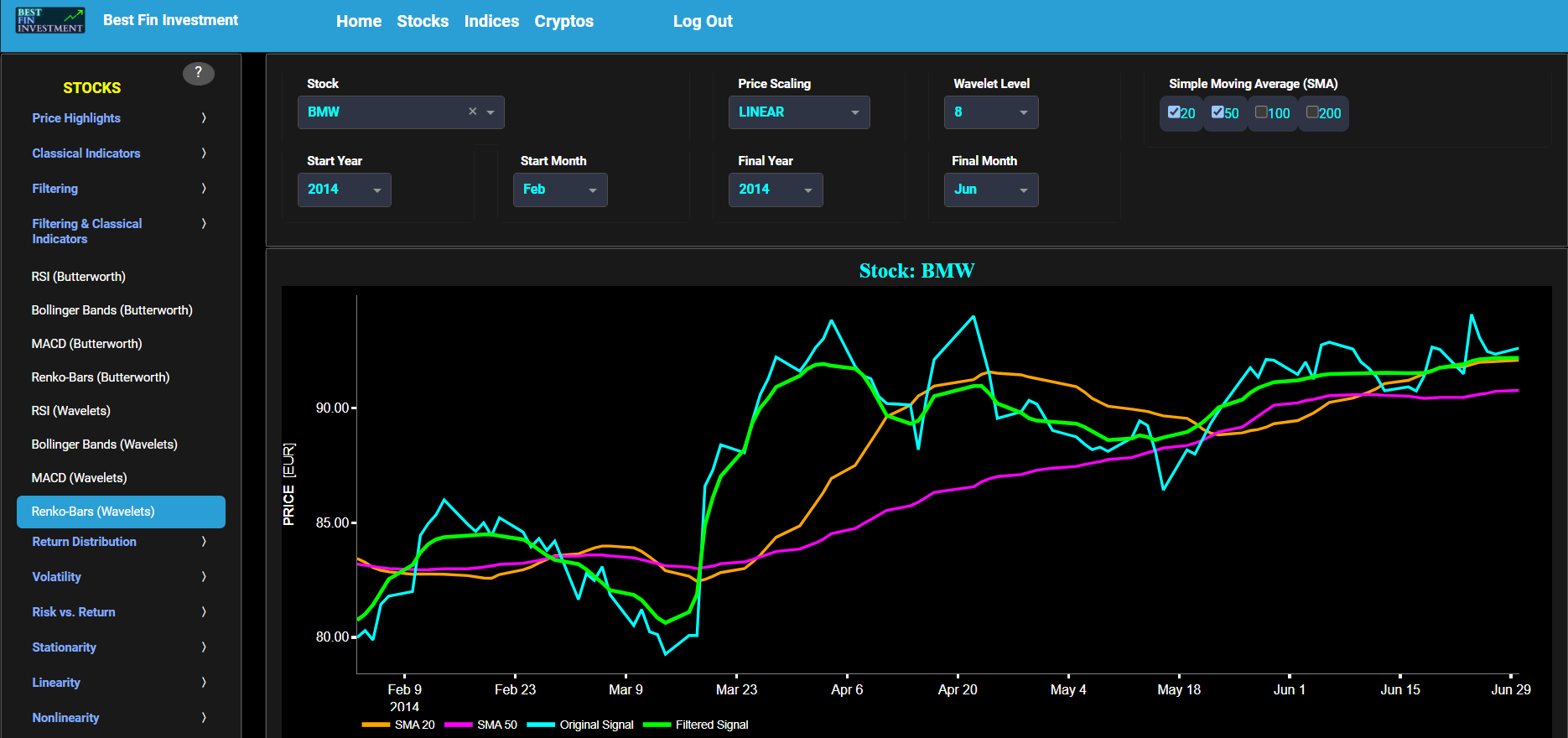

Filtering & Classical Indicators

Renko-Bars (Wavelets)

This page provides 2 graphs. The upper graph visualizes historical asset prices using daily close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the y-axis. In addition the upper graph allows you to superimpose several Simple Moving Average (SMA) lines. Next this page allows you to add a filtered price signal obtained through the application of a Wavelet-based denoising filter. Here we have used the "sym" (symmetric) wavelet family which tends to have good time-frequency localization properties and hence may be better suited for denoising non-stationary financial price data. The so-called Wavelet filter decomposition level can also be selected in the menu bar. The purpose of this filter is to smoothen-out the original price signal, i.e. by filtering out (or removing) any high-frequency content.

The lower graph presents the Renko Candle Bars indicator, using a simplified Average True Range (ATR) indicator, and applied towards the filtered (Wavelets) price signal data. Finally you can also compare the results obtained on this page with the ones obtained using a Butterworth filter on page “Renko-Bars (Butterworth)”.

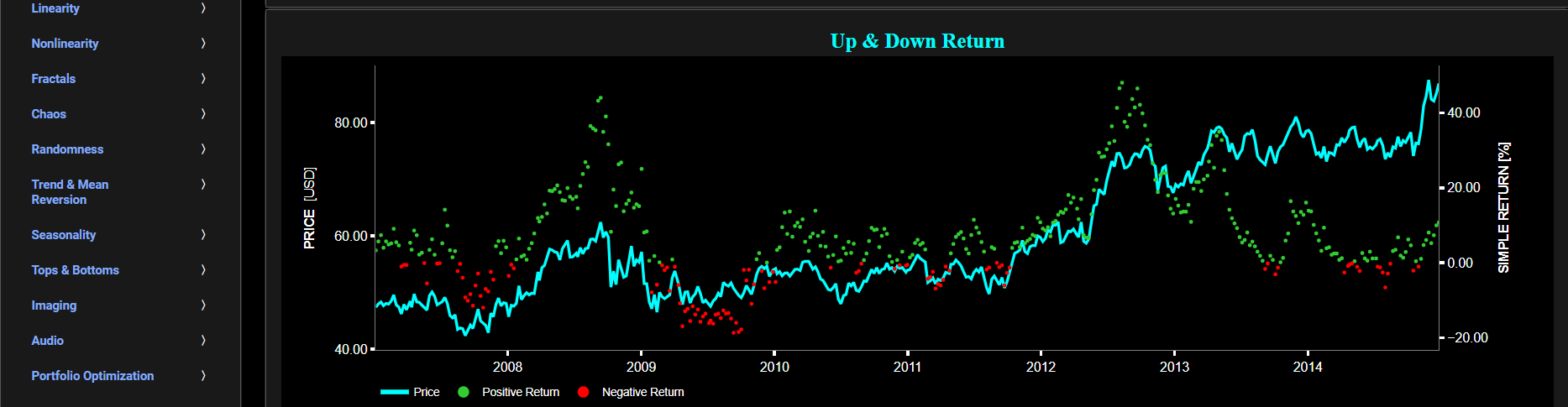

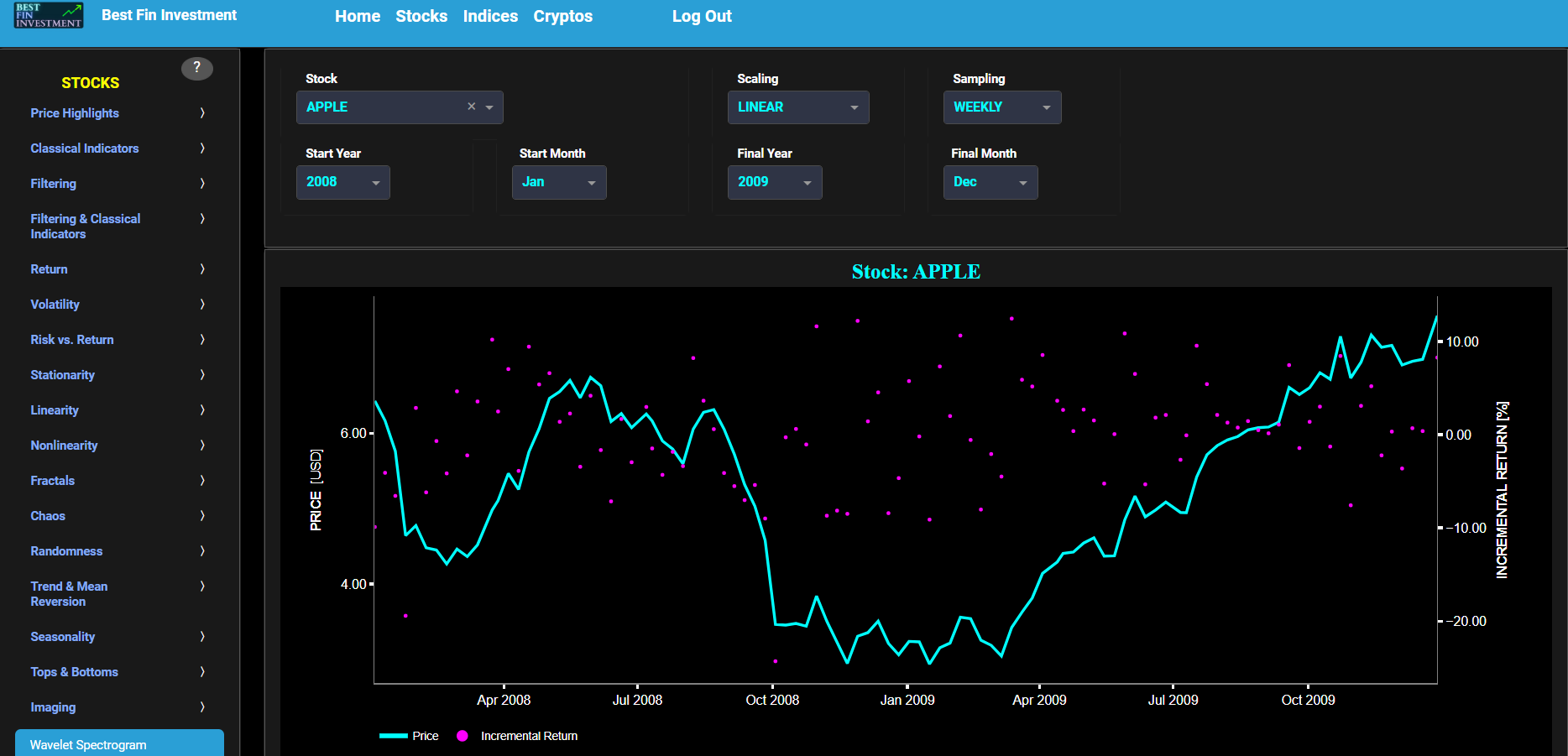

Return

Simple Return (Historical Observations)

This page provides 2 graphs. The upper graph visualizes historical asset prices in cyan color using either daily, weekly, or monthly close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the left y-axis. Next to the cyan line, the upper graph also visualizes the historical asset return. Here, return is defined as the simple asset return which is quantified as the percentage change in the asset's price between consecutive time periods. This return is shown in magenta and expressed on the right y-axis. The return is computed using a lookback time period that is selected in the menu bar (located just above the graphs). The lower graph is somewhat similar to the upper graph except that it shows the positive price returns in green and negative price returns in red.

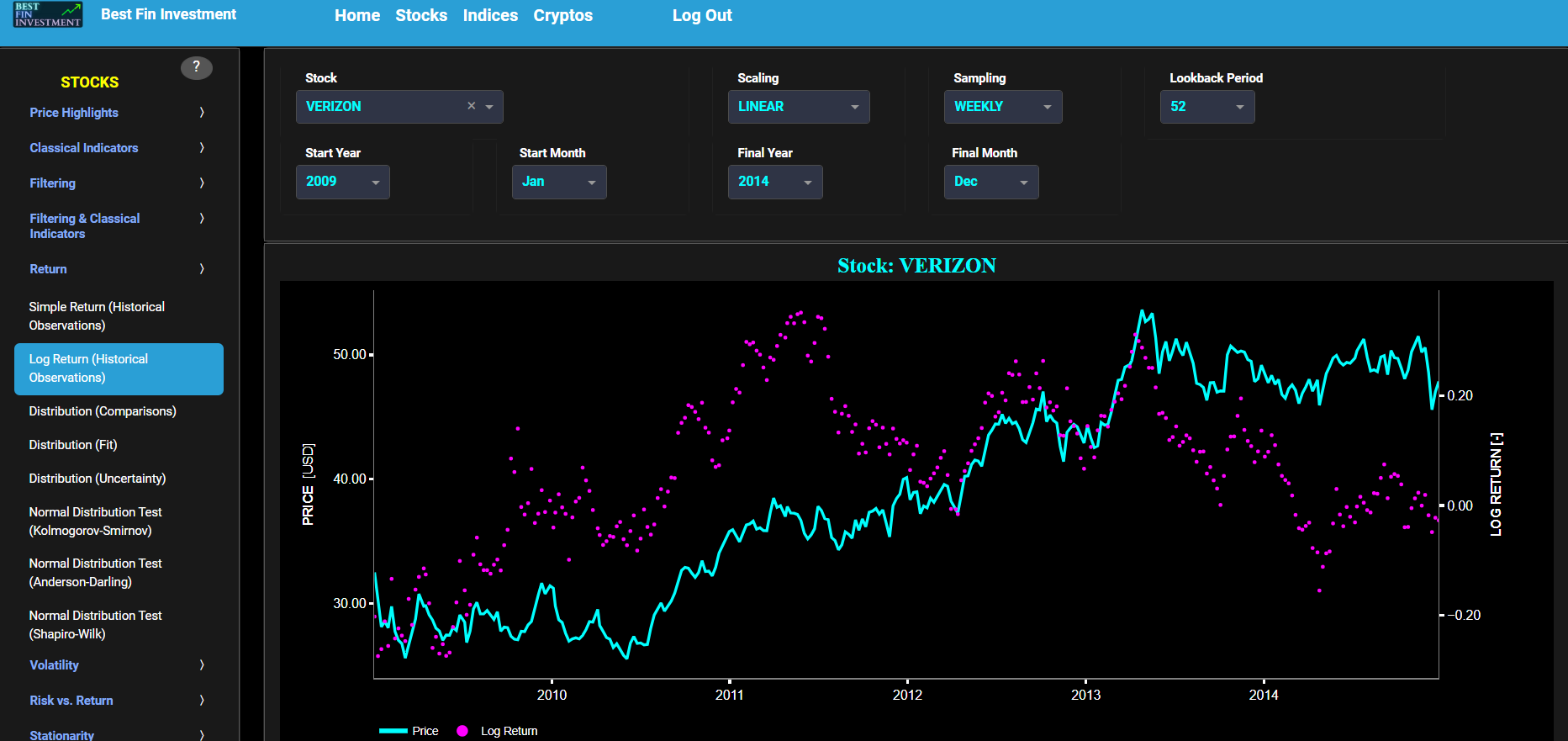

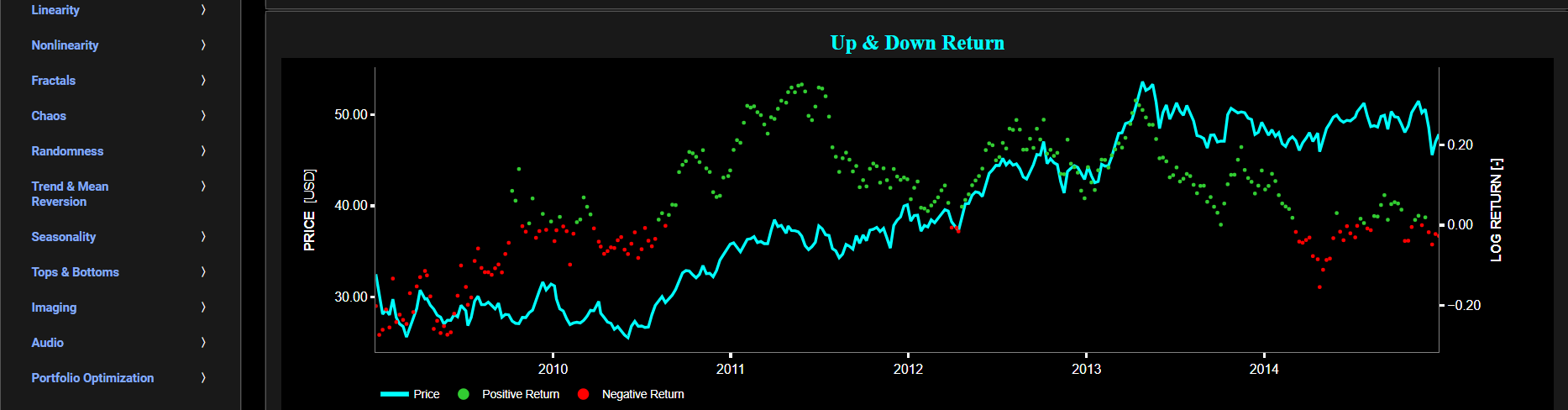

Return

Log Return (Historical Observations)

This page provides 2 graphs. The upper graph visualizes historical asset prices in cyan color using either daily, weekly, or monthly close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the left y-axis. Next to the cyan line, the upper graph also visualizes the historical asset log return. Here, log return is defined as the natural logarithm of the asset price percentage change between consecutive time periods. This return is shown in magenta and expressed on the right y-axis. The return is computed using a lookback time period that is selected in the menu bar (located just above the graphs). The lower graph is somewhat similar to the upper graph except that it shows the positive price returns in green and negative price returns in red.

Return

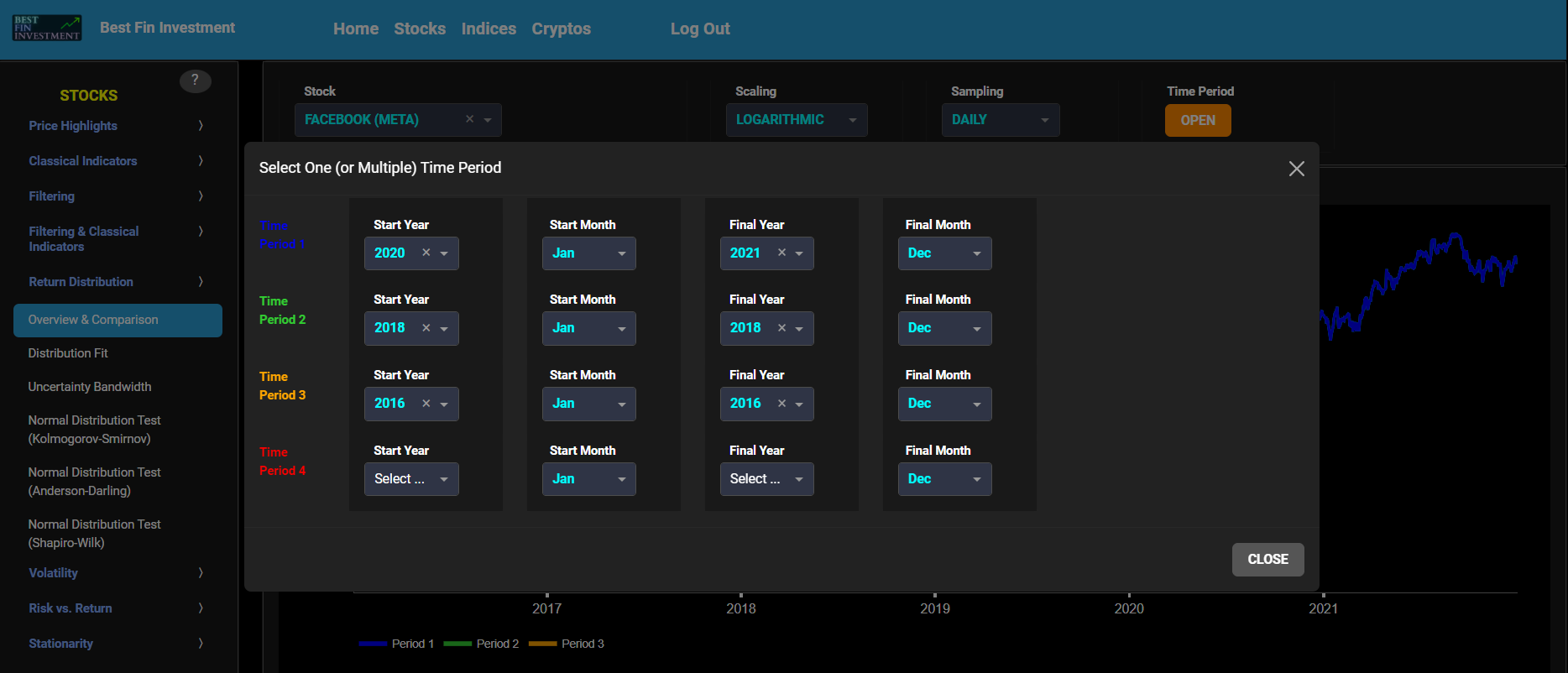

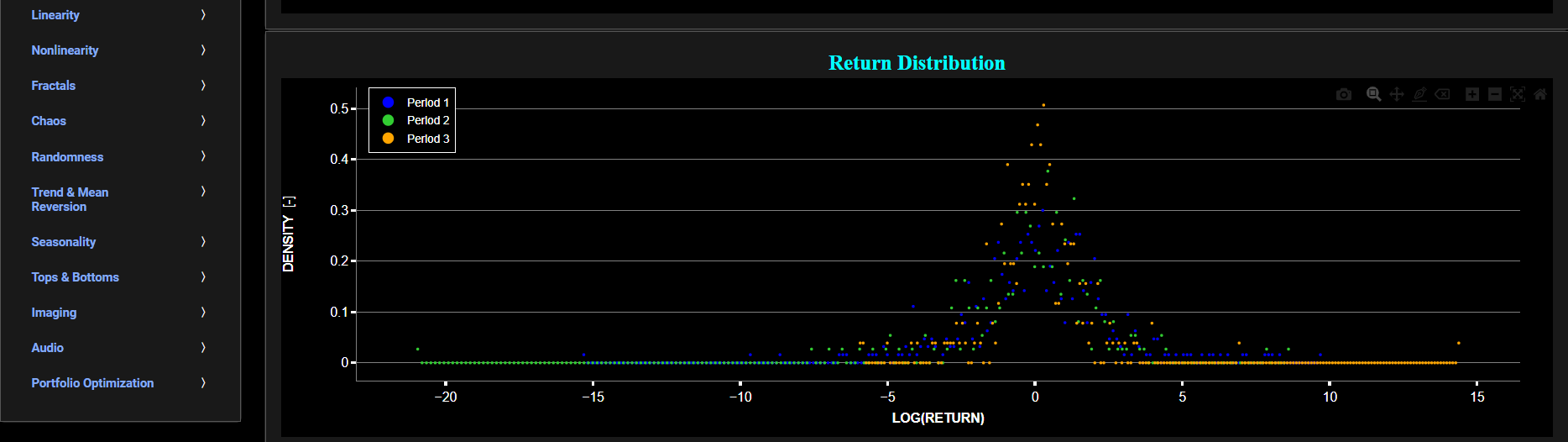

Distribution (Comparisons)

This page provides 2 graphs. The upper graph visualizes historical asset prices using either daily, weekly, or monthly close prices. In the menu bar (located just above the graphs), you can also toggle between a linear or logarithmic price scaling on the y-axis. Further you can select up to 4 specific time periods for comparisons of return distributions. Here price “return” is defined as the % change between 2 consecutive close prices. The lower graph visualizes the corresponding return distributions for the selected asset price data. Note that if logarithmic scaling is selected, the lower graph will then provide the distribution of the logarithm of price returns.

Return

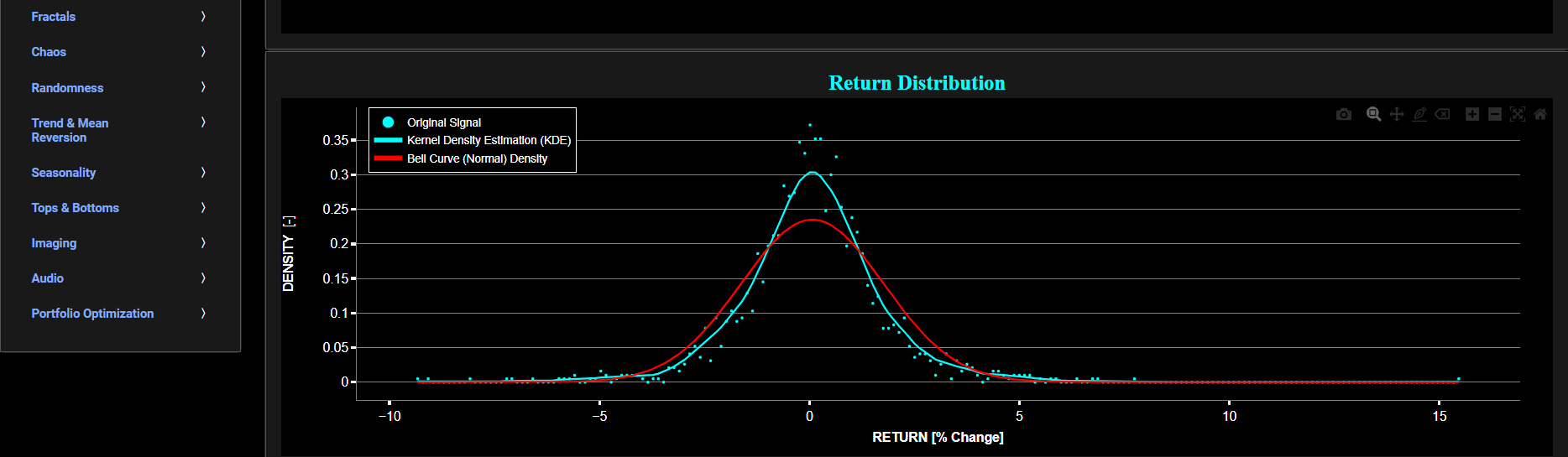

Distribution (Fit)

This page provides 2 graphs. The upper graph visualizes historical asset prices using either daily, weekly, or monthly close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the y-axis. The lower graph visualizes the corresponding return distributions for the selected asset price data. Note that if logarithmic scaling is selected, the lower graph will then provide the distribution of the logarithm of price returns. Further, the lower graph also presents 2 additional lines. The line shown in red represents a normal density fit of the price return data, whereas the second line shown in cyan represents a fit from a Kernel Density Estimator (KDE) using “exponential” type kernels. In the menu bar (located just above the graphs), you can also select the number of bandwidth samples that will be used during the KDE identification process. The higher the number of bandwidth samples, the better the density fit albeit at the expense of increased computational cost. Once the number of bandwidth samples is selected the algorithm will then search for the optimal KDE bandwidth using a so-called leave-one-out cross-validation procedure.

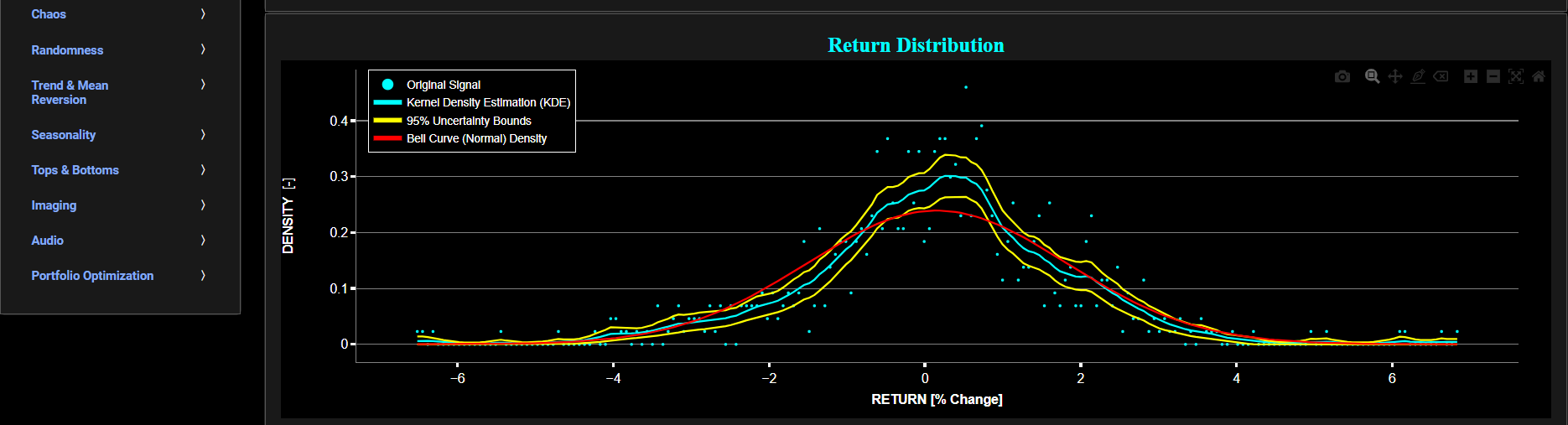

Return

Distribution (Uncertainty)

This page provides 2 graphs. The upper graph visualizes historical asset prices using either daily, weekly, or monthly close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the y-axis. The lower graph visualizes the corresponding return distributions for the selected asset price data. Note that if logarithmic scaling is selected, the lower graph will then provide the distribution of the logarithm of price returns. Further, the lower graph also presents 4 additional lines. The line shown in red represents a normal density fit of the price return data, whereas the line shown in cyan represents a fit from a Kernel Density Estimator (KDE) using “exponential” type kernels. The 2 yellow lines represent the 95% confidence interval (or uncertainty bounds) around the cyan line, obtained through a bootstrapping procedure. In the menu bar (located just above the graphs), you can also select the number of bootstrap samples that will be used during the confidence interval estimation. This number is also used during the KDE identification process. Note that the higher the number of bootstrap samples, the better the confidence interval estimation albeit at the expense of increased computational cost.

Return

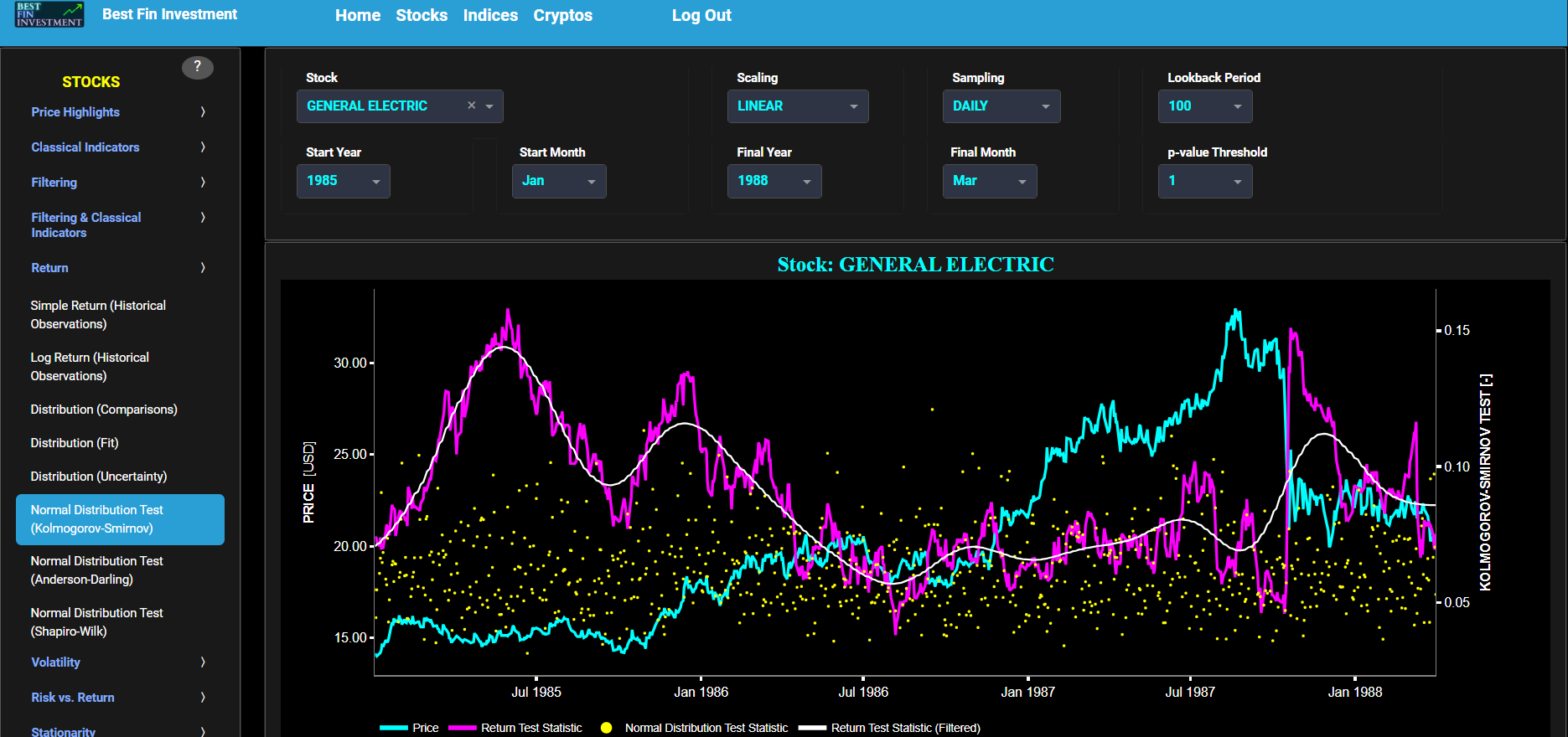

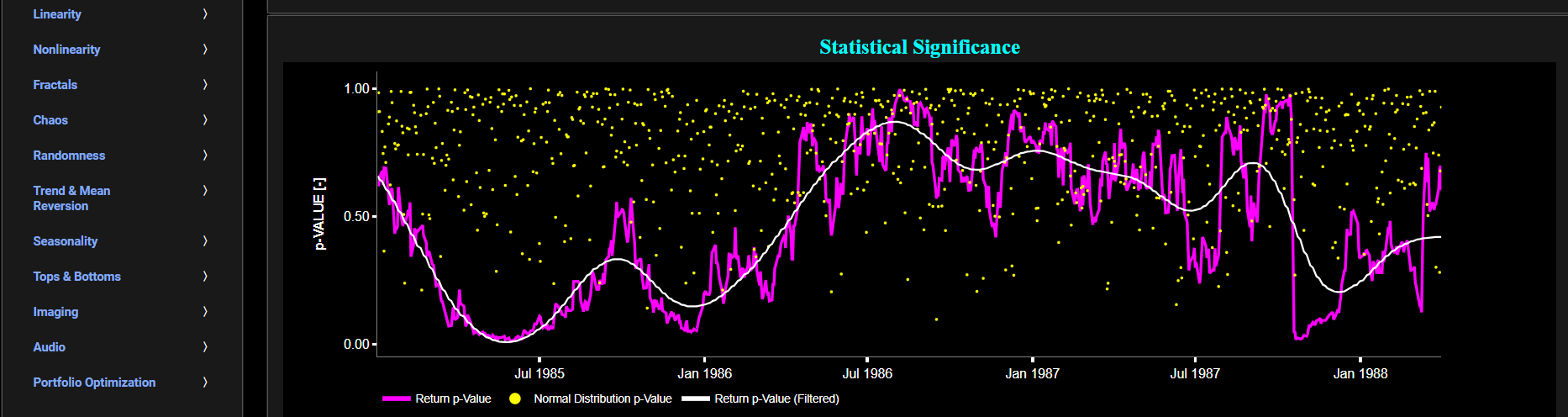

Normal Distribution Test (Kolmogorov-Smirnov)

This page provides 2 graphs. The upper graph visualizes historical asset prices in cyan color using either daily, weekly, or monthly close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the y-axis. Next to the cyan line, the upper graph also visualizes the test statistic for the one-sample Kolmogorov-Smirnov (KS) normal distribution test for the asset incremental returns (i.e. % change) in magenta, and for synthetically generated normally distributed independent and identically distributed (i.i.d.) returns in yellow. The yellow data points are given here for reference and comparison purposes (i.e. benchmarking). Next the white line represents a filtered version of the magenta line through the application of a low-pass Butterworth digital filter. The one-sample KS test compares the underlying distribution (the sample returns of the selected asset) against a given normal (i.e. Gaussian) distribution. The null hypothesis consists in having the sample returns distributed according to the standard normal distribution. The KS test statistic represents a measure of how well the sample data matches the theoretical normal distribution. A larger KS statistic indicates a greater discrepancy from normal distribution.

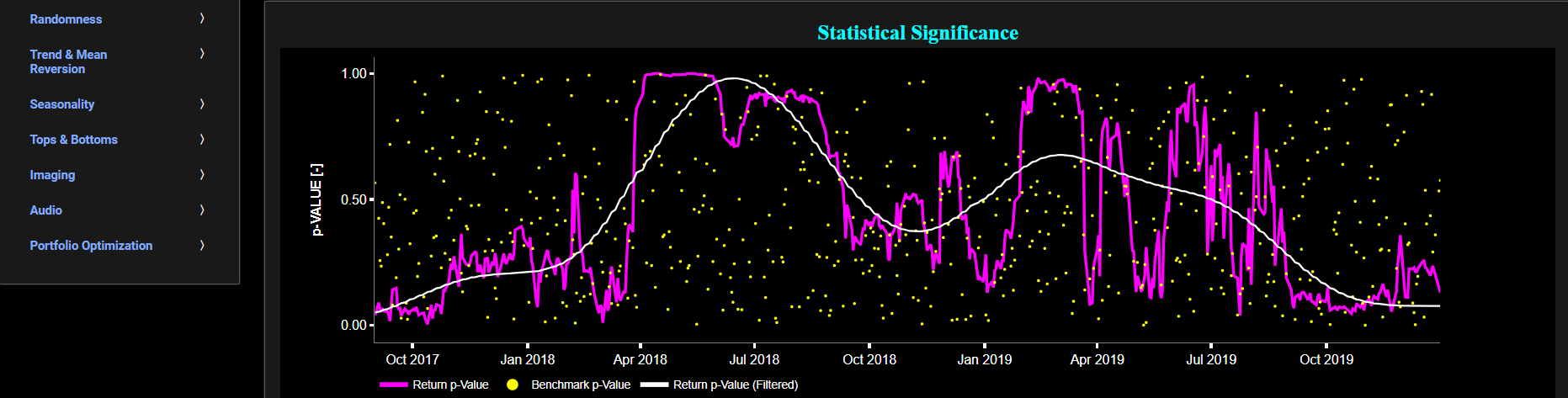

Next the lower graph visualizes the statistical significance (or so called p-value) for the KS test and for the benchmark signal. It is often typical to choose a confidence level of 95%; meaning that we will reject the null hypothesis in favor of the alternative (i.e. the data does not follow a standard normal distribution) if the test statistic p-value is less than 0.05. Finally in the menu bar (located just above the graphs), you can also select specific values for the lookback time period on which the KS test will be computed as well as set a maximum p-value visualization threshold pM, i.e. by only showing the data points for which we have p-value < pM.

Return

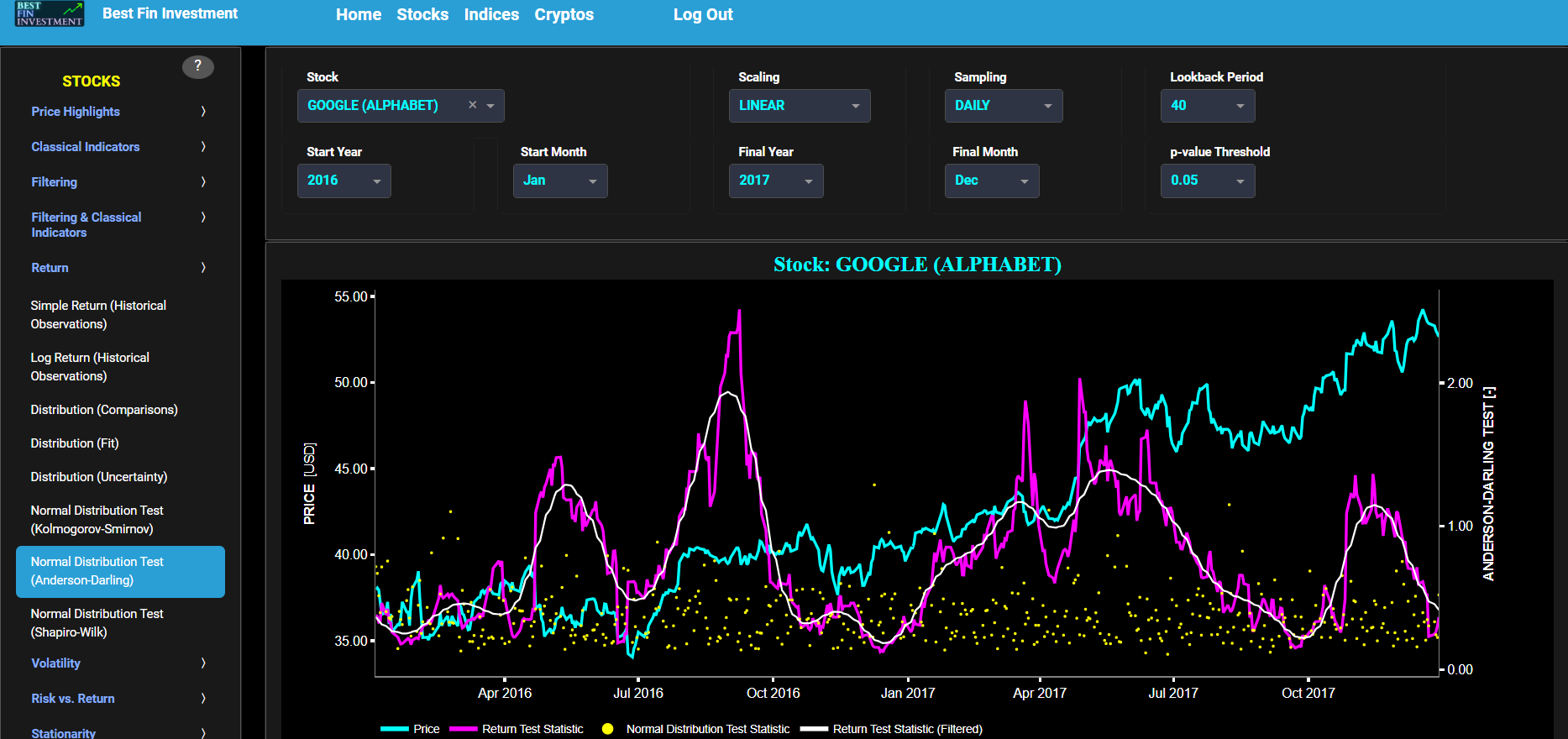

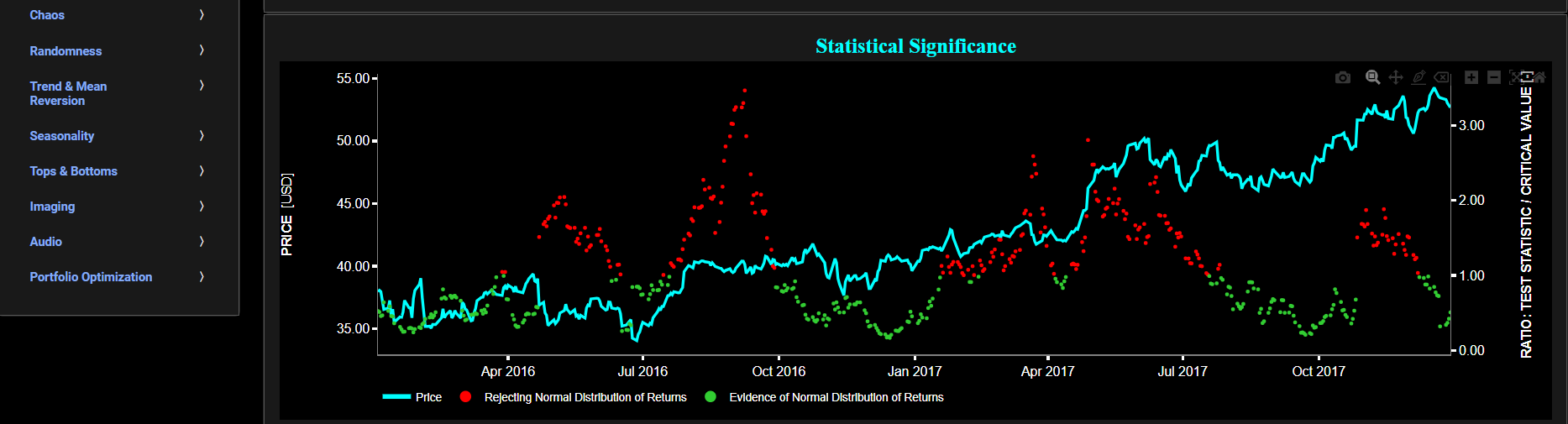

Normal Distribution Test (Anderson-Darling)

This page provides 2 graphs. The upper graph visualizes historical asset prices in cyan color using either daily, weekly, or monthly close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the y-axis. Next to the cyan line, the upper graph also visualizes the test statistic for the Anderson-Darling (AD) normal distribution test for the asset incremental returns (i.e. % change) in magenta, and for synthetically generated normally distributed independent and identically distributed (i.i.d.) returns in yellow. The yellow data points are given here for reference and comparison purposes (i.e. benchmarking). Next the white line represents a filtered version of the magenta line through the application of a low-pass Butterworth digital filter. The AD test compares the underlying distribution (the sample returns of the selected asset) against a given normal (i.e. Gaussian) distribution. The null hypothesis consists in having the sample returns distributed according to the standard normal distribution. The AD test statistic represents a measure of how well the sample data matches the theoretical normal distribution. A larger AD statistic indicates a greater discrepancy from normal distribution.

Next the lower graph visualizes the ratio of test statistic divided by test critical value at a selected p-value threshold. The p-value corresponds to the statistical significance of the AD test. In other words, if the returned statistic is larger than the critical value (i.e. ratio > 1) then for the corresponding chosen p-value significance level, the null hypothesis that the data comes from a normal distribution can be rejected. Note that it is often typical to choose a confidence level of 95% (i.e. p-value less than 0.05). Finally in the menu bar (located just above the graphs), you can also select specific values for the lookback time period on which the AD test will be computed as well as set a maximum p-value visualization threshold pM, i.e. by only showing the data points for which we have p-value < pM.

Return

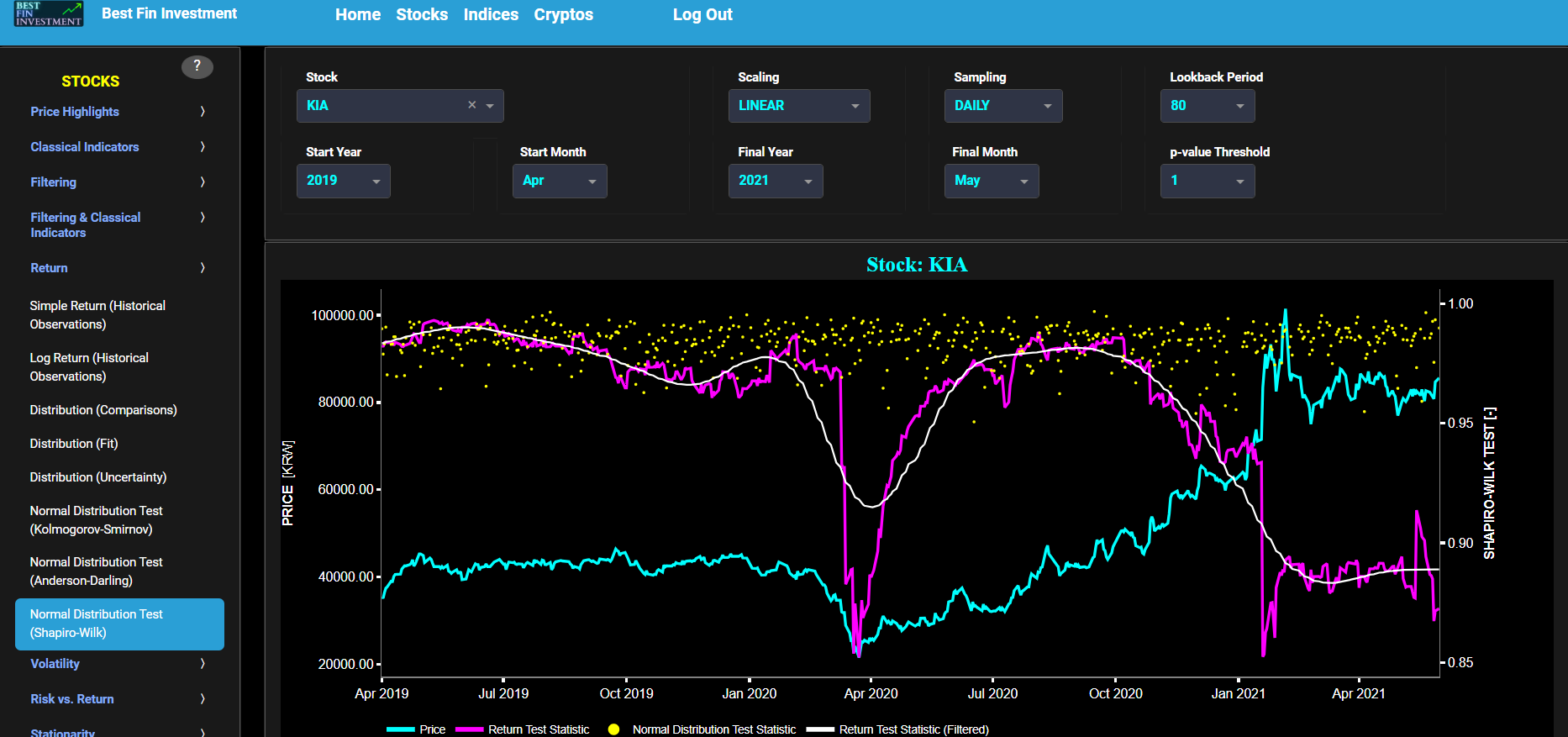

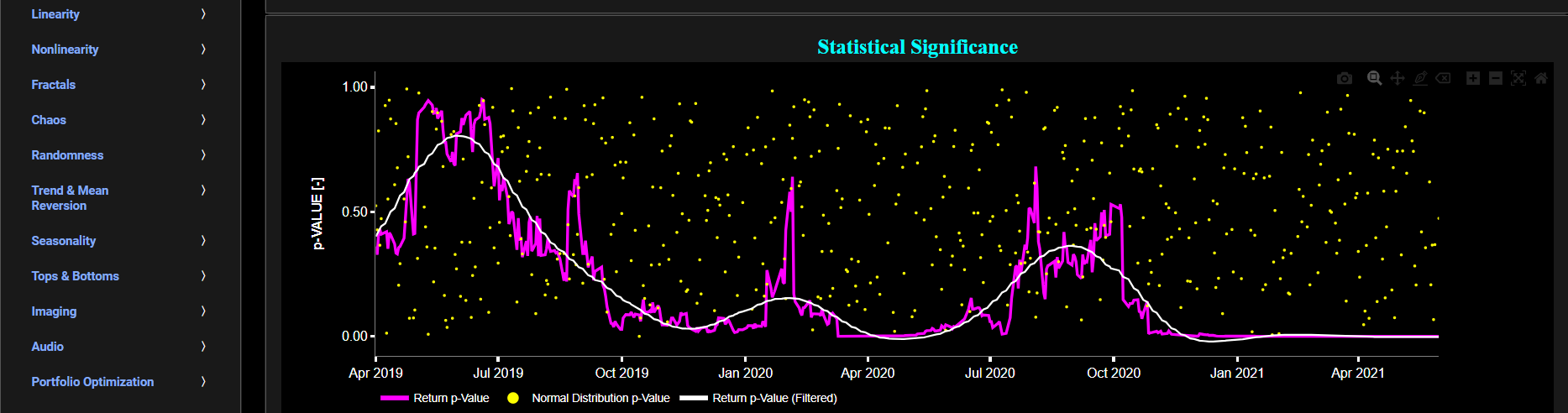

Normal Distribution Test (Shapiro-Wilk)

This page provides 2 graphs. The upper graph visualizes historical asset prices in cyan color using either daily, weekly, or monthly close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the y-axis. Next to the cyan line, the upper graph also visualizes the test statistic for the Shapiro-Wilk (SW) normal distribution test for the asset incremental returns (i.e. % change) in magenta, and for synthetically generated normally distributed independent and identically distributed (i.i.d.) returns in yellow. The yellow data points are given here for reference and comparison purposes (i.e. benchmarking). Next the white line represents a filtered version of the magenta line through the application of a low-pass Butterworth digital filter. The SW test compares the underlying distribution (the sample returns of the selected asset) against a given normal (i.e. Gaussian) distribution. The null hypothesis consists in having the sample returns distributed according to the standard normal distribution. The SW test statistic measures the degree of departure from a normal distribution. It is based on the covariances between the ordered data values and the expected values under the assumption of normality. The test statistic tends to be close to 1 for normally distributed data and smaller for data that deviate from normality.

Next the lower graph visualizes the statistical significance (or so called p-value) for the SW test and for the benchmark signal. It is often typical to choose a confidence level of 95%; meaning that we will reject the null hypothesis in favor of the alternative (i.e. the data does not follow a standard normal distribution) if the test statistic p-value is less than 0.05. Finally in the menu bar (located just above the graphs), you can also select specific values for the lookback time period on which the SW test will be computed as well as set a maximum p-value visualization threshold pM, i.e. by only showing the data points for which we have p-value < pM.

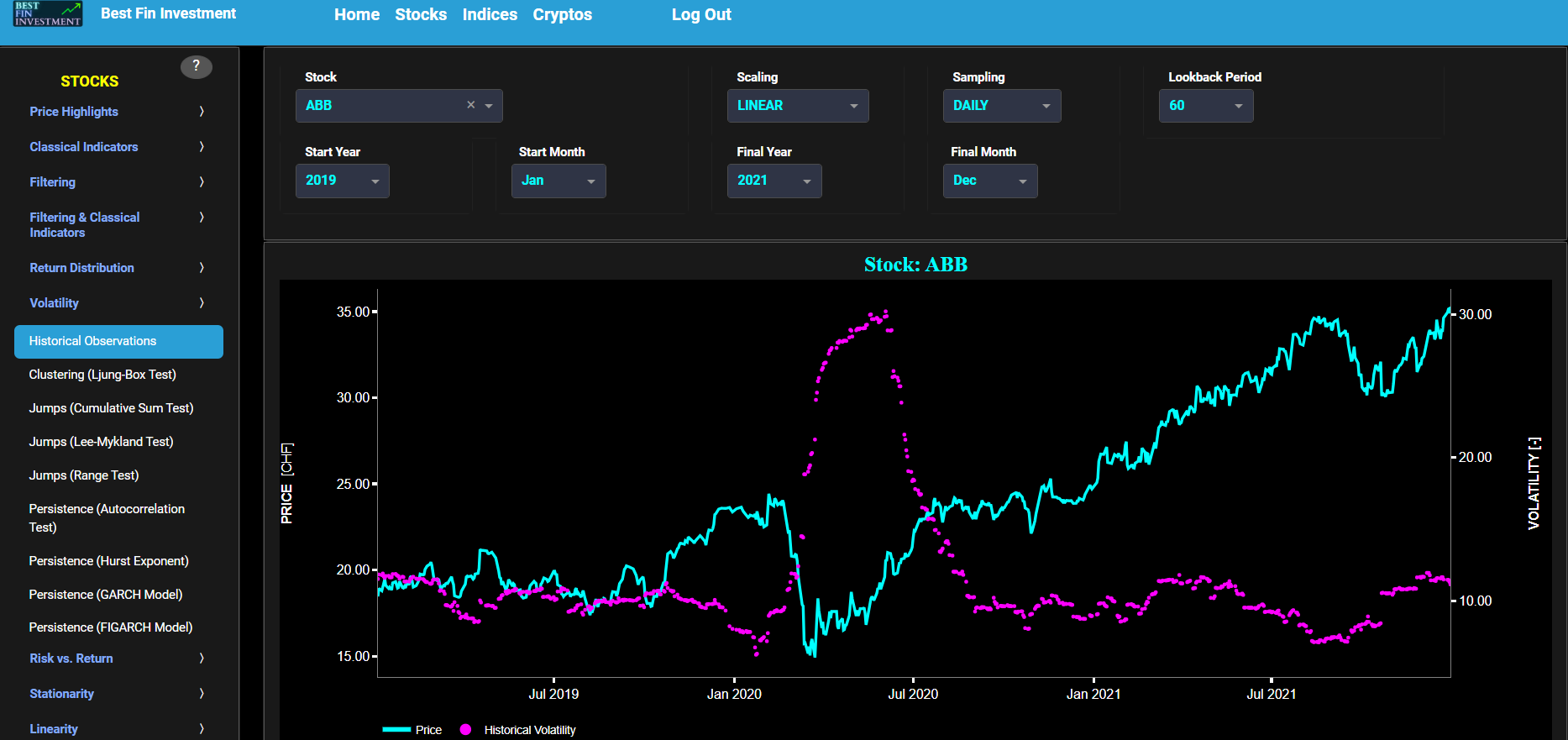

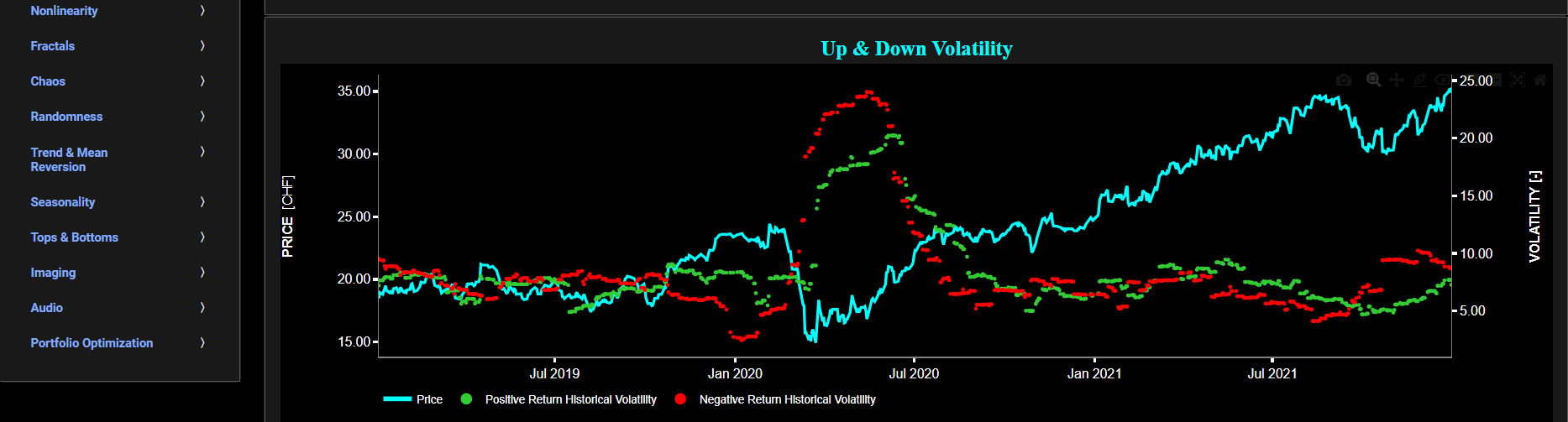

Volatility

Historical Observations

This page provides 2 graphs. The upper graph visualizes historical asset prices in cyan color using either daily, weekly, or monthly close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the left y-axis. Next to the cyan line, the upper graph also visualizes the historical asset volatility. This volatility is shown in magenta and expressed on the right y-axis. The volatility is computed using a lookback time period that is selected in the menu bar (located just above the graphs). The lower graph is somewhat similar to the upper graph except that it shows the historical volatility of positive price returns in green and historical volatility of negative price returns in red.

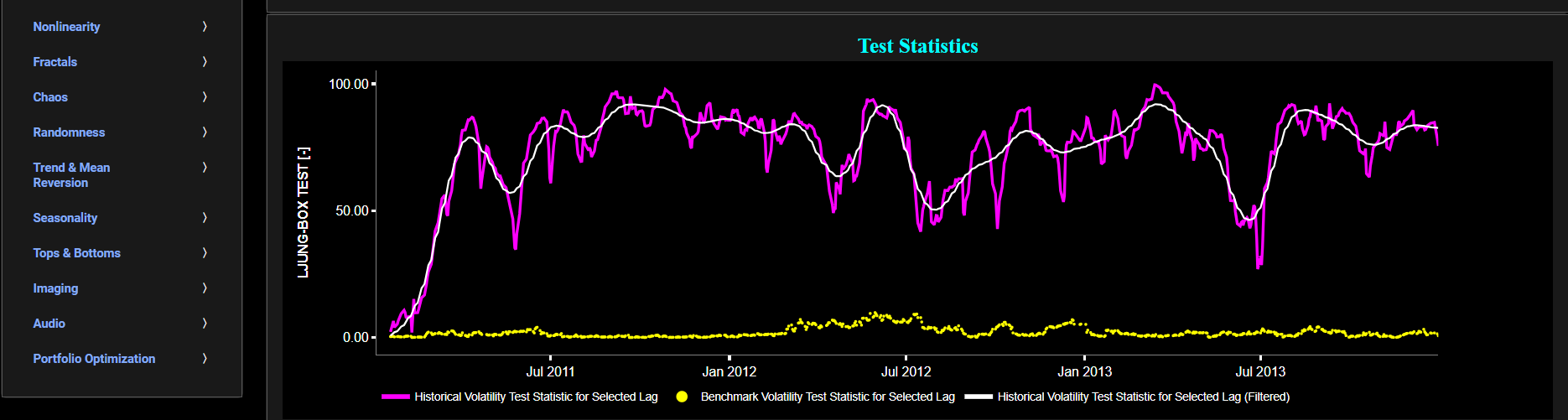

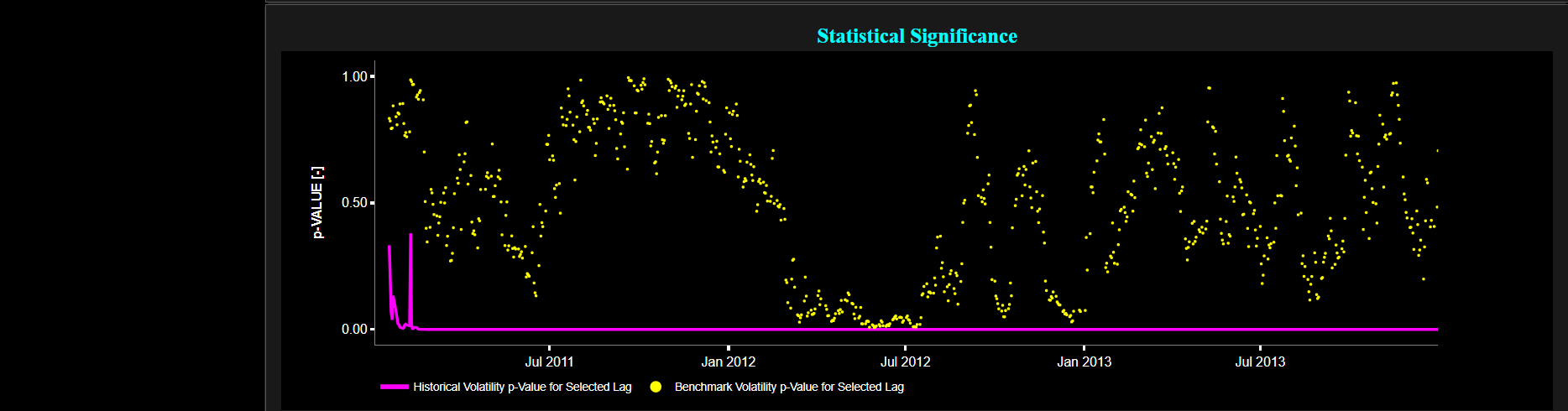

Volatility

Clustering (Ljung-Box Test)

This page provides 3 graphs. The upper graph visualizes historical asset prices in cyan color using either daily, weekly, or monthly close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the left y-axis. Next to the cyan line, the upper graph also visualizes the historical asset volatility. This volatility is shown in magenta and expressed on the right y-axis. The volatility is computed using a lookback time period that is selected in the menu bar (located just above the graphs).

The middle graph visualizes the statistic for the Ljung-Box autocorrelation (LB) test for the asset volatility in magenta, and for a benchmark time series in yellow. This benchmark signal is based upon synthetically generated, normally distributed, independent and identically distributed (i.i.d.) returns. For this benchmark signal, each step is independent of the previous steps, and there is no systematic trend or pattern in the data. The yellow data points are given here for reference and comparison purposes (hence benchmarking). Next the white line represents a filtered version of the magenta line through the application of a low-pass Butterworth digital filter. Now the LB test is a statistical test used to check for the presence of autocorrelation in a time series. In the context of financial time series, autocorrelation can be indicative of volatility clustering. The null hypothesis of the LB test assumes that there is no autocorrelation in the time series data. In other words, it assumes that the data points are independently and identically distributed (i.i.d.), which would imply no clustering of volatility. The alternative hypothesis suggests the presence of autocorrelation in the time series data, indicating volatility clustering. Now a high LB test value would suggest that there is autocorrelation in the time series data. This implies that there are periods of high volatility followed by periods of low volatility, indicating volatility clustering. Further, you can test this autocorrelation for specific time series lags, with the latter being selected in the menu bar (located just above the graphs).

Finally the lower graph visualizes the statistical significance of the LB autocorrelation test for the asset volatility in magenta, and for the benchmark signal in yellow. It is often typical to choose a confidence level of 95%; meaning that we will reject the null hypothesis in favor of the alternative (i.e. meaning that there is significant autocorrelation in the data, which implies the presence of volatility clustering) if the test statistic p-value is less than 0.05.

Volatility

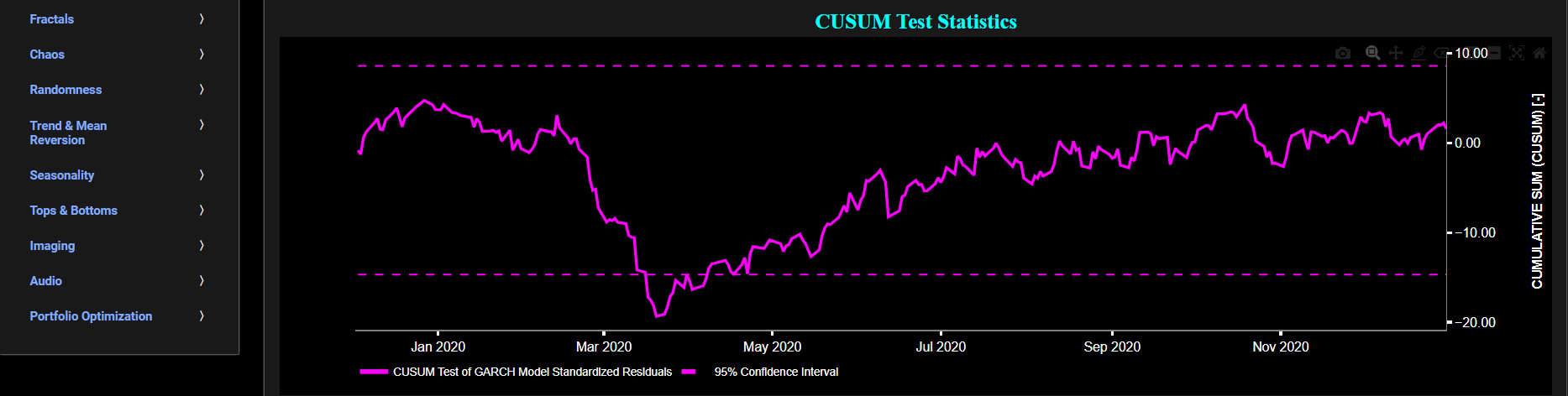

Jumps (Cumulative Sum Test)

This page provides 2 graphs. The upper graph visualizes historical asset prices in cyan color using either daily, weekly, or monthly close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the left y-axis. Next to the cyan line, the upper graph also visualizes the historical asset volatility. This volatility is shown in magenta and expressed on the right y-axis. The volatility is computed using a lookback time period that is selected in the menu bar (located just above the graphs). The lower graph visualizes the cumulative sum (CUSUM) test statistic which is designed to detect structural changes or shifts in a time series data. This test can help identify periods when the volatility of asset returns significantly deviates from their historical norm. Here we first fit a Generalized Autoregressive Conditional Heteroskedasticity (GARCH) model with (1,1,1) coefficients to the asset Log returns data and then apply the CUSUM test on the model’s standardized residuals. By doing so we are essentially looking for changes in the asset return characteristics which could be driven by changes in the asset volatility. Finally the dashed lines visualize the 95% confidence interval.

Volatility

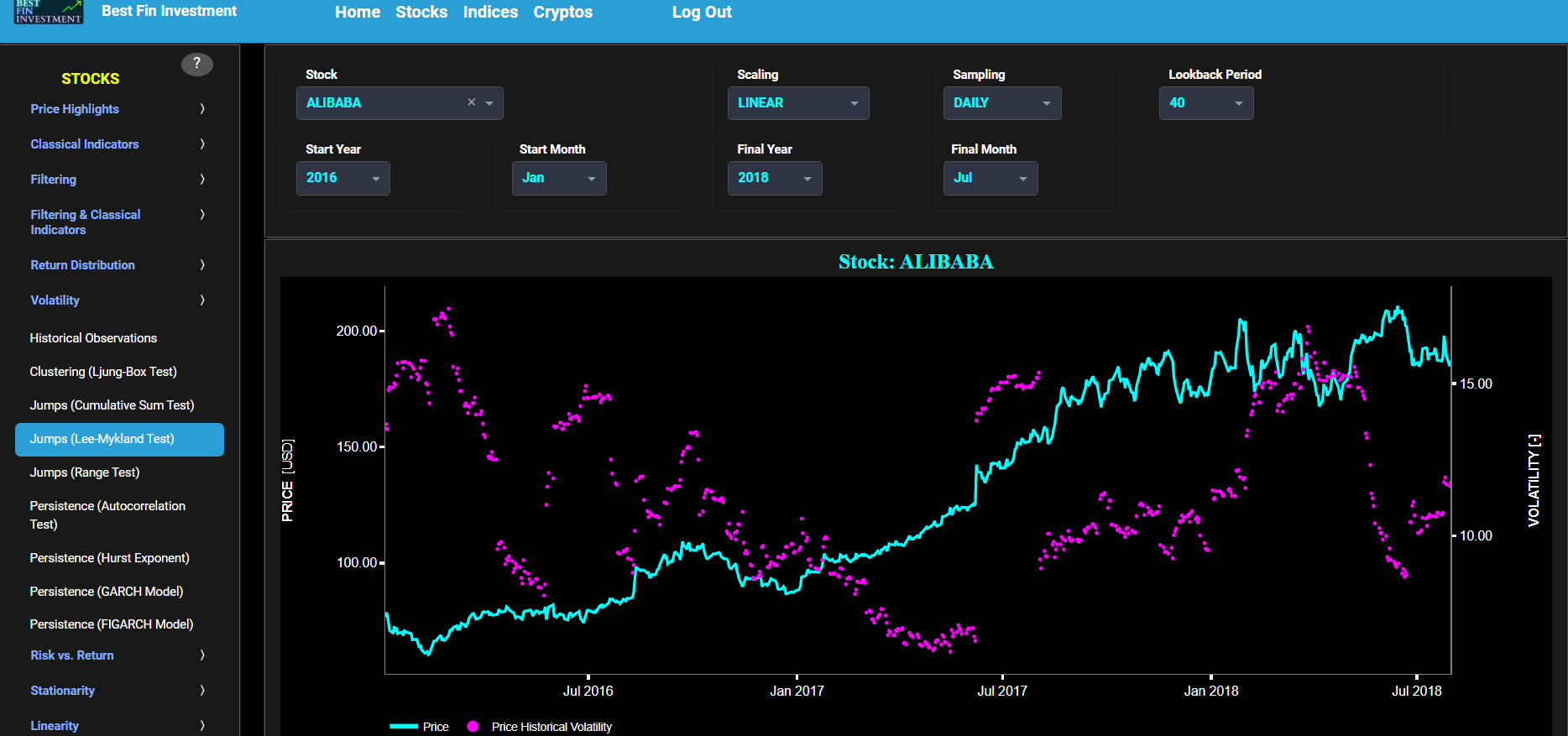

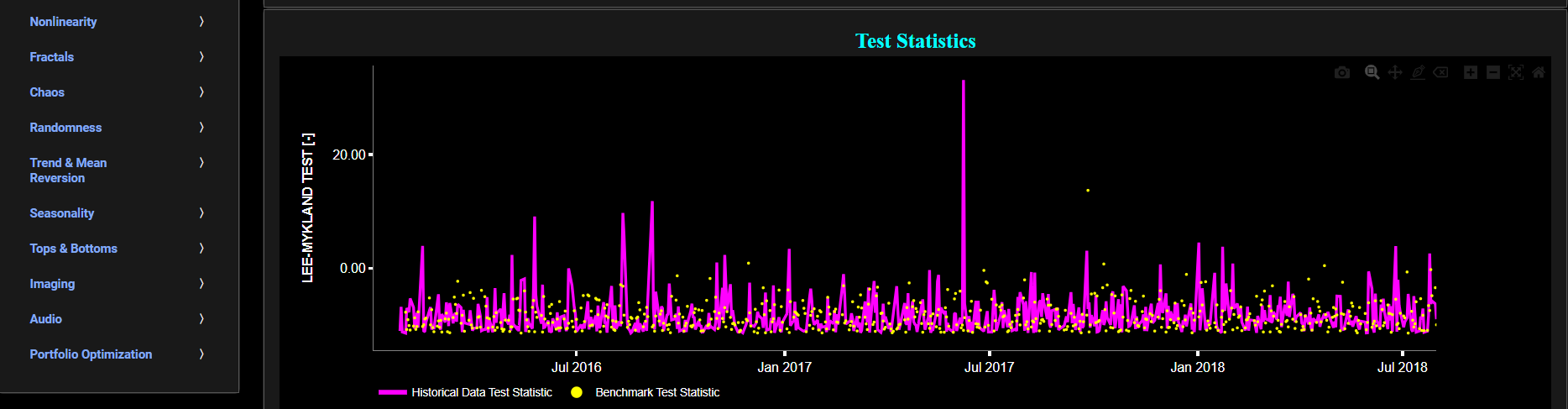

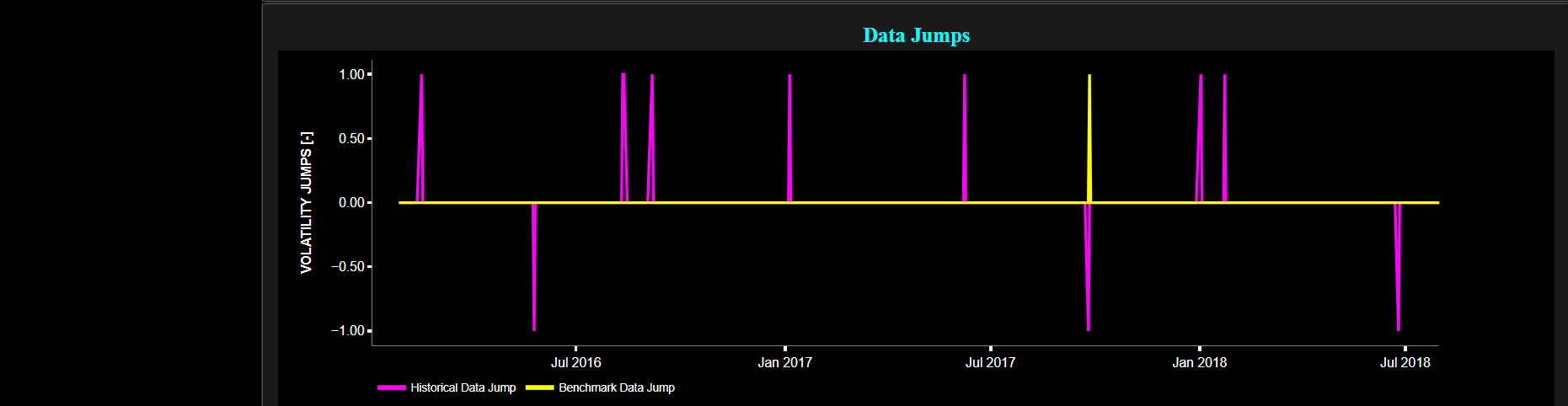

Jumps (Lee-Mykland Test)

This page provides 3 graphs. The upper graph visualizes historical asset prices in cyan color using either daily, weekly, or monthly close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the left y-axis. Next to the cyan line, the upper graph also visualizes the historical asset volatility. This volatility is shown in magenta and expressed on the right y-axis. The volatility is computed using a lookback time period that is selected in the menu bar (located just above the graphs).

The middle graph visualizes the so-called “T” statistic for the Lee-Mykland (LM) test for the asset returns in magenta, and for a benchmark time series in yellow. This benchmark signal is based upon synthetically generated, normally distributed, independent and identically distributed (i.i.d.) returns. For this benchmark signal, each step is independent of the previous steps, and there is no systematic trend or pattern in the data. The yellow data points are given here for reference and comparison purposes (hence benchmarking). Now the LM statistical test is used to detect volatility jumps, particularly large and abrupt ones which are often associated with significant events, and the “T” test statistic summarizes the overall evidence for jumps in the time series. Note that in our case we have applied this test to the asset returns rather than asset volatility, as the algorithm has an internal volatility estimation procedure based upon a so-called rolling window realized bipower variation. Further we have also used this test with a 5% significance level threshold.

Finally the lower graph visualizes the so-called “J” Jump statistic for both the asset and benchmark signals. Here the convention reads as follows: J = 1 suggests an upward volatility jump, J = -1 suggests a downward volatility jump, and J = 0 indicates no significant volatility jump.

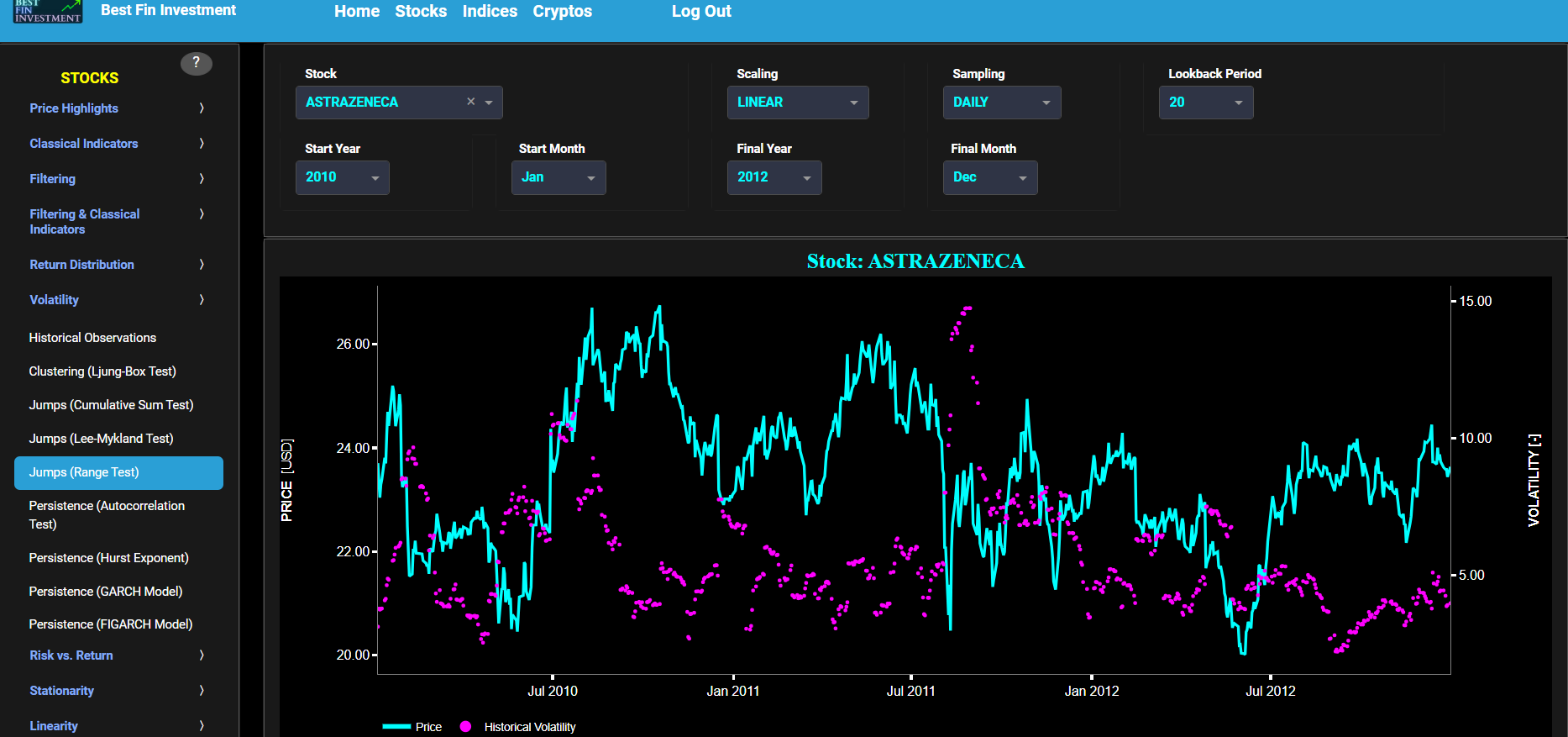

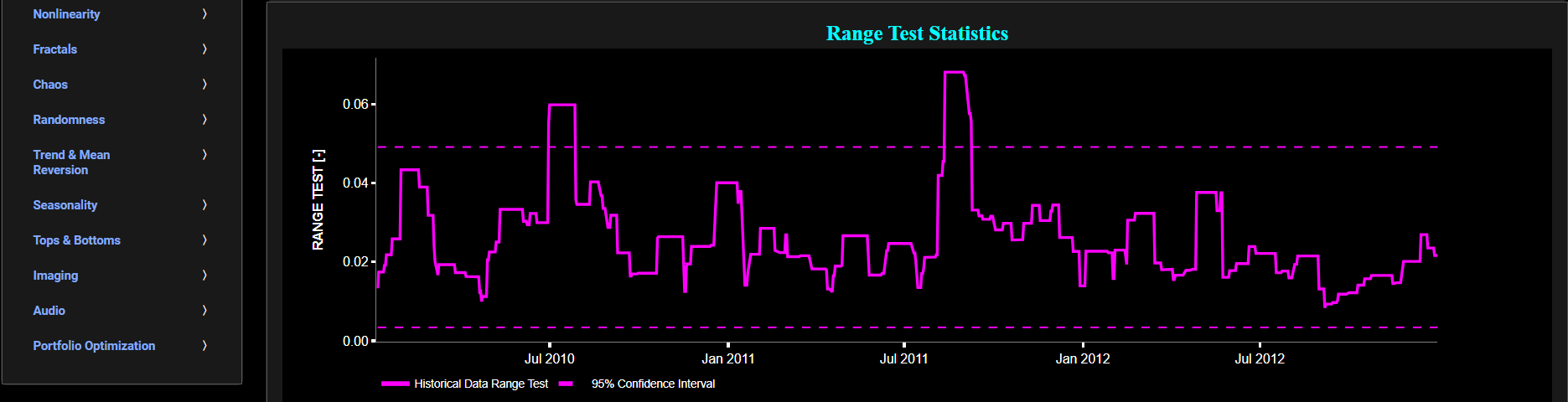

Volatility

Jumps (Range Test)

This page provides 2 graphs. The upper graph visualizes historical asset prices in cyan color using either daily, weekly, or monthly close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the left y-axis. Next to the cyan line, the upper graph also visualizes the historical asset volatility. This volatility is shown in magenta and expressed on the right y-axis. The volatility is computed using a lookback time period that is selected in the menu bar (located just above the graphs). The lower graph visualizes the Range test statistic which is designed to quantify the magnitude or size of the jumps or fluctuations in financial data. It provides a measure of how significant or pronounced the jumps in the data are, which can be useful for identifying and characterizing volatility changes. The Range test is shown for the asset returns in magenta. Finally the dashed lines visualize the 95% confidence interval.

Volatility

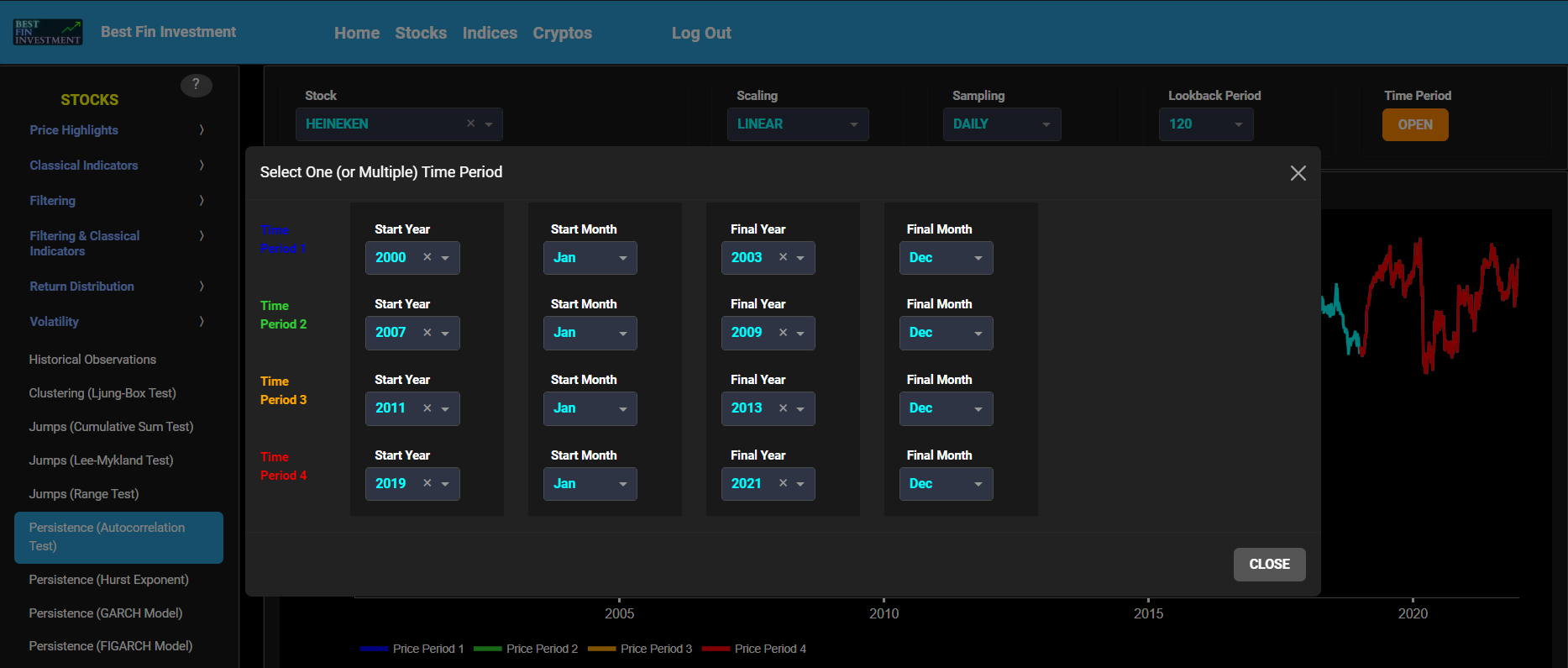

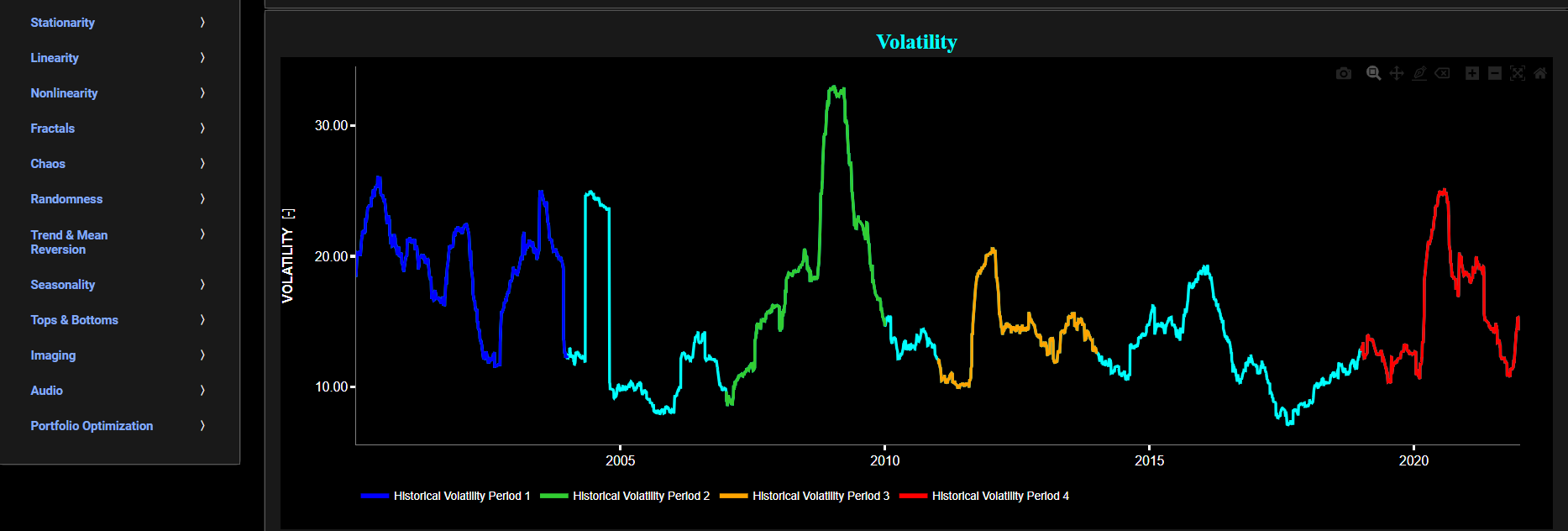

Persistence (Autocorrelation Test)

This page provides 3 graphs. The upper graph visualizes historical asset prices using either daily, weekly, or monthly close prices. In the menu bar (located just above the graphs), you can also toggle between a linear or logarithmic price scaling on the y-axis. Further you can select up to 4 specific time periods for further comparisons of price and volatility characteristics.

The middle graph visualizes the historical asset volatility. This volatility is shown for all selected time periods. The volatility is computed using a lookback time period that is selected in the menu bar (located just above the graphs).

Finally the lower graph visualizes volatility persistence, i.e. high volatility persistence suggests that periods of high or low volatility tend to persist over time. Here we compute the Partial Autocorrelation Function (PACF) of the squared asset returns using a 95% confidence interval. Squaring the returns is a common practice when studying volatility because it amplifies the impact of extreme values, making volatility patterns more apparent. Specifically the PACF values measure the relationship between the squared returns at different lags, while controlling for the influence of shorter lags. Significant PACF values at longer lags may indeed indicate the presence of volatility persistence in the data, i.e. large PACF values at longer lags provide evidence of past volatility's influence on current volatility.

Note that the Autocorrelation Function (ACF) measures the relationship between a data point and its lagged values, including all shorter lags, whereas the PACF measures the relationship between a data point and its lagged values while controlling for the influence of shorter lags. Therefore the PACF is better suited for identifying the direct influence of a specific lag on the current data point. The PACF is shown separately for each selected time period and for a benchmark time series in yellow. This benchmark signal is based upon synthetically generated, normally distributed, independent and identically distributed (i.i.d.) returns. For this benchmark signal, each step is independent of the previous steps, and there is no systematic trend or pattern in the data. The yellow data points are given here for reference and comparison purposes (hence benchmarking).

Volatility

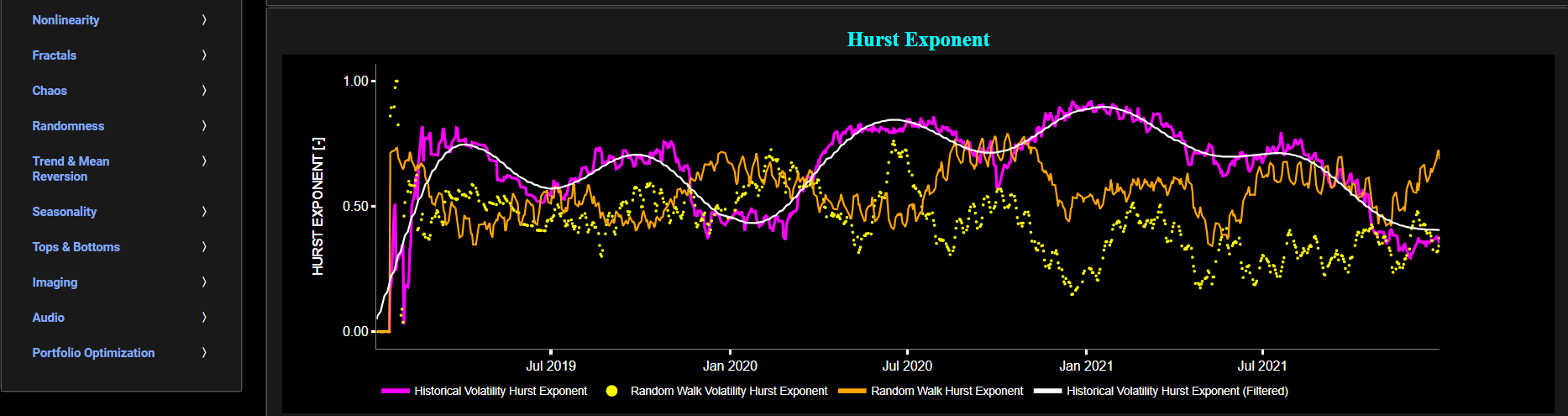

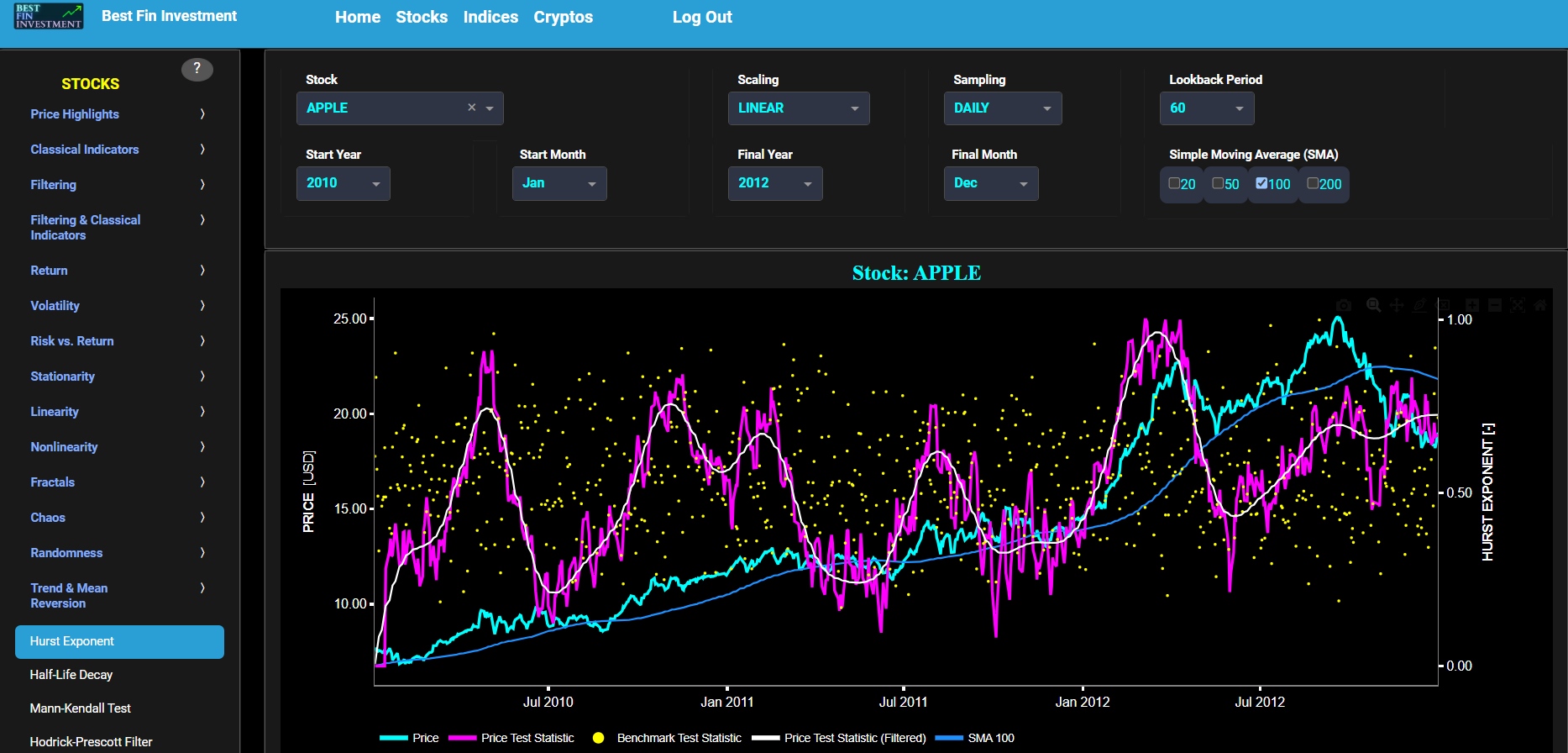

Persistence (Hurst Exponent)

This page provides 2 graphs. The upper graph visualizes historical asset prices in cyan color using either daily, weekly, or monthly close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the left y-axis. Next to the cyan line, the upper graph also visualizes the historical asset volatility. This volatility is shown in magenta and expressed on the right y-axis. The volatility is computed using a lookback time period that is selected in the menu bar (located just above the graphs).

The lower graph visualizes the Hurst Exponent (HE) statistical measure computed using the R/S statistic. The HE, named after the British hydrologist Harold Edwin Hurst (1880 – 1978), is used in various fields, including hydrology, to assess long-range dependence or persistence in time series data. In the context of hydrology, the HE is often used to analyze and understand the behavior of hydrological processes, particularly the flow of water in rivers, streams, and other water bodies. Essentially the HE helps to understand whether the flow at a particular time is influenced by past flow measurements over extended periods, i.e. the long-range dependence or memory of river flow. Similarly in financial time series analysis, the HE may be used to, among others, assess the persistence or long-term memory of asset volatility. It is particularly useful for understanding whether past volatility affects future volatility and for identifying patterns of persistence (such as persistence of trends) or mean-reverting behavior in financial markets. Here the HE is shown for the asset volatility in magenta, for a synthetically generated random walk in orange, and for the volatility of a random walk in yellow. In a random walk, each step is independent of the previous steps, and there is no systematic trend or pattern in the data. The random walk data points are given here for reference and comparison purposes (i.e. benchmarking). Next the white line represents a filtered version of the magenta line through the application of a low-pass Butterworth digital filter.

Finally the HE interpretation is given as follows: A HE value close to 0 suggests anti-persistence, where high volatility is likely to be followed by low volatility, and vice versa. A HE value close to 1 indicates persistence, where high volatility tends to be followed by high volatility, and low volatility by low volatility. A HE value around 0.5 suggests a random walk or no significant autocorrelation in volatility.

Volatility

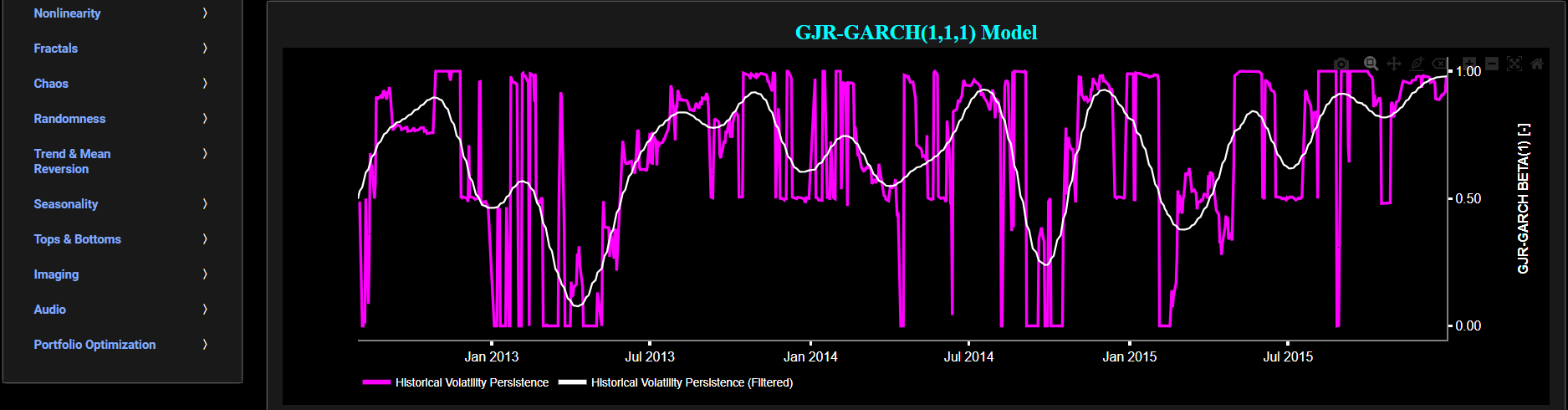

Persistence (GJR-GARCH Model)

This page provides 2 graphs. The upper graph visualizes historical asset prices in cyan color using either daily, weekly, or monthly close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the left y-axis. Next to the cyan line, the upper graph also visualizes the historical asset volatility. Here, volatility is defined as the annualized volatility of asset log returns. This volatility is shown in magenta and expressed on the right y-axis. The volatility is computed using a lookback time period that is selected in the menu bar (located just above the graphs).

The lower graph visualizes volatility persistence, i.e. the tendency of volatility to persist or autocorrelate over time. Here we use a Generalized Autoregressive Conditional Heteroskedasticity (GARCH) model which is commonly used in finance to model and forecast volatility. The basic GARCH model has been augmented with a so-called Generalized Jump Regression (GJR) term which allows to incorporate sudden jumps or shocks in volatility beyond what a standard GARCH model can capture. This means that the model can capture sudden changes in volatility due to unexpected events or shocks. Specifically the lower graph visualizes in magenta color the estimated model coefficient associated with the lagged conditional variance term (with lag 1). Next the white line represents a filtered version of the magenta line through the application of a low-pass Butterworth digital filter. Finally this graph may be interpreted as follows: if the shown coefficient values are close to 1, it suggests that past volatility has a strong influence on current volatility, indicating persistence. On the other hand if the shown coefficient values are significantly different from 1, it suggests that volatility may not exhibit strong persistence.

Volatility

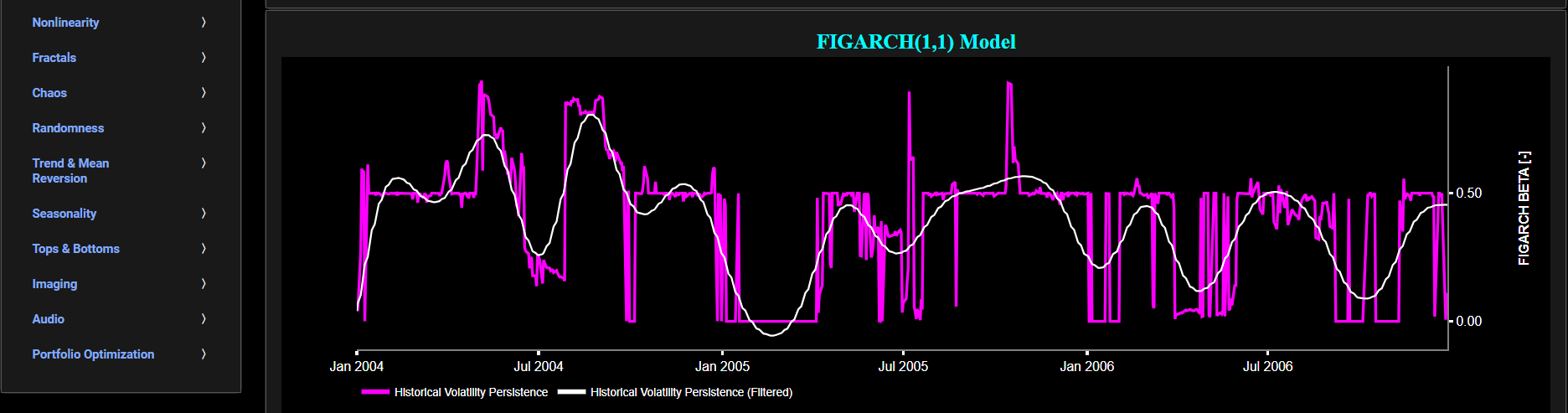

Persistence (FIGARCH Model)

This page provides 2 graphs. The upper graph visualizes historical asset prices in cyan color using either daily, weekly, or monthly close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the left y-axis. Next to the cyan line, the upper graph also visualizes the historical asset volatility. Here, volatility is defined as the annualized volatility of asset log returns. This volatility is shown in magenta and expressed on the right y-axis. The volatility is computed using a lookback time period that is selected in the menu bar (located just above the graphs).

The lower graph visualizes volatility persistence, i.e. the tendency of volatility to persist or autocorrelate over time. Here we use a Fractionally Integrated Generalized Autoregressive Conditional Heteroskedasticity (FIGARCH) model, whose primary focus is on capturing long memory or long-range dependence in volatility (typically associated with autocorrelation in the squared asset returns). The FIGARCH model achieves this by including a so-called fractional differencing parameter to account for the persistence in volatility. Specifically the lower graph visualizes in magenta color this estimated fractional differencing parameter. Essentially this parameter controls how quickly past shocks in volatility decay over time. A higher parameter value leads to slower decay and higher persistence, while a lower parameter value leads to faster decay and lower persistence. Next the white line represents a filtered version of the magenta line through the application of a low-pass Butterworth digital filter. Finally this graph may be interpreted as follows. A value close to 1 suggests high persistence in volatility. It indicates that past volatility strongly influences current volatility, and there is a significant autocorrelation in the squared asset returns. In this case, volatility tends to persist over time. On the other hand a value close to 0 indicates low persistence or near-random walk behavior in volatility. It suggests that past volatility does not have a strong influence on current volatility, and there is little autocorrelation in the squared asset returns. Finally an intermediate value suggests moderate persistence. It implies that past volatility has a moderate influence on current volatility, and there is some autocorrelation in the squared asset returns.

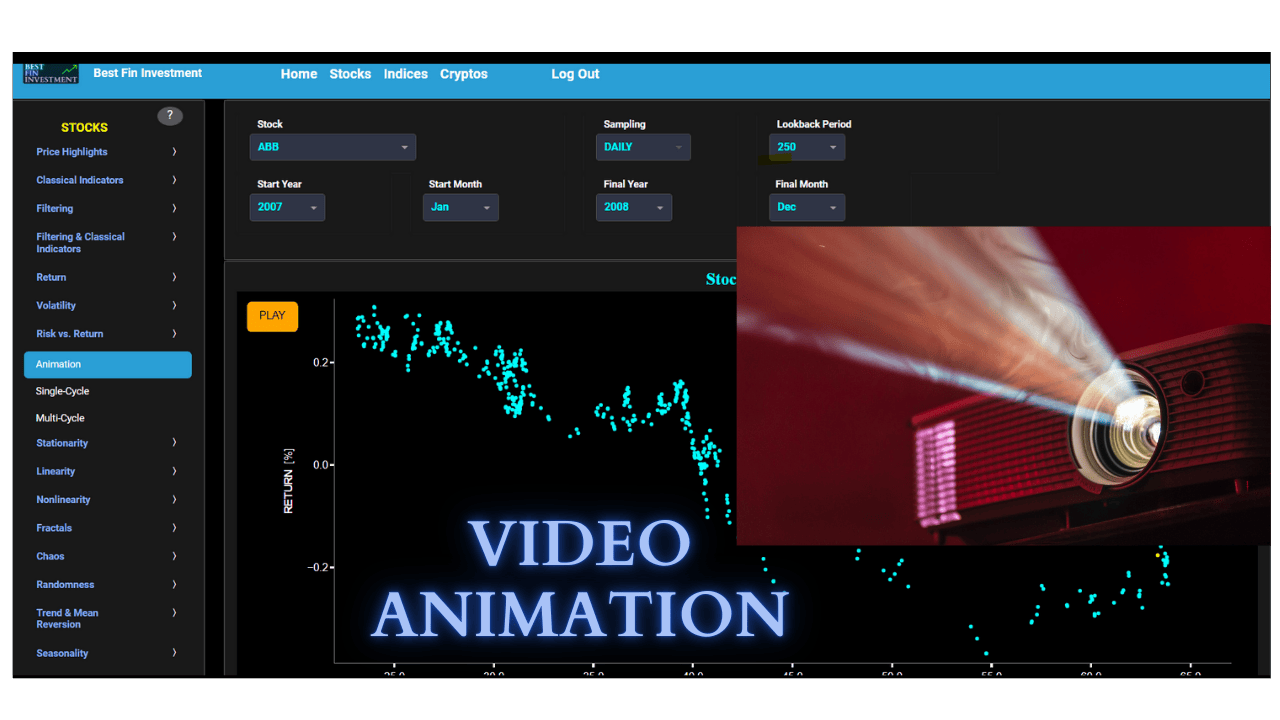

Risk vs. Return

Animation

This page visualizes asset “Risk” versus “Return”, through a video animation, using either daily, weekly, or monthly close prices. In the menu bar (located just above the graph), you can also select specific time periods to visualize. Here “Risk” is defined as the annualized sample volatility of the financial asset's returns based upon the lookback time period. It is a measure of how much the returns vary over time, scaled to an annualized basis. The lookback time period can also be selected from the menu bar (located just above the graph). Next “Return” is here defined as the percentage change in price from one time period to the next and subsequently averaged over the lookback time period. Now clicking on the orange “PLAY” button will start the video animation. The main purpose of this animation is to get a sense of market cycles and their associated clockwise or counter-clockwise rotations.

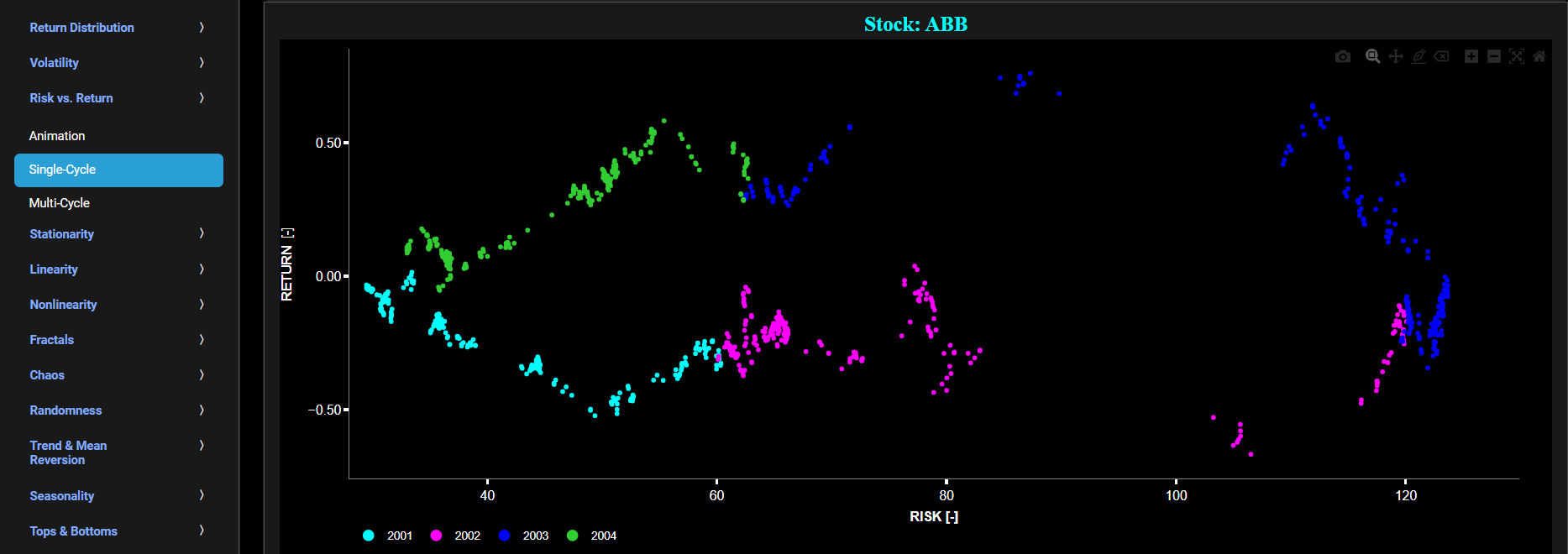

Risk vs. Return

Single-Cycle

This page visualizes asset “Risk” versus “Return”, in a single graph, using either daily, weekly, or monthly close prices. In the menu bar (located just above the graph), you can also select specific time periods to visualize. Here “Risk” is defined as the annualized sample volatility of the financial asset's returns based upon the lookback time period. It is a measure of how much the returns vary over time, scaled to an annualized basis. The lookback time period can also be selected from the menu bar (located just above the graph). Next “Return” is here defined as the percentage change in price from one time period to the next and subsequently averaged over the lookback time period. On this graph each year will be shown with a different color coding, hence helping you identify market cycles and their associated clockwise or counter-clockwise rotations.

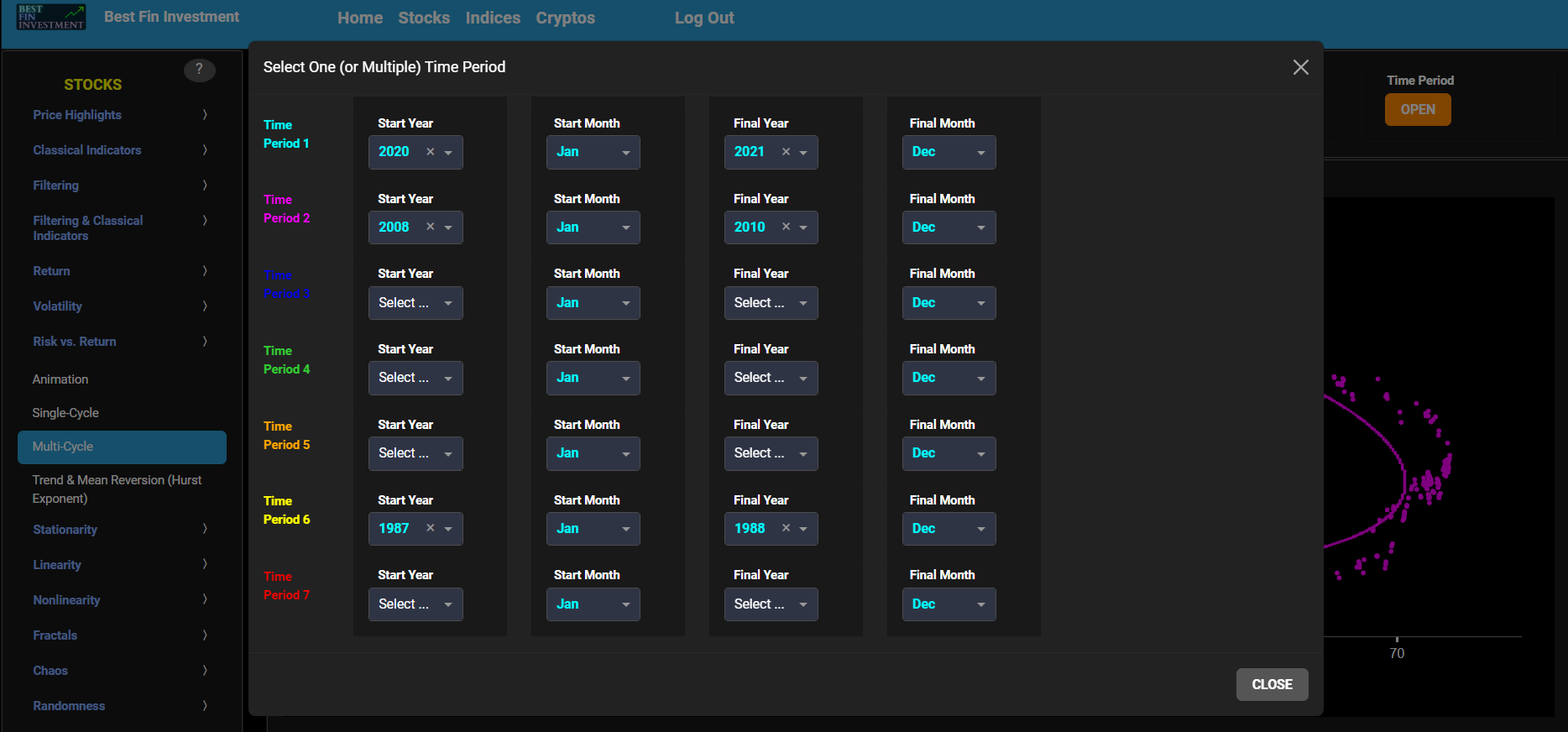

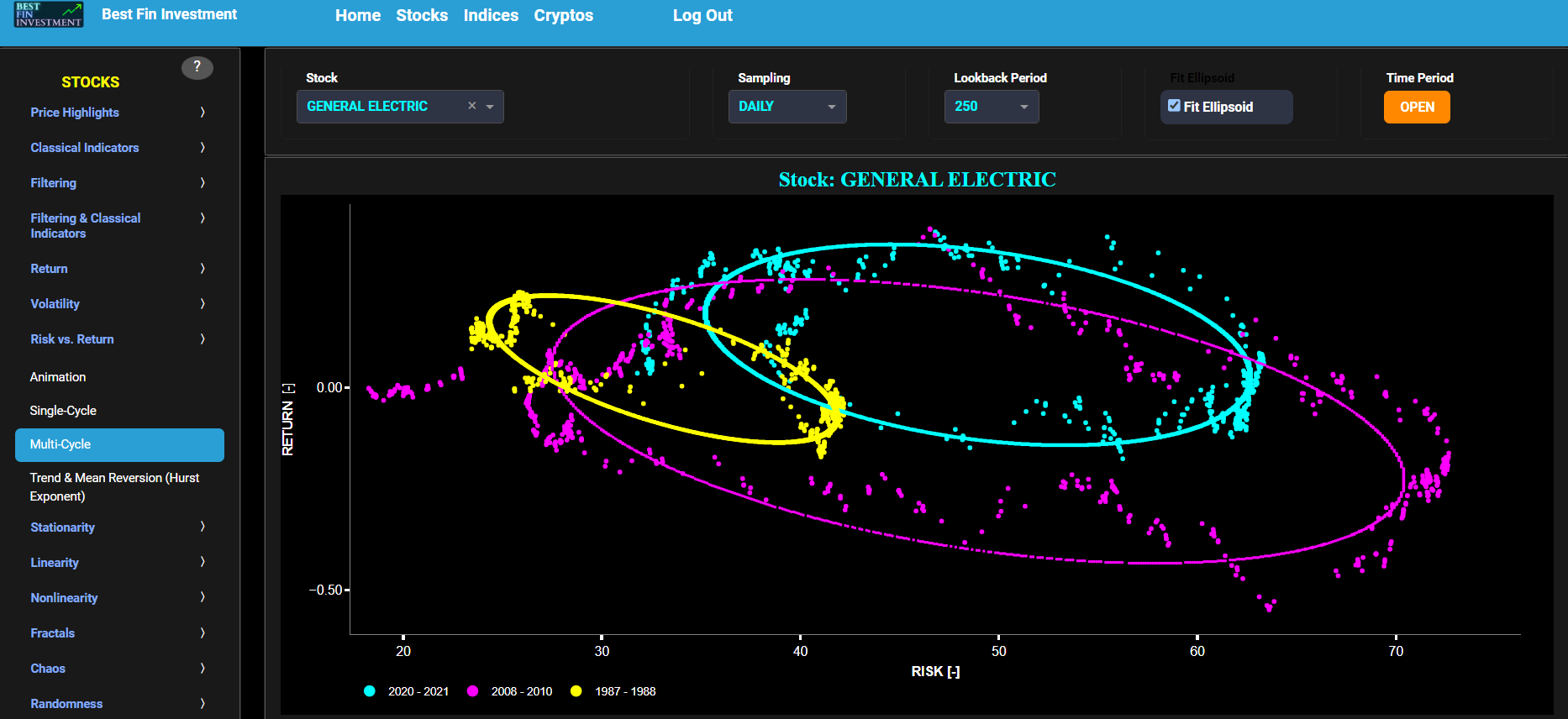

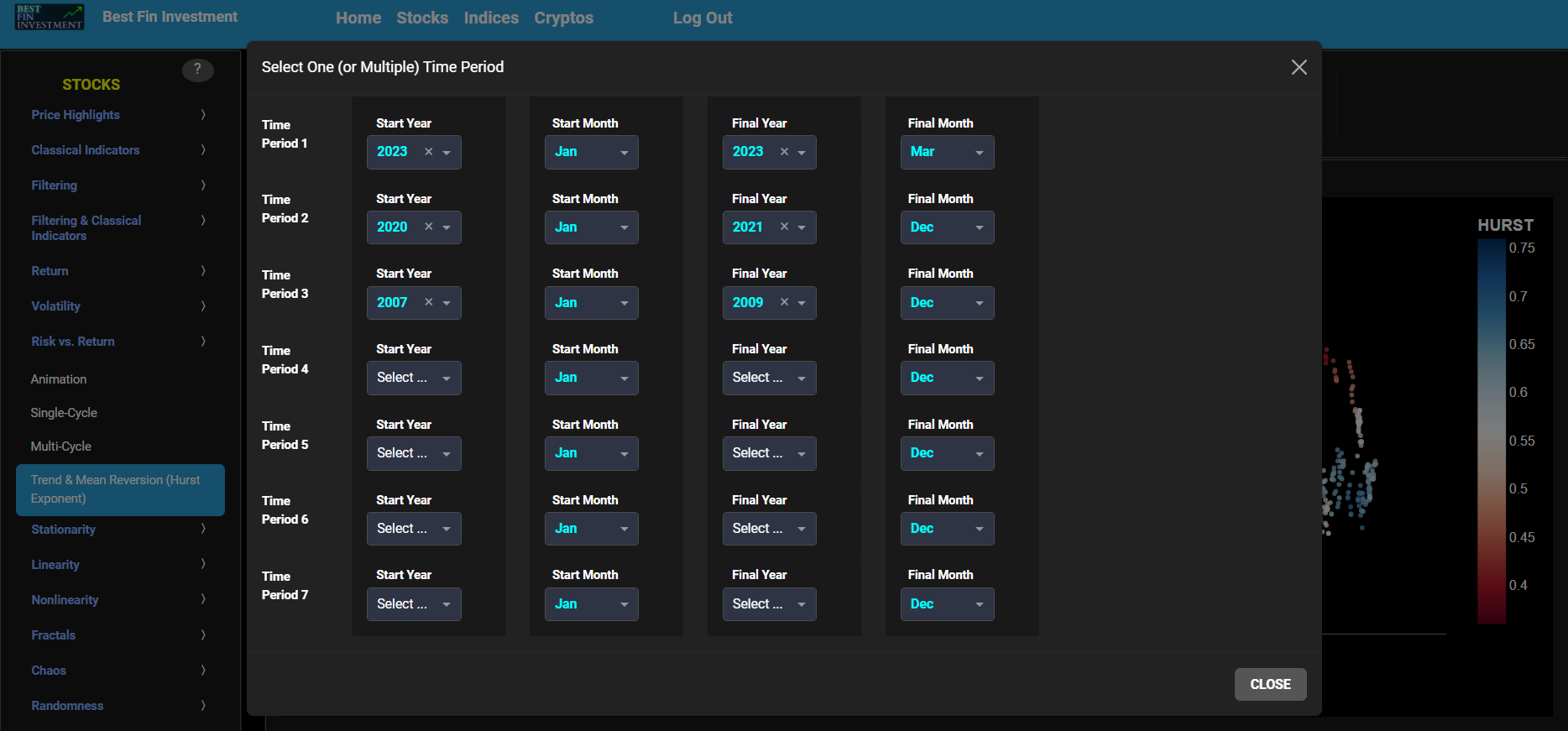

Risk vs. Return

Multi-Cycle

This page visualizes asset “Risk” versus “Return”, in a single graph, using either daily, weekly, or monthly close prices. In the menu bar (located just above the graph), you can also select up to 4 specific time periods for comparisons (each having its own color). Here “Risk” is defined as the annualized sample volatility of the financial asset's returns based upon the lookback time period. It is a measure of how much the returns vary over time, scaled to an annualized basis. The lookback time period can also be selected from the menu bar (located just above the graph). Next “Return” is here defined as the percentage change in price from one time period to the next and subsequently averaged over the lookback time period. On this graph each time period will be shown with a different color coding, hence helping you compare the various market cycles. Finally you can also add an ellipsoid fit to all shown market cycles, using a toggle button available on the menu bar.

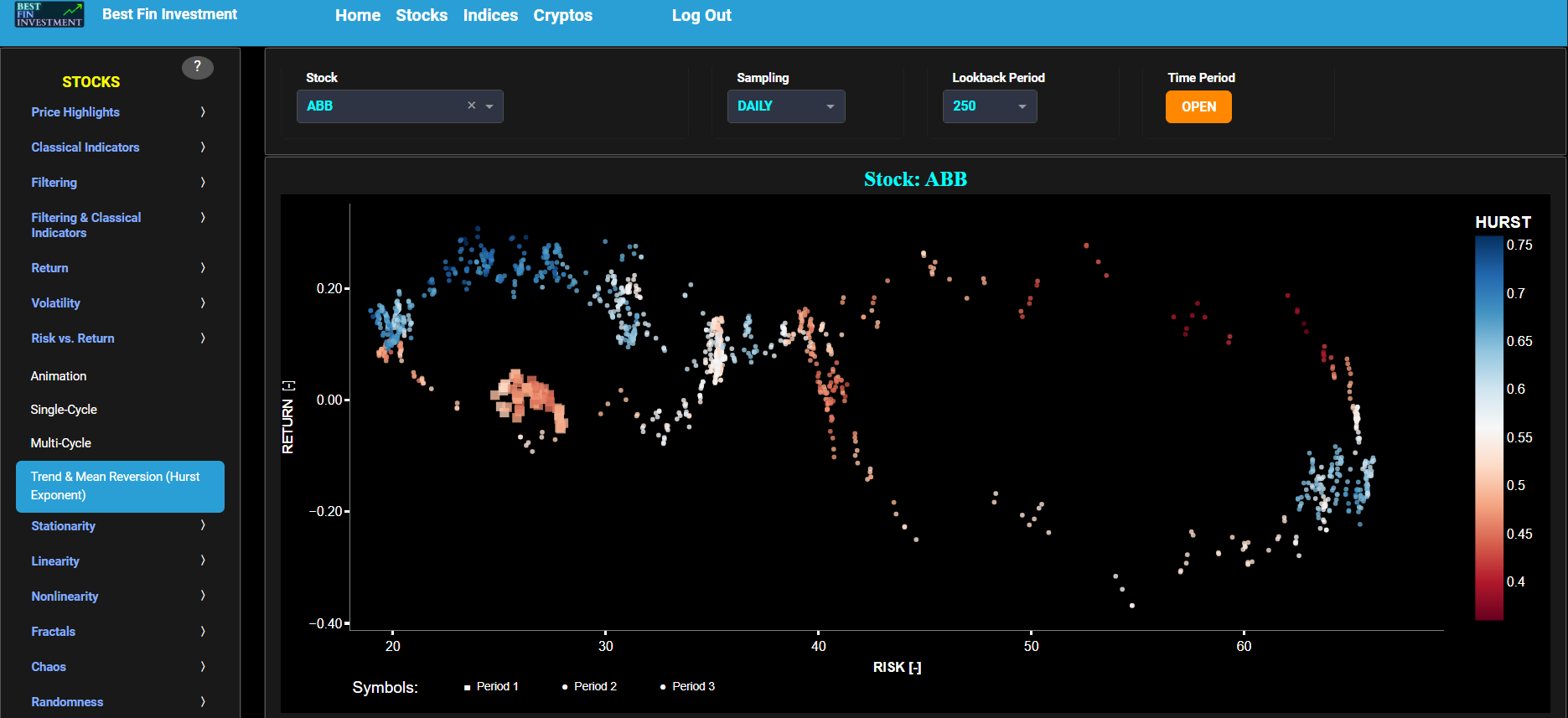

Risk vs. Return

Trend & Mean Reversion (Hurst Exponent)

This page visualizes asset “Risk” versus “Return” with the Hurst Exponent (HE) as color coding using either daily, weekly, or monthly close prices. In the menu bar (located just above the graph), you can also select up to 2 specific time periods for comparisons. Here “Risk” is defined as the annualized sample volatility of the financial asset's returns based upon the lookback time period. It is a measure of how much the returns vary over time, scaled to an annualized basis. The lookback time period can also be selected from the menu bar (located just above the graph). Next “Return” is defined as the percentage change in price from one time period to the next and subsequently averaged over the lookback time period.

In our case the HE statistical measure is computed using the R/S statistic. The HE, named after the British hydrologist Harold Edwin Hurst (1880 – 1978), is used in various fields, including hydrology, to assess long-range dependence or persistence in time series data. In the context of hydrology, the HE is often used to analyze and understand the behavior of hydrological processes, particularly the flow of water in rivers, streams, and other water bodies. Essentially the HE helps to understand whether the flow at a particular time is influenced by past flow measurements over extended periods, i.e. the long-range dependence or memory of river flow. Similarly in financial time series analysis, the HE may be used to analyze the intrinsic behavior of the asset's price movements. When applied to asset prices, the HE helps analyze whether the asset's prices exhibit a trending (persistent) or ean-reverting (anti-persistent) behavior. Here the HE is computed using the lookback time period that is selected in the menu bar (located just above the graph).

In terms of HE interpretation we have the following: A HE < 0.5 indicates a mean-reverting or anti-persistent behavior. In the context of asset prices, it suggests that the asset tends to revert to its mean price over time. This could imply opportunities for contrarian strategies. A HE > 0.5 suggests a trending or persistent behavior, indicating that the asset exhibits long-term trends and momentum. A HE value around 0.5 suggests a random walk.

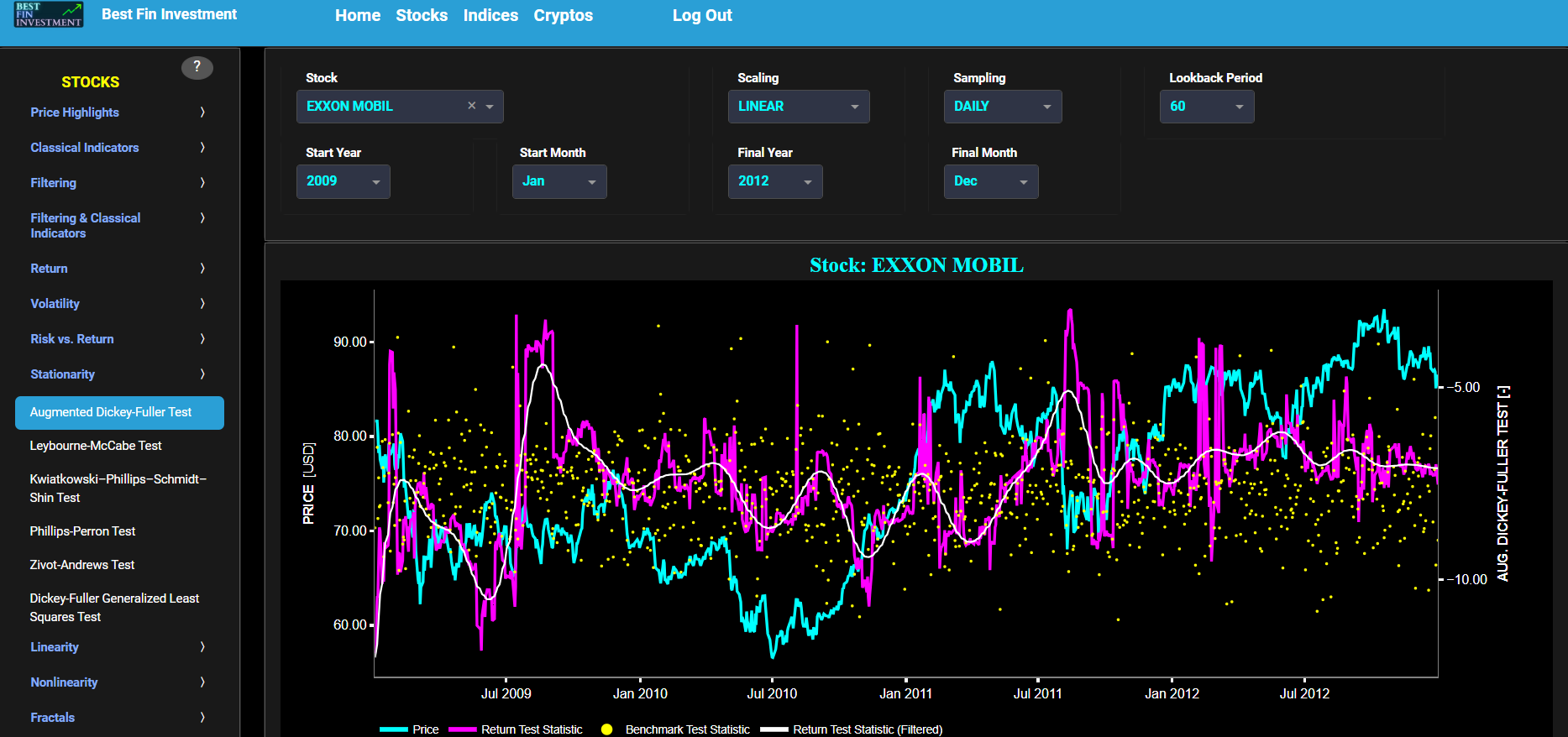

Stationarity

Augmented Dickey-Fuller Test

This page provides 2 graphs. The upper graph visualizes historical asset prices in cyan color using either daily, weekly, or monthly close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the y-axis. Next to the cyan line, the upper graph also visualizes the test statistic for the Augmented Dickey-Fuller (ADF) test for the asset incremental returns in magenta, and for a benchmark time series in yellow. This benchmark signal is based upon synthetically generated, normally distributed, independent and identically distributed (i.i.d.) returns. For this benchmark signal, each step is independent of the previous steps, and there is no systematic trend or pattern in the data. The yellow data points are given here for reference and comparison purposes (hence benchmarking). Next the white line represents a filtered version of the magenta line through the application of a low-pass Butterworth digital filter.

In our case the ADF test is applied using a constant (intercept) and a trend component when testing for stationarity of the asset returns. When a time series is non-stationary (i.e. it has a so-called unit root) it means that it exhibits a stochastic trend or a non-constant mean over time. In other words, the statistical properties of the time series, such as the mean and variance, are not constant over time. Here the null hypothesis of the ADF test is that the time series has a unit root, which means it is non-stationary. The alternative hypothesis is that the time series is stationary, i.e. it does not have a unit root. Now if the test statistic is significantly different from 0, it suggests evidence against the null hypothesis. In other words, it indicates that the time series is likely stationary, as it does not exhibit a unit root. Conversely if the test statistic is close to 0, it means that there is not enough evidence to reject the null hypothesis. In this case, the time series is more likely to be non-stationary, indicating the presence of a unit root.

Next the lower graph visualizes the statistical significance (or so called p-value) for the ADF test and for the benchmark signal. If the p-value is less than a significance level (typically 0.05), we may reject the null hypothesis and conclude that the data is stationary. Otherwise, if the p-value is greater than the significance level, we may fail to reject the null hypothesis, hence indicating non-stationarity. Finally in the menu bar (located just above the graphs), you can also select specific values for the lookback time period on which the ADF test will be computed.

Stationarity

Leybourne-McCabe Test

This page provides 2 graphs. The upper graph visualizes historical asset prices in cyan color using either daily, weekly, or monthly close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the y-axis. Next to the cyan line, the upper graph also visualizes the test statistic for the Leybourne-McCabe (LM) test for the asset cumulative returns in magenta, and for a synthetically generated random walk in yellow. In a random walk, each step is independent of the previous steps, and there is no systematic trend or pattern in the data. The yellow random walk data points are given here for reference and comparison purposes (i.e. benchmarking). Next the white line represents a filtered version of the magenta line through the application of a low-pass Butterworth digital filter.

In our case the LM test is applied using a constant (intercept) and a trend component when testing for stationarity of the asset returns. When a time series is non-stationary (i.e. it has a so-called unit root) it means that it exhibits a stochastic trend or a non-constant mean over time. In other words, the statistical properties of the time series, such as the mean and variance, are not constant over time. Here the null hypothesis of the LM test is that the time series is stationary. The alternative hypothesis is that the time series is non-stationary, i.e. it does have a unit root. Now if the test statistic is significantly different from 0, it suggests evidence against the null hypothesis. In other words, it indicates that the time series is likely non-stationary, as it does exhibit a unit root. Conversely if the test statistic is close to 0, it means that there is not enough evidence to reject the null hypothesis. In this case, the time series is more likely to be stationary.

Next the lower graph visualizes the statistical significance (or so called p-value) for the LM test and for the benchmark signal. If the p-value is less than a significance level (typically 0.05), we may reject the null hypothesis and conclude that the data is non-stationary. Otherwise, if the p-value is greater than the significance level, we may fail to reject the null hypothesis, hence indicating stationarity. Finally in the menu bar (located just above the graphs), you can also select specific values for the lookback time period on which the LM test will be computed.

Stationarity

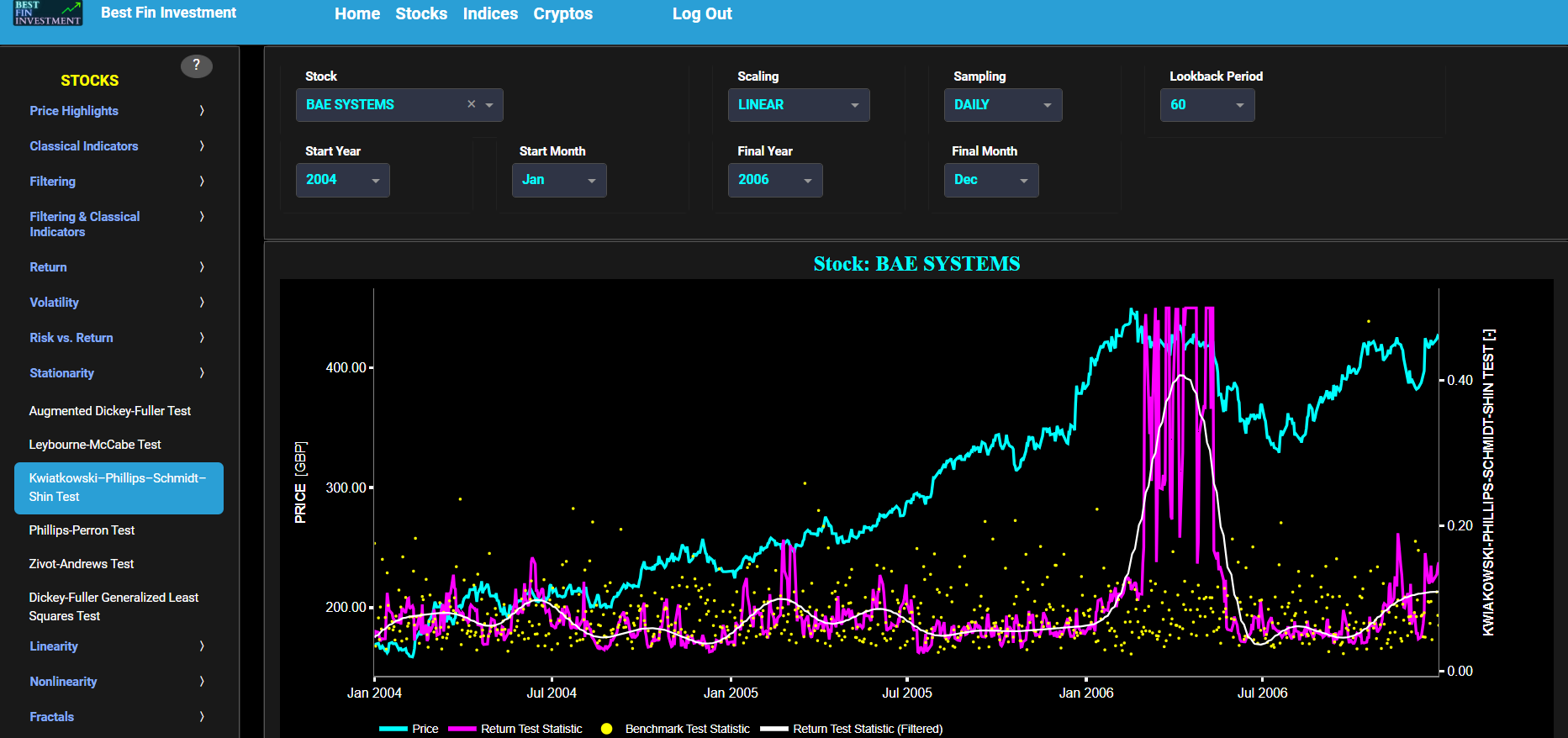

Kwiatkowski–Phillips–Schmidt–Shin Test

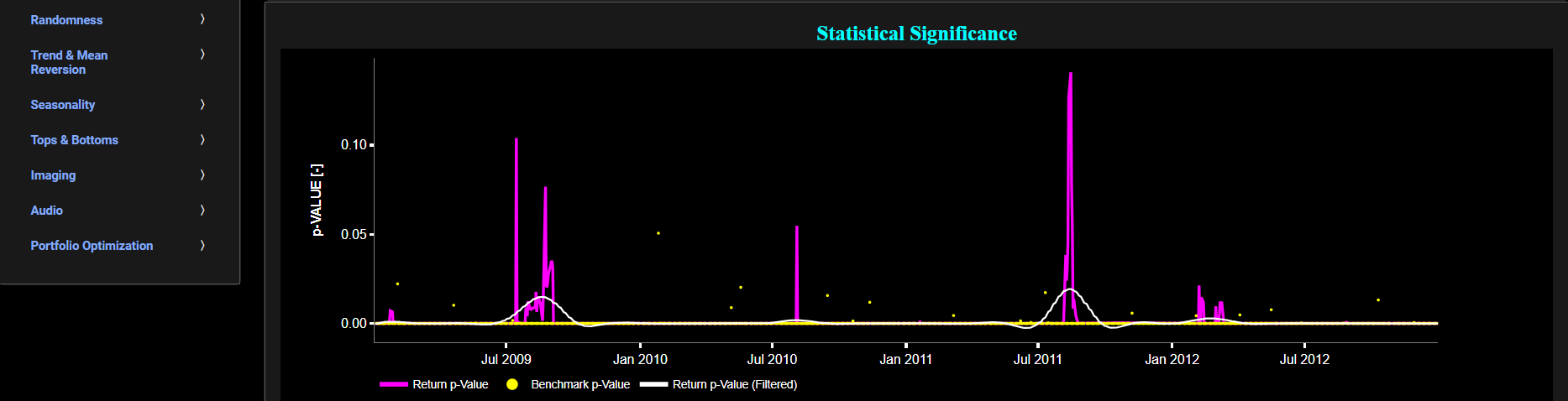

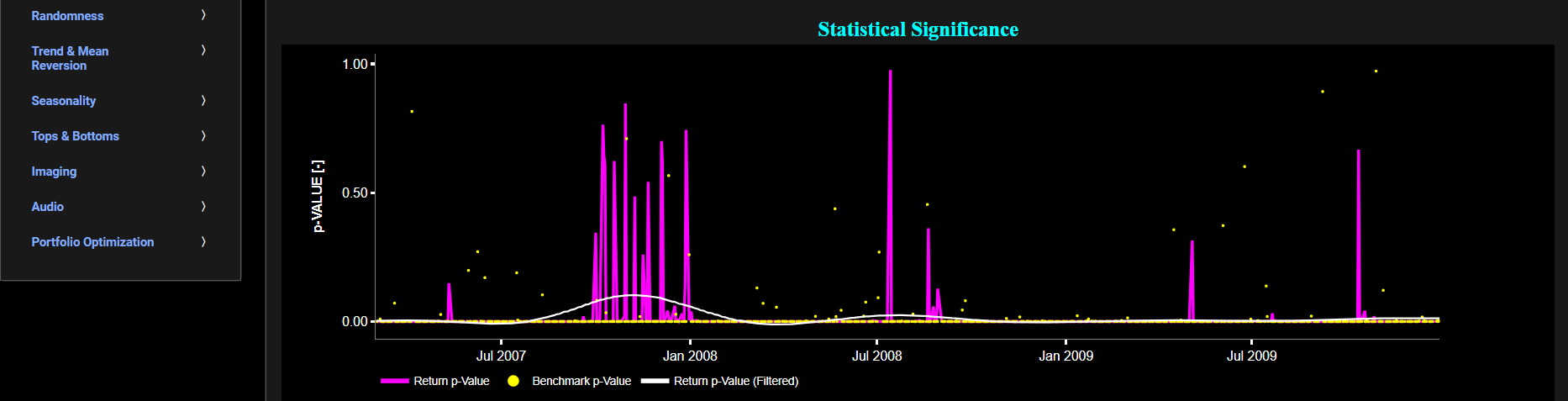

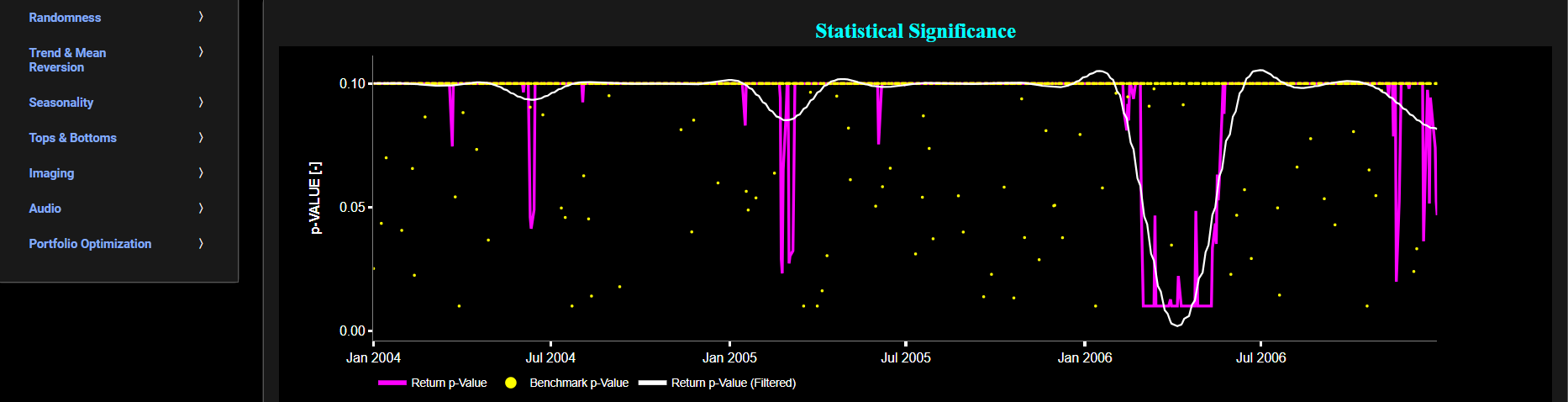

This page provides 2 graphs. The upper graph visualizes historical asset prices in cyan color using either daily, weekly, or monthly close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the y-axis. Next to the cyan line, the upper graph also visualizes the test statistic for the Kwiatkowski–Phillips–Schmidt–Shin (KPSS) test for the asset incremental returns in magenta, and for a benchmark time series in yellow. This benchmark signal is based upon synthetically generated, normally distributed, independent and identically distributed (i.i.d.) returns. For this benchmark signal, each step is independent of the previous steps, and there is no systematic trend or pattern in the data. The yellow data points are given here for reference and comparison purposes (hence benchmarking). Next the white line represents a filtered version of the magenta line through the application of a low-pass Butterworth digital filter.

In our case the KPSS test is applied using a constant (intercept) and a trend component when testing for stationarity of the asset returns. When a time series is non-stationary (i.e. it has a so-called unit root) it means that it exhibits a stochastic trend or a non-constant mean over time. In other words, the statistical properties of the time series, such as the mean and variance, are not constant over time. Here the null hypothesis for the KPSS test is that the time series data is stationary around a deterministic trend. In other words, the null hypothesis assumes that the data is stationary with a constant mean and variance but may have a linear trend. The alternative hypothesis is that the time series is non-stationary, meaning it has a unit root, a stochastic trend, or some other form of non-constant variance. Now if the test statistic is significantly different from 0 (i.e. far from 0 by exceeding critical values), it suggests that the time series has a unit root, indicating non-stationarity. In this case, you may reject the null hypothesis (i.e., you conclude that the data is non-stationary). Conversely if the test statistic is close to 0, it suggests that the time series is stationary around a deterministic trend. In this case, you fail to reject the null hypothesis (i.e., you conclude that the data is stationary).

Next the lower graph visualizes the statistical significance (or so called p-value) for the KPSS test and for the benchmark signal. A low p-value (typically less than 0.05) suggests that the data is non-stationary, as you would reject the null hypothesis. A high p-value suggests that the data is stationary, as you fail to reject the null hypothesis. Finally in the menu bar (located just above the graphs), you can also select specific values for the lookback time period on which the KPSS test will be computed.

Stationarity

Phillips-Perron Test

This page provides 2 graphs. The upper graph visualizes historical asset prices in cyan color using either daily, weekly, or monthly close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the y-axis. Next to the cyan line, the upper graph also visualizes the test statistic for the Phillips-Perron (PP) test for the asset cumulative returns in magenta, and for a synthetically generated random walk in yellow. In a random walk, each step is independent of the previous steps, and there is no systematic trend or pattern in the data. The yellow random walk data points are given here for reference and comparison purposes (i.e. benchmarking). Next the white line represents a filtered version of the magenta line through the application of a low-pass Butterworth digital filter.

In our case the PP test is applied using a constant (intercept) and a trend component when testing for stationarity of the asset returns. When a time series is non-stationary (i.e. it has a so-called unit root) it means that it exhibits a stochastic trend or a non-constant mean over time. In other words, the statistical properties of the time series, such as the mean and variance, are not constant over time. Here the null hypothesis of the PP test is that the time series has a unit root, which means it is non-stationary. The alternative hypothesis is that the time series is stationary, i.e. it does not have a unit root. Now if the test statistic is significantly different from 0 (i.e. far from 0 by exceeding critical values), it suggests evidence against the null hypothesis. In other words, it indicates that the time series is likely stationary, as it does not exhibit a unit root. Conversely if the test statistic is close to 0, it means that there is not enough evidence to reject the null hypothesis. In this case, the time series is more likely to be non-stationary, indicating the presence of a unit root.

Next the lower graph visualizes the statistical significance (or so called p-value) for the PP test and for the benchmark signal. If the p-value is less than a significance level (typically 0.05), we may reject the null hypothesis and conclude that the data is stationary. Otherwise, if the p-value is greater than the significance level, we may fail to reject the null hypothesis, hence indicating non-stationarity. Finally in the menu bar (located just above the graphs), you can also select specific values for the lookback time period on which the PP test will be computed.

Stationarity

Zivot-Andrews Test

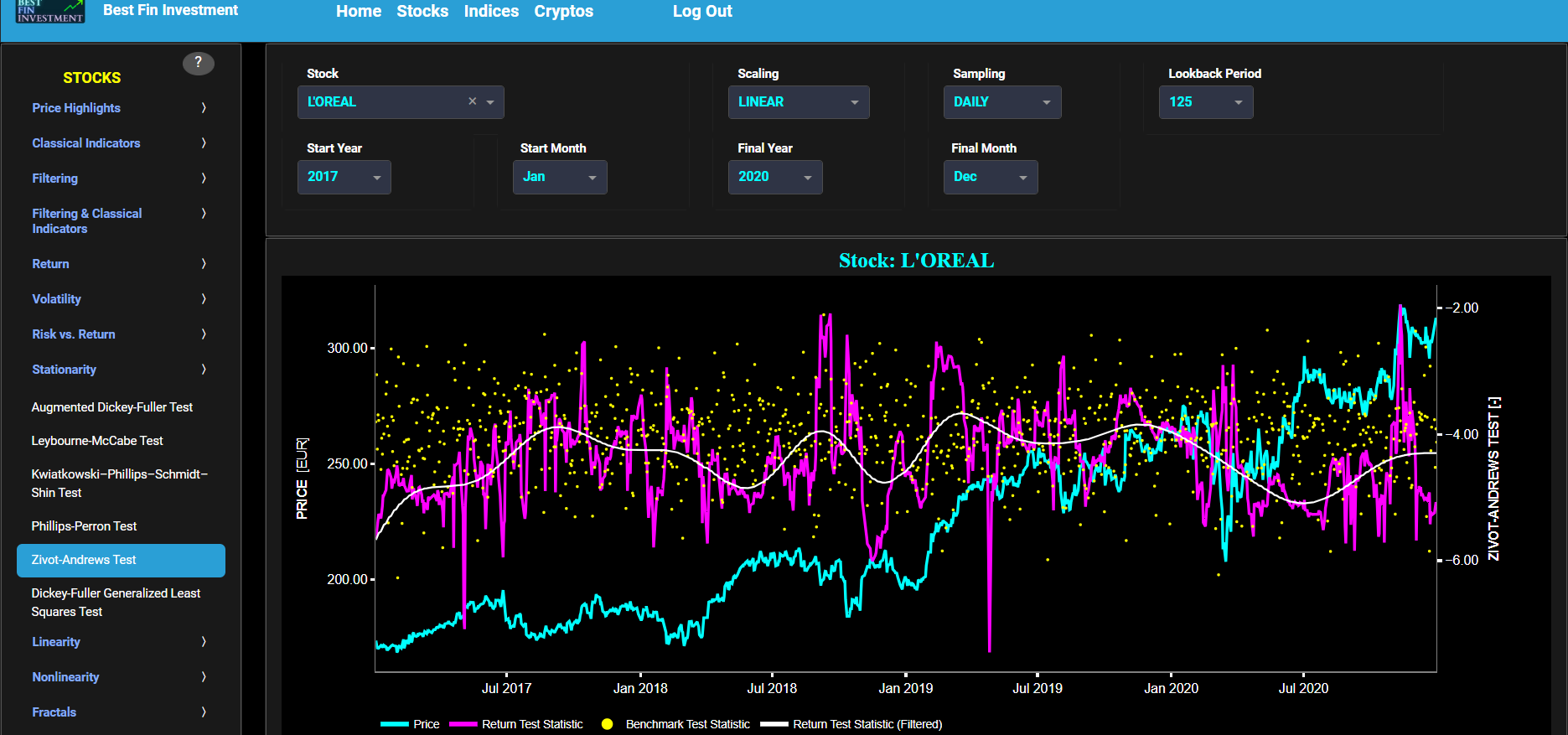

This page provides 2 graphs. The upper graph visualizes historical asset prices in cyan color using either daily, weekly, or monthly close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the y-axis. Next to the cyan line, the upper graph also visualizes the test statistic for the Zivot-Andrews (ZA) test for the asset cumulative returns in magenta, and for a synthetically generated random walk in yellow. In a random walk, each step is independent of the previous steps, and there is no systematic trend or pattern in the data. The yellow random walk data points are given here for reference and comparison purposes (i.e. benchmarking). Next the white line represents a filtered version of the magenta line through the application of a low-pass Butterworth digital filter.

In our case the ZA test is applied using a constant (intercept) and a trend component when testing for stationarity of the asset returns. Note that this test does allow for the possibility of a single structural break in the time series, which means that the characteristics of the series may have changed at some point. When a time series is non-stationary (i.e. it has a so-called unit root) it means that it exhibits a stochastic trend or a non-constant mean over time. In other words, the statistical properties of the time series, such as the mean and variance, are not constant over time. Here the null hypothesis of the ZA test is that the time series has a unit root, which means it is non-stationary. The alternative hypothesis is that the time series is stationary, i.e. it does not have a unit root. Now if the test statistic is significantly different from 0 (i.e. far from 0 by exceeding critical values), it suggests evidence against the null hypothesis. In other words, it indicates that the time series is likely stationary, as it does not exhibit a unit root. Conversely if the test statistic is close to 0, it means that there is not enough evidence to reject the null hypothesis. In this case, the time series is more likely to be non-stationary, indicating the presence of a unit root.

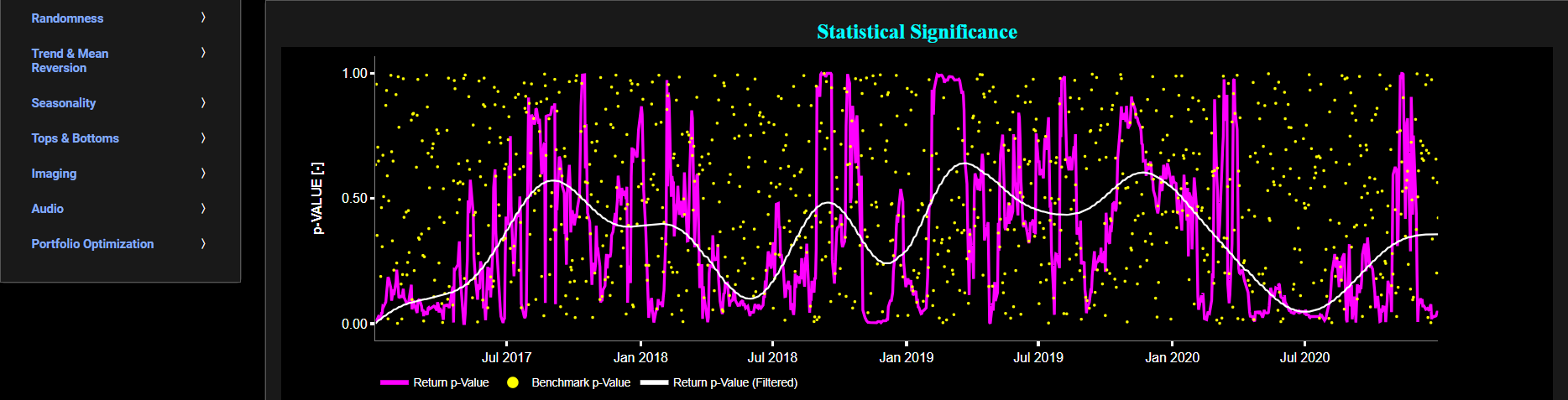

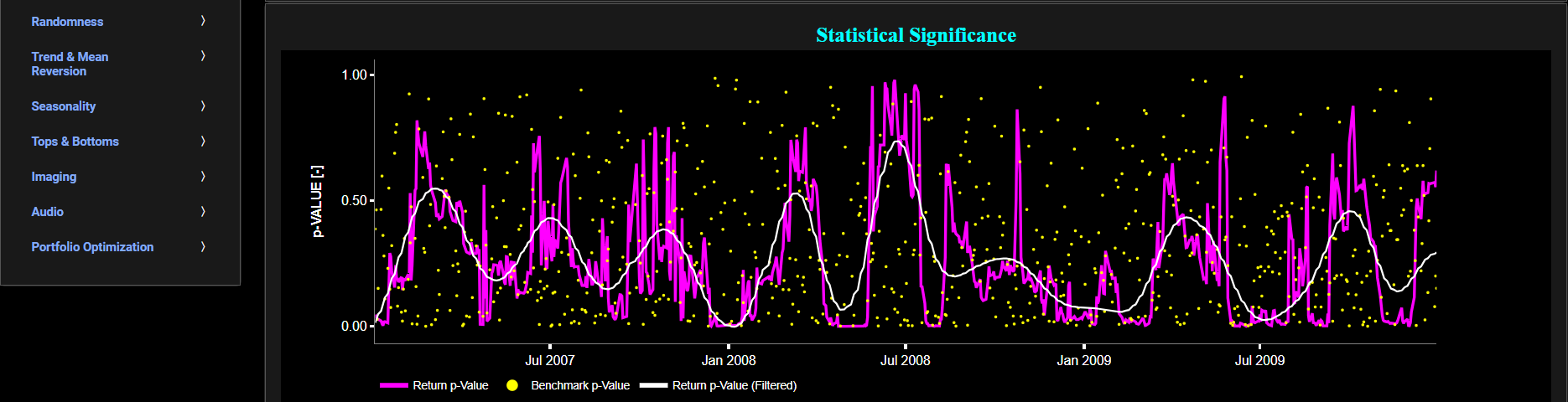

Next the lower graph visualizes the statistical significance (or so called p-value) for the ZA test and for the benchmark signal. If the p-value is less than a significance level (typically 0.05), we may reject the null hypothesis and conclude that the data is stationary. Otherwise, if the p-value is greater than the significance level, we may fail to reject the null hypothesis, hence indicating non-stationarity. Finally in the menu bar (located just above the graphs), you can also select specific values for the lookback time period on which the ZA test will be computed.

Stationarity

Dickey-Fuller Generalized Least Squares Test

This page provides 2 graphs. The upper graph visualizes historical asset prices in cyan color using either daily, weekly, or monthly close prices. In the menu bar (located just above the graphs), you can also select specific time periods to visualize and also toggle between a linear or logarithmic price scaling on the y-axis. Next to the cyan line, the upper graph also visualizes the test statistic for the Dickey-Fuller Generalized Least Squares (DFGLS) test for the asset cumulative returns in magenta, and for a synthetically generated random walk in yellow. In a random walk, each step is independent of the previous steps, and there is no systematic trend or pattern in the data. The yellow random walk data points are given here for reference and comparison purposes (i.e. benchmarking). Next the white line represents a filtered version of the magenta line through the application of a low-pass Butterworth digital filter.

In our case the DF-GLS test is applied using a constant (intercept) and a trend component when testing for stationarity of the asset returns. The DF-GLS test is a specialized modification of the Augmented Dickey-Fuller (ADF) test that may be chosen when serial correlation is a concern. The DF-GLS test is particularly useful when dealing with time series data that may exhibit serial correlation, which can lead to inaccurate results in standard unit root tests like the original Dickey-Fuller test. By using Generalized Least Squares, the DF-GLS test provides more robust results under such conditions. Now when a time series is non-stationary (i.e. it has a so-called unit root) it means that it exhibits a stochastic trend or a non-constant mean over time. In other words, the statistical properties of the time series, such as the mean and variance, are not constant over time. Here the null hypothesis of the DF-GLS test is that the time series has a unit root, which means it is non-stationary. The alternative hypothesis is that the time series is stationary, i.e. it does not have a unit root. Now if the test statistic is significantly different from 0, it suggests evidence against the null hypothesis. In other words, it indicates that the time series is likely stationary, after accounting for potential serial correlation and a deterministic trend component. Conversely if the test statistic is close to 0, it means that there is not enough evidence to reject the null hypothesis. In this case, the time series is more likely to be non-stationary, indicating the presence of a unit root.

Next the lower graph visualizes the statistical significance (or so called p-value) for the DF-GLS test and for the benchmark signal. If the p-value is less than a significance level (typically 0.05), we may reject the null hypothesis and conclude that the data is stationary. Otherwise, if the p-value is greater than the significance level, we may fail to reject the null hypothesis, hence indicating non-stationarity. Finally in the menu bar (located just above the graphs), you can also select specific values for the lookback time period on which the DF-GLS test will be computed.

Linearity

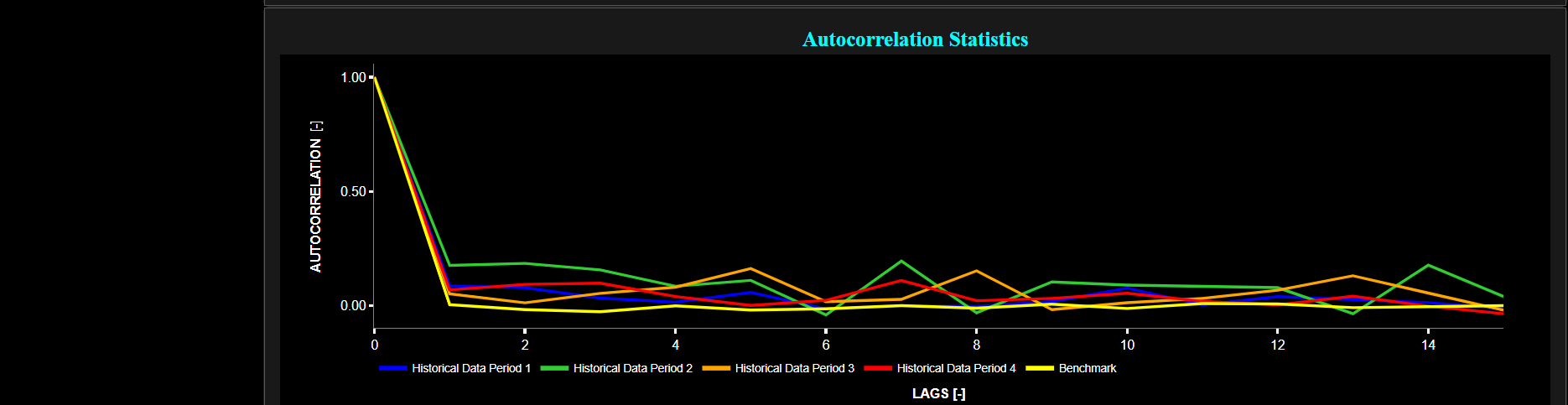

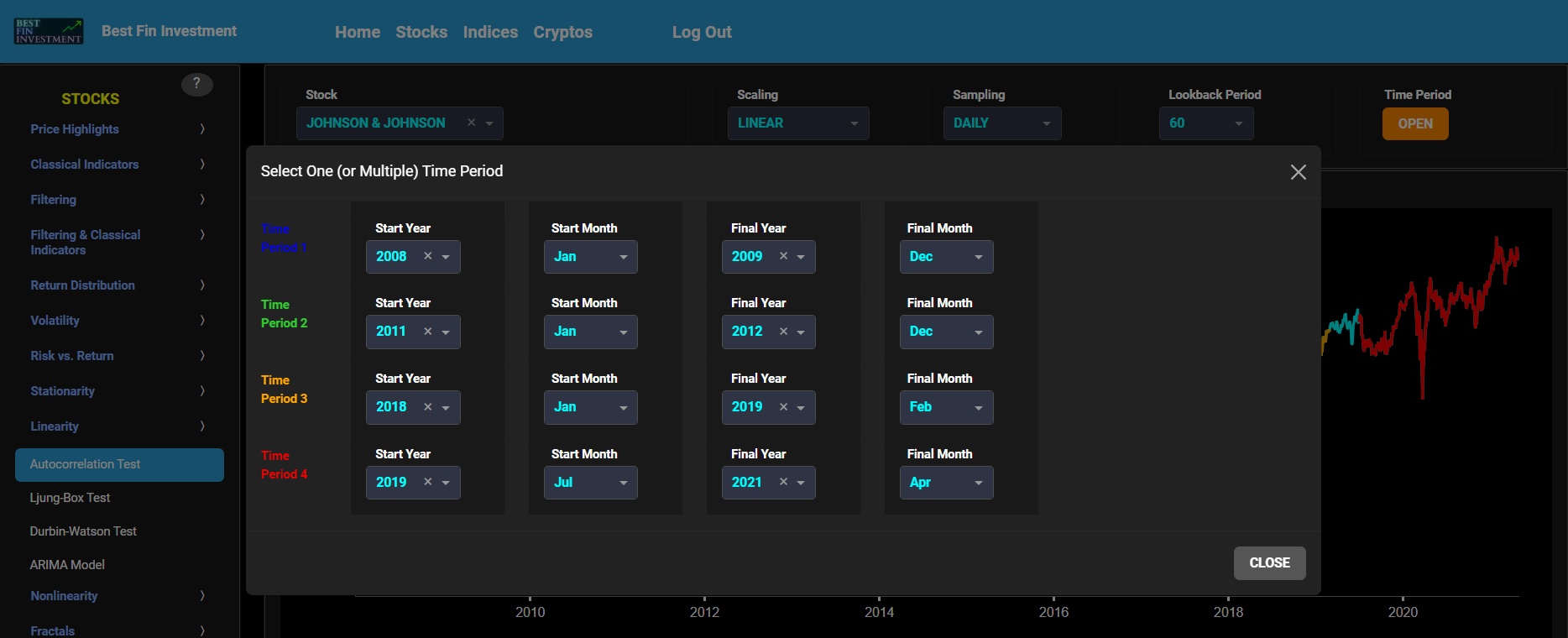

Autocorrelation Test

This page provides 3 graphs. The upper graph visualizes historical asset prices using either daily, weekly, or monthly close prices. In the menu bar (located just above the graphs), you can also toggle between a linear or logarithmic price scaling on the y-axis. Further you can select up to 4 specific time periods for further comparisons of price and return characteristics. The middle graph visualizes the historical asset return, with asset “return” defined as incremental returns (i.e. quantified as the percentage change in the asset's price between consecutive time periods). These asset returns are shown for all selected time periods using dedicated colors. Finally the lower graph analyzes the asset returns through the autocorrelation statistic. Indeed the autocorrelation is a valuable tool in time series analysis. Positive autocorrelation can indicate trends or seasonality, while negative autocorrelation might suggest a pattern of oscillation or inverse behavior in the data. The magnitude of the autocorrelation value indicates the strength of the relationship, with larger absolute values indicating a stronger relationship.

In our case we visualize the Partial Autocorrelation Function (PACF) of asset returns. The PACF is computed using a lookback time period that is selected in the menu bar (located just above the graphs). While the standard Autocorrelation Function (ACF) measures the relationship between a data point and its lagged values (including all shorter lags), the PACF measures the relationship between a data point and its lagged values while controlling for the influence of shorter lags. Therefore the PACF is better suited for identifying the direct influence of a specific lag on the current data point. Now when it comes to ACF or PACF metrics it is important to recognize the following characteristics. First, linear patterns in data can result in significant autocorrelation in the ACF and PACF plots. Hence a linear pattern is a sufficient condition for observing autocorrelation. Conversely the absence of autocorrelation guarantees the absence of linear patterns in the data. However non-linear patterns in data can also lead to autocorrelation. For instance, if a time series follows a periodic or cyclic pattern, it may exhibit autocorrelation at specific lags. Therefore, the presence of autocorrelation does not necessarily imply a linear pattern; it can also indicate non-linear dependencies (particularly when these patterns manifest themselves as spikes or oscillations in the PACF). Note that although the PACF may detect nonlinear behavior in the data, it is generally not as robust or specific as dedicated nonlinear time series analysis. Finally the absence of significant autocorrelation in the ACF or PACF does not necessarily mean that there are no non-linear patterns in the data. Non-linear patterns may not produce strong autocorrelation in these plots, especially if they are complex or irregular. Summarizing: while linear patterns will always lead to autocorrelation in ACF and PACF plots, non-linear patterns in the data may or may not lead to autocorrelation, depending on whether they involve systematic relationships between data points at different lags.

Now returning to the graphs, the lower graph also includes a benchmark time series in yellow. This benchmark signal is based upon synthetically generated, normally distributed, independent and identically distributed (i.i.d.) returns. For this benchmark signal, each step is independent of the previous steps, and there is no systematic trend or pattern in the data. The yellow data points are given here for reference and comparison purposes (hence benchmarking).

Linearity

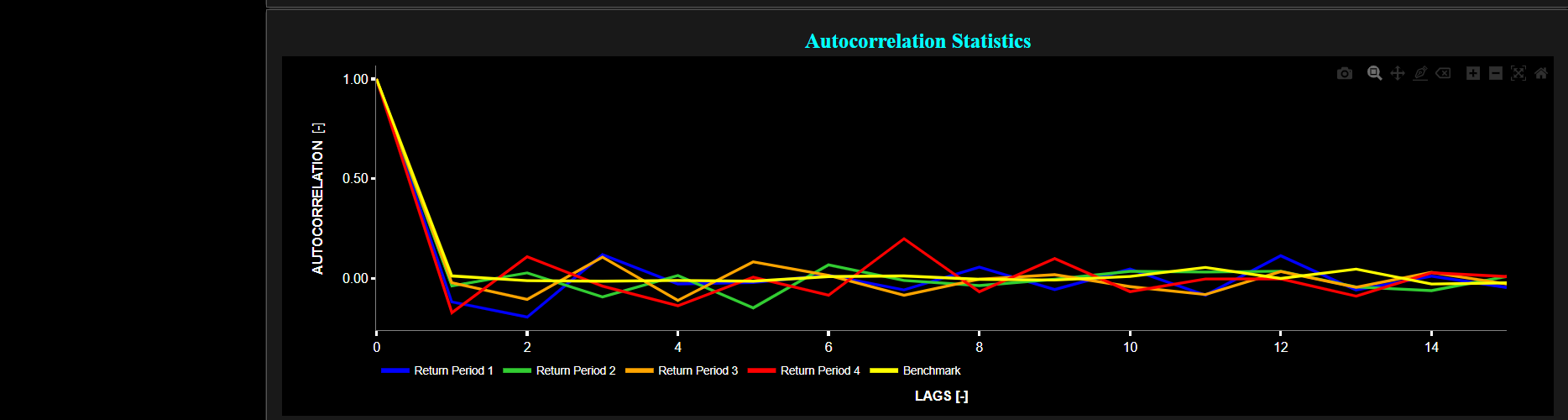

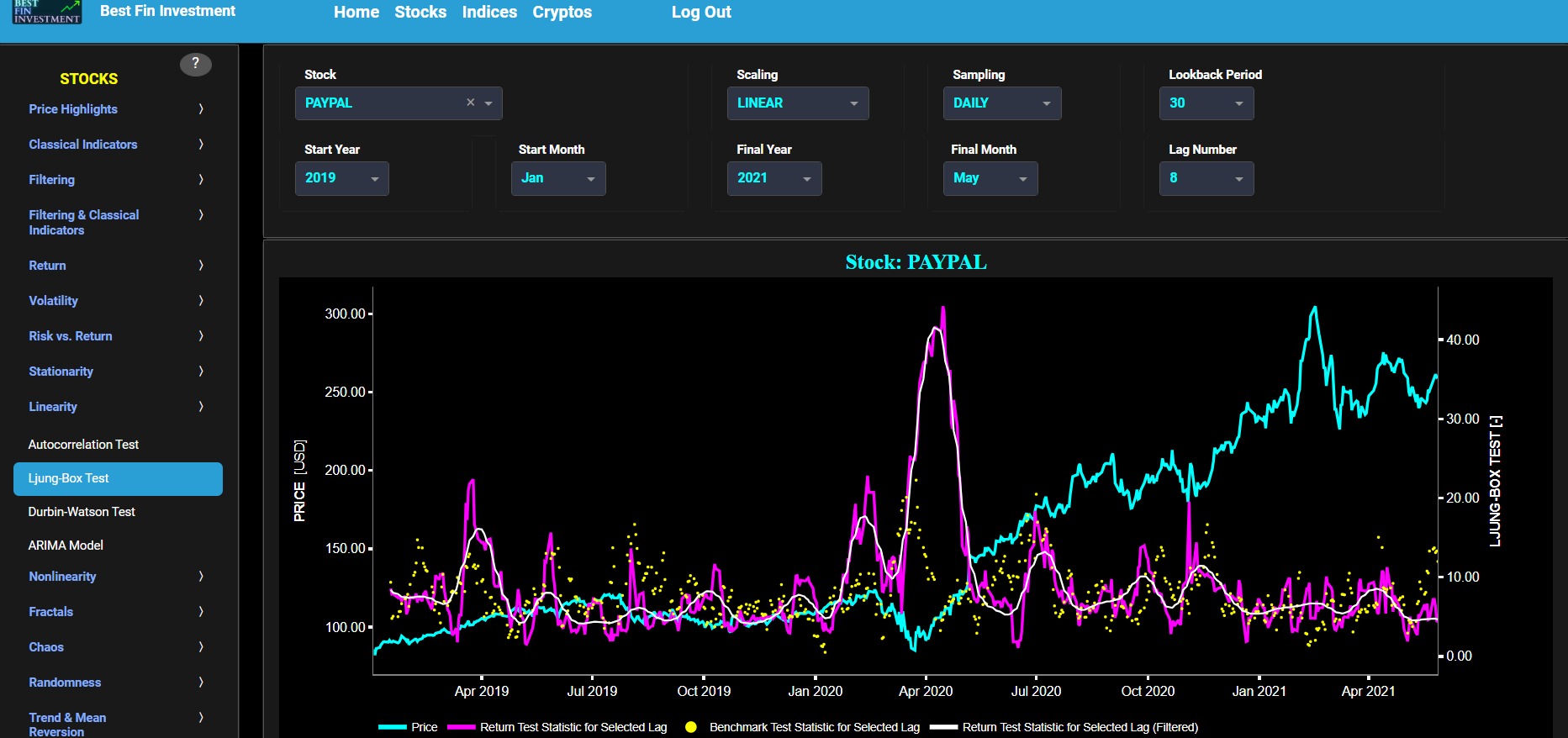

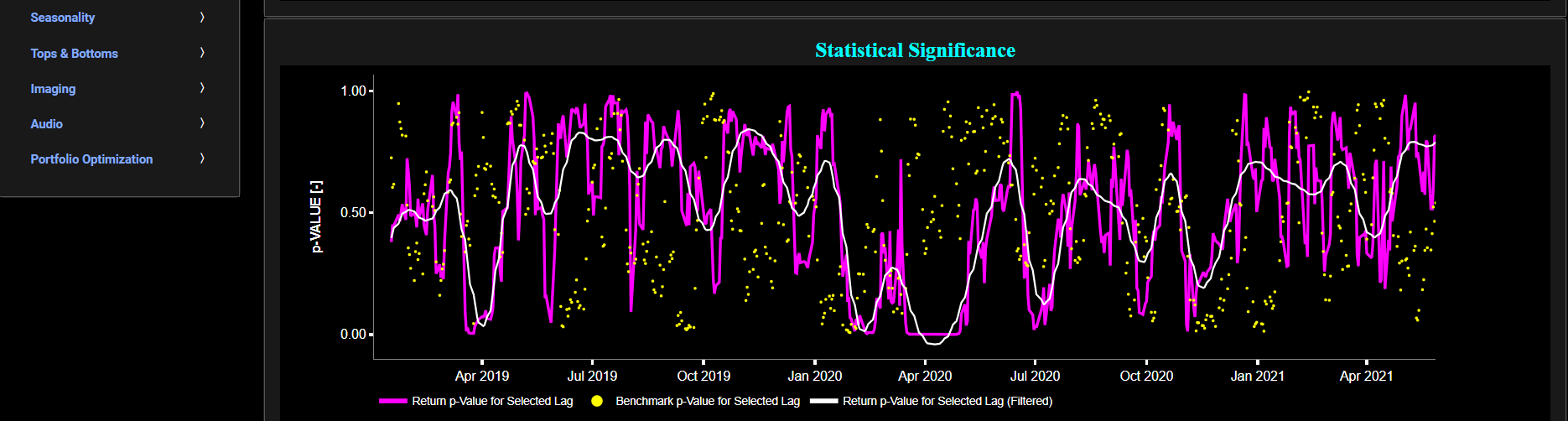

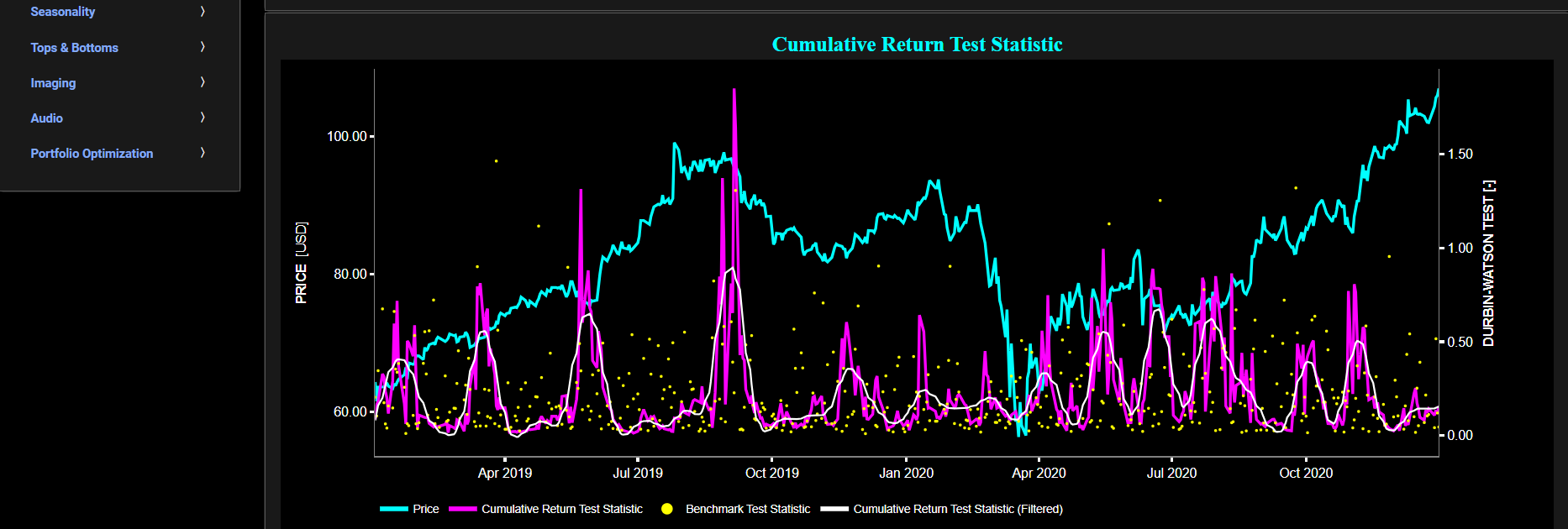

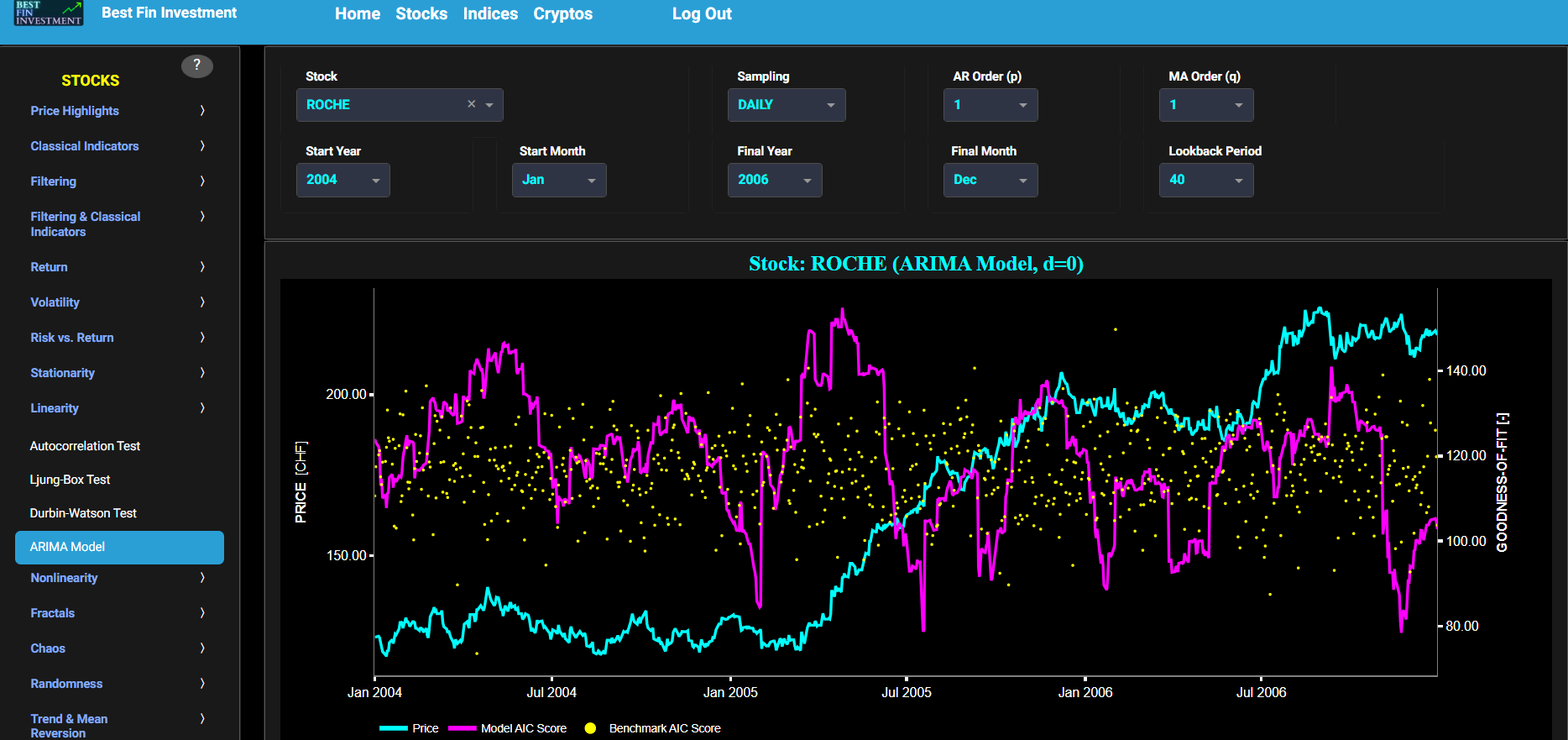

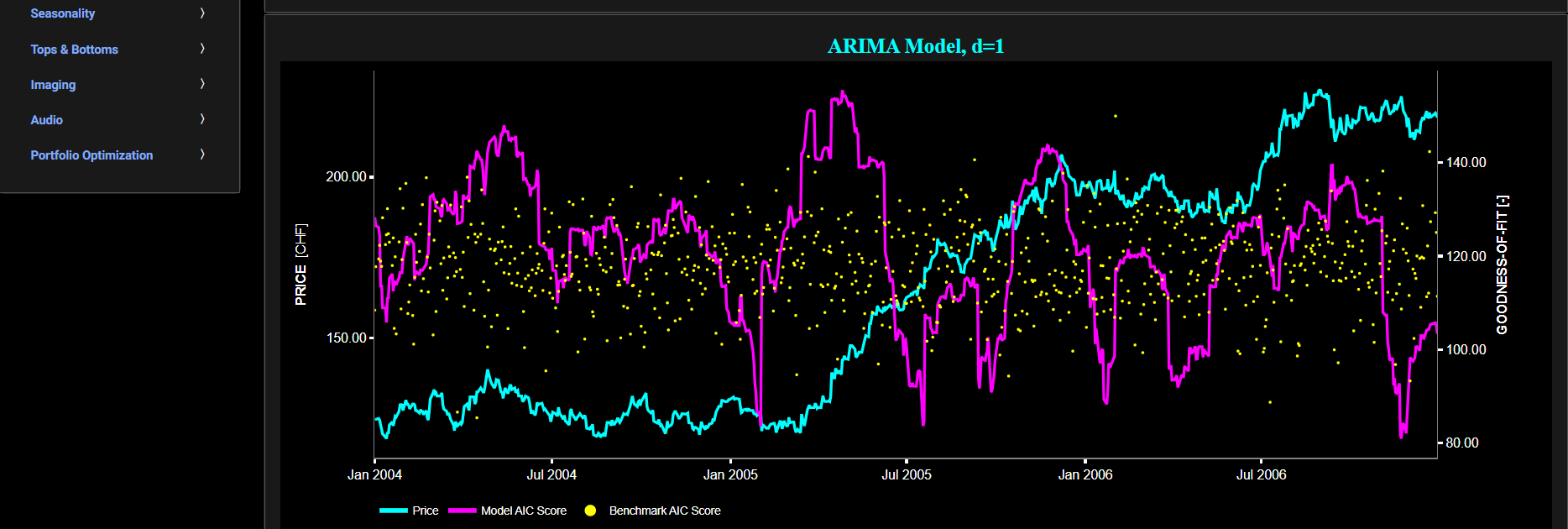

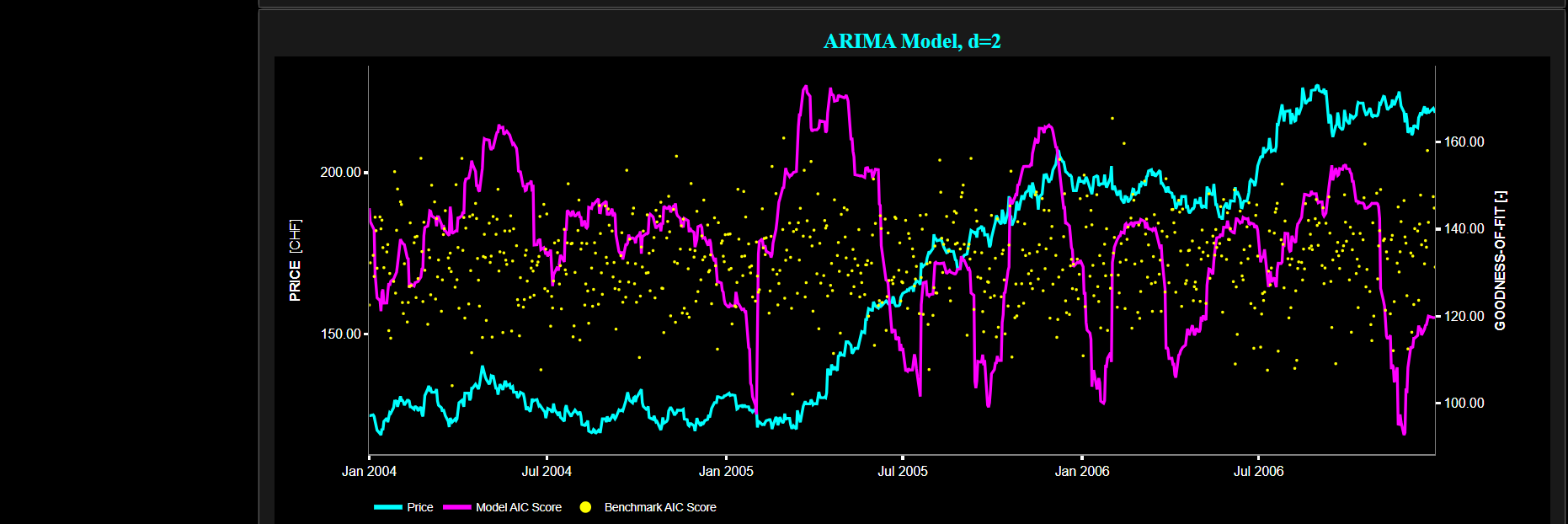

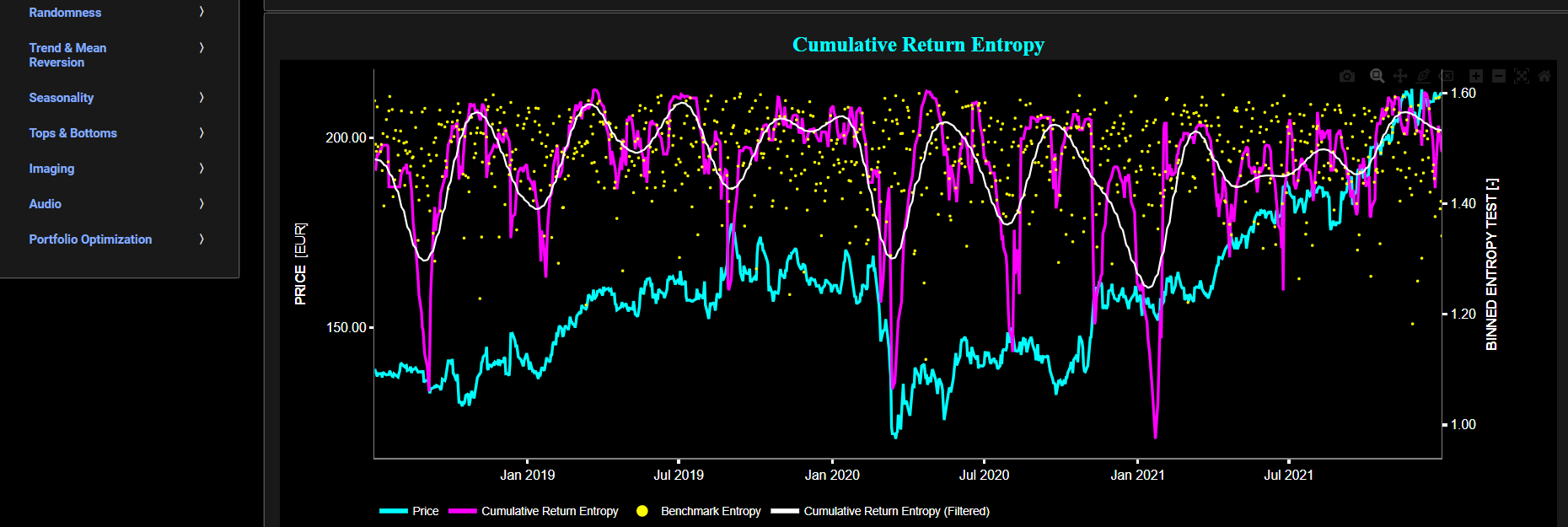

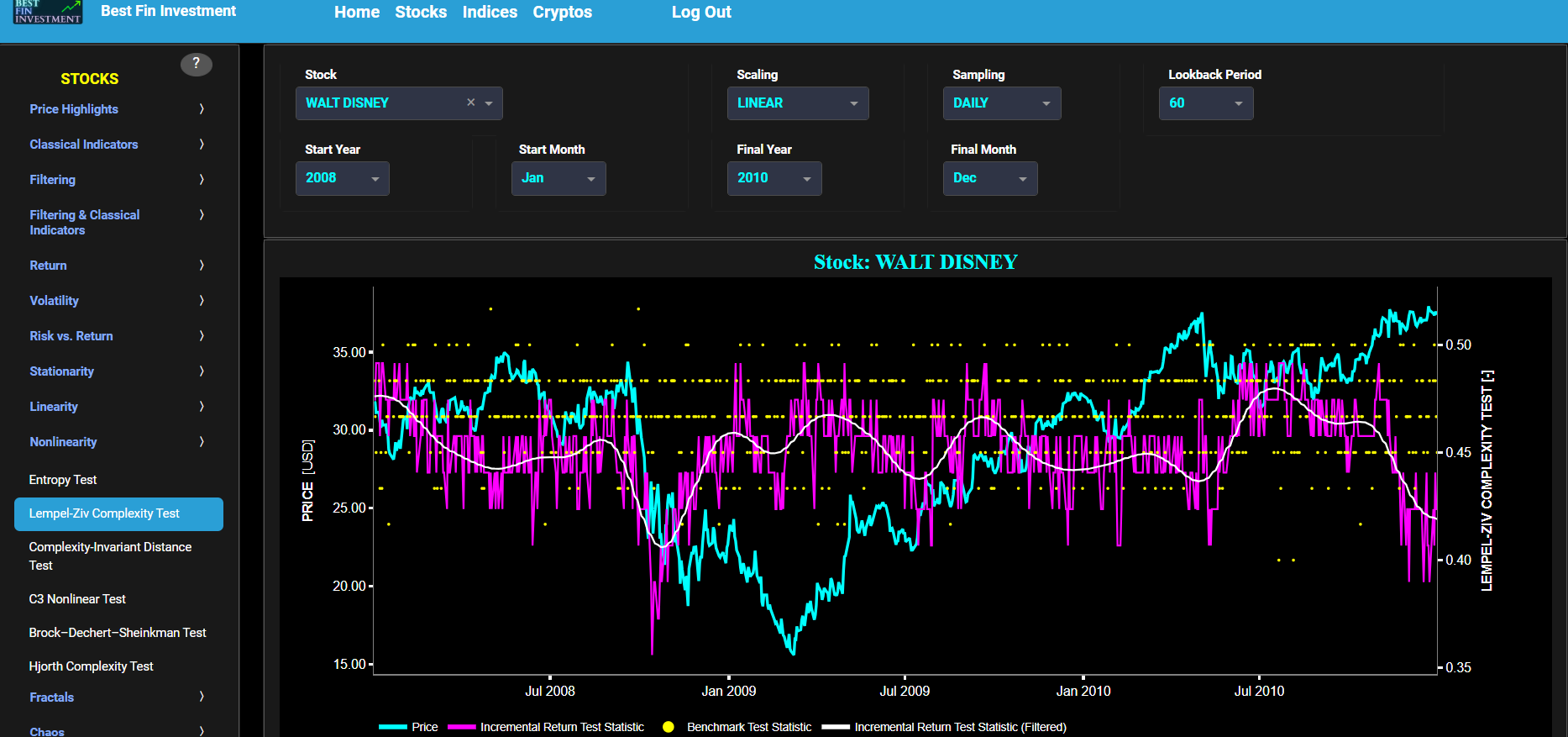

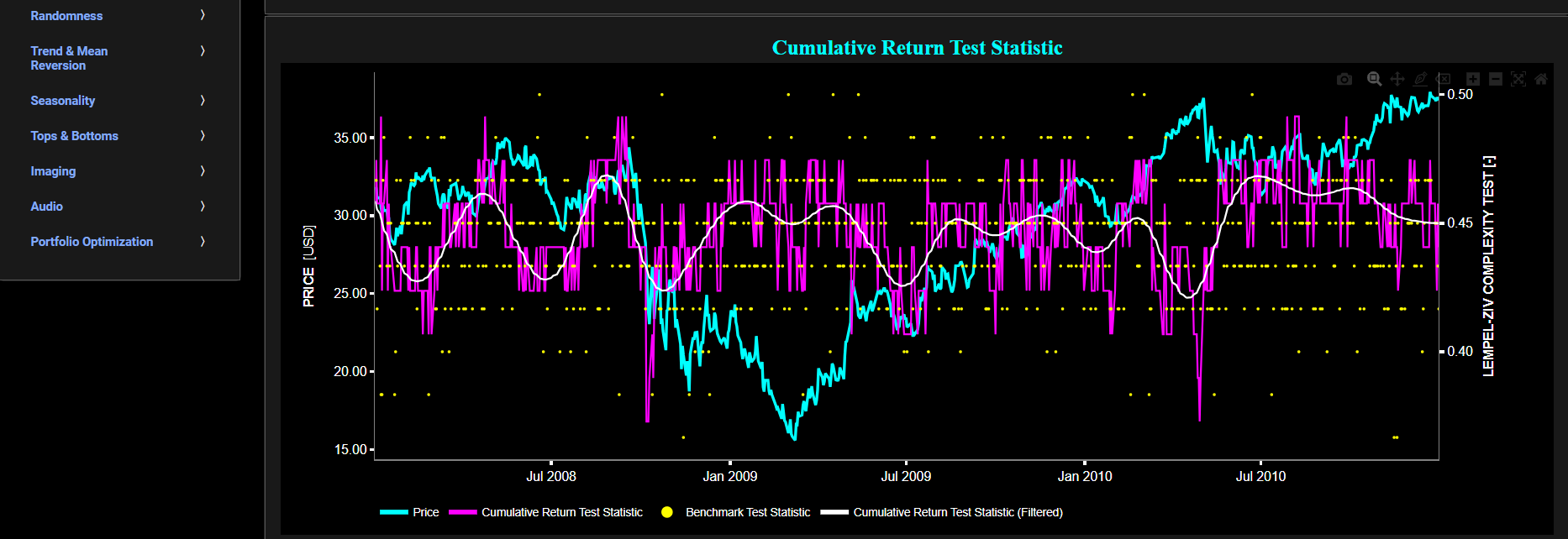

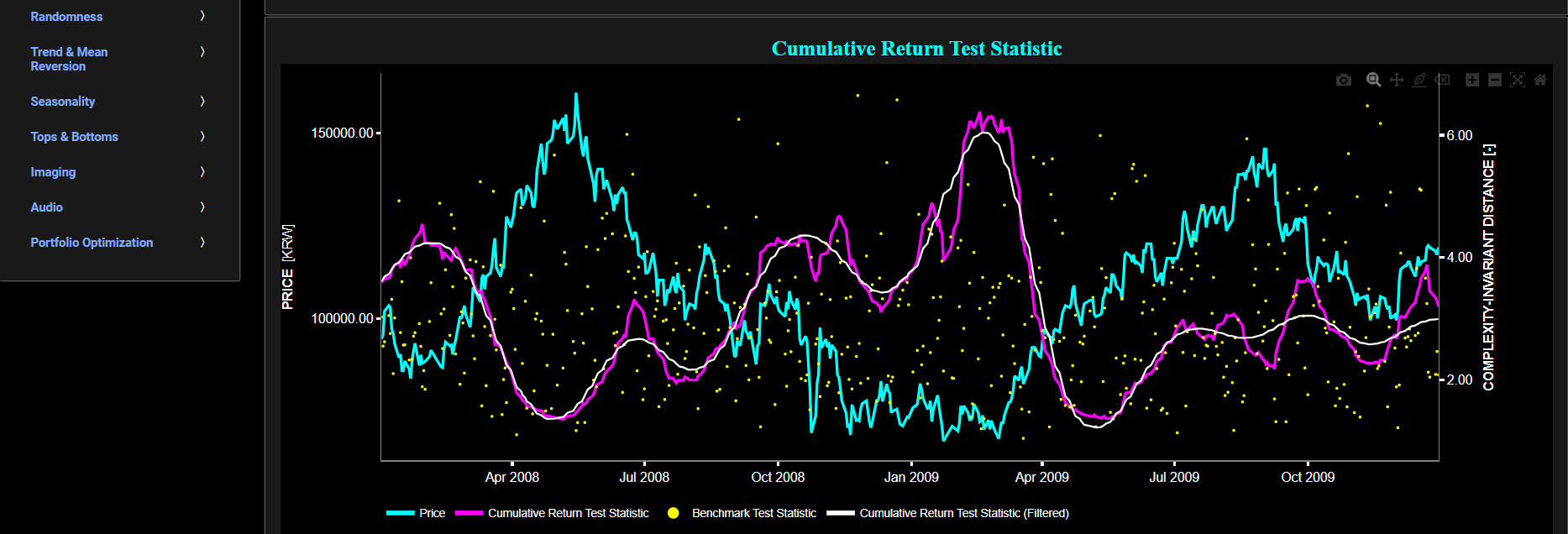

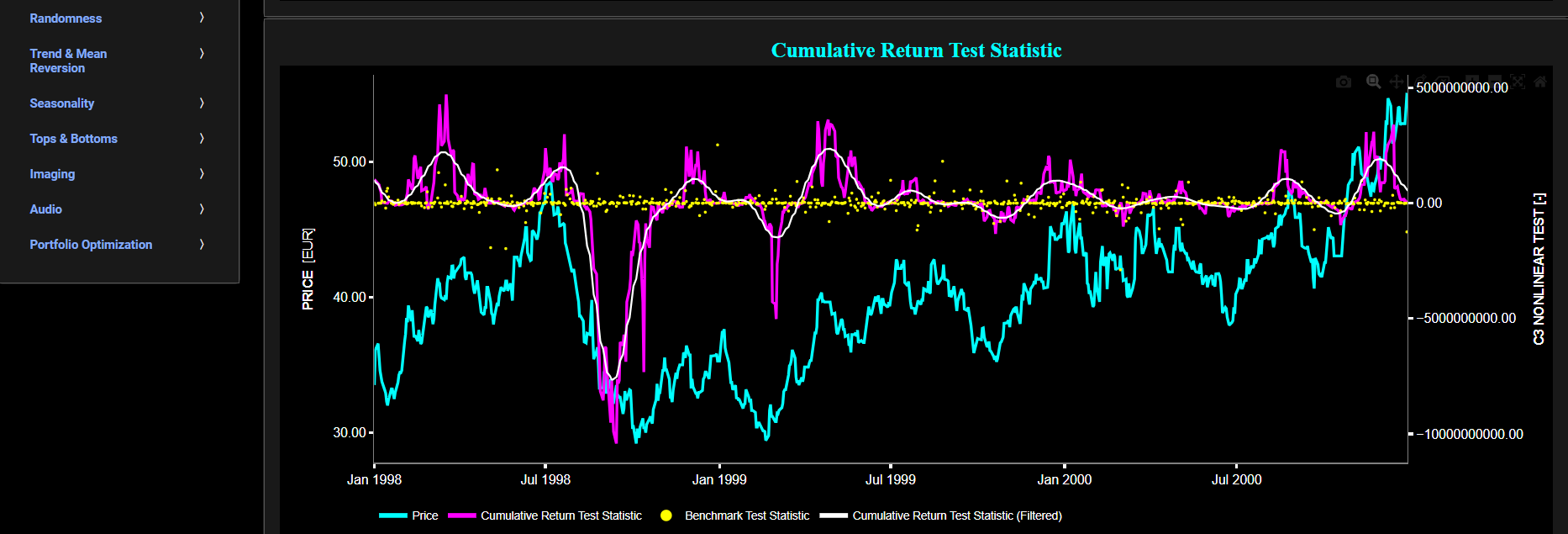

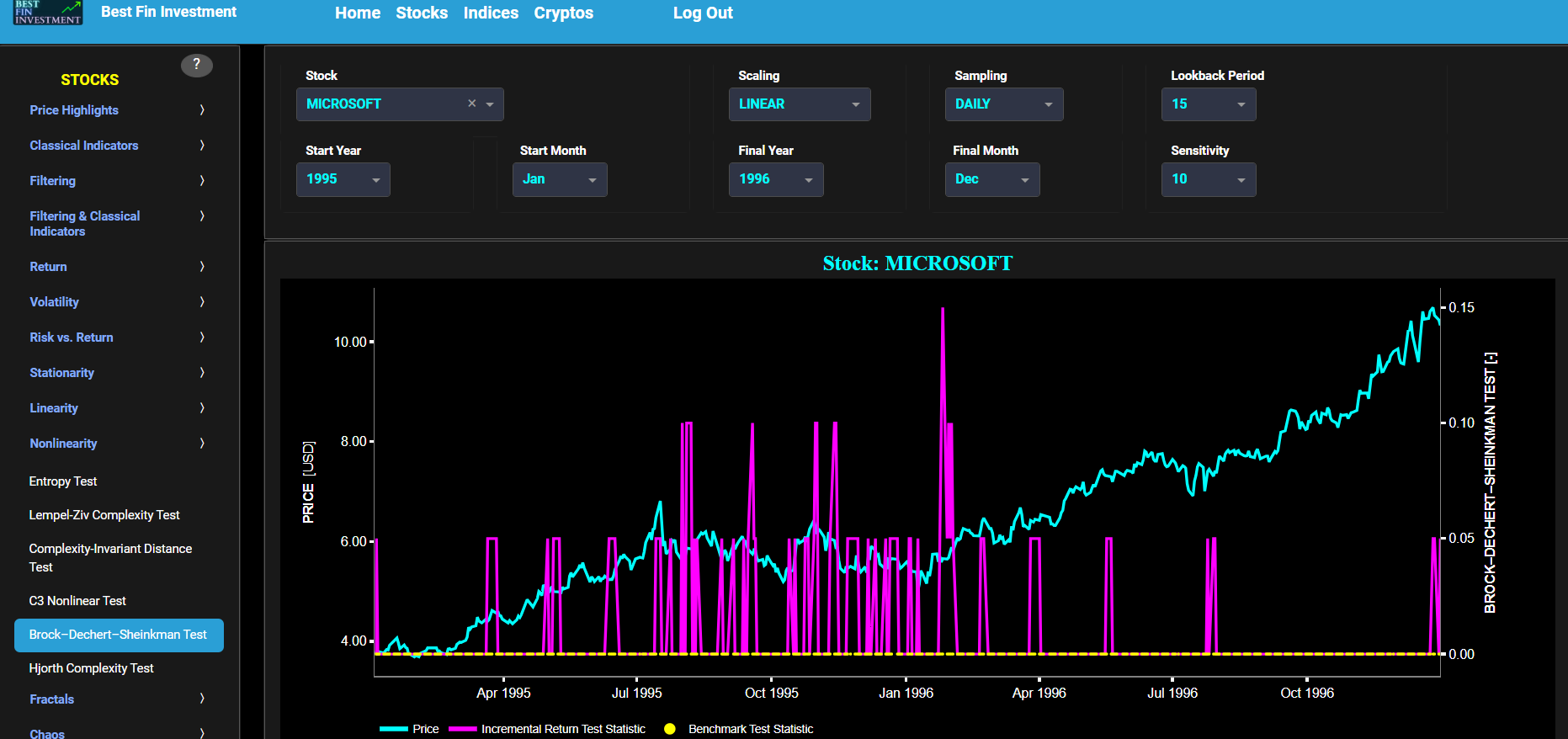

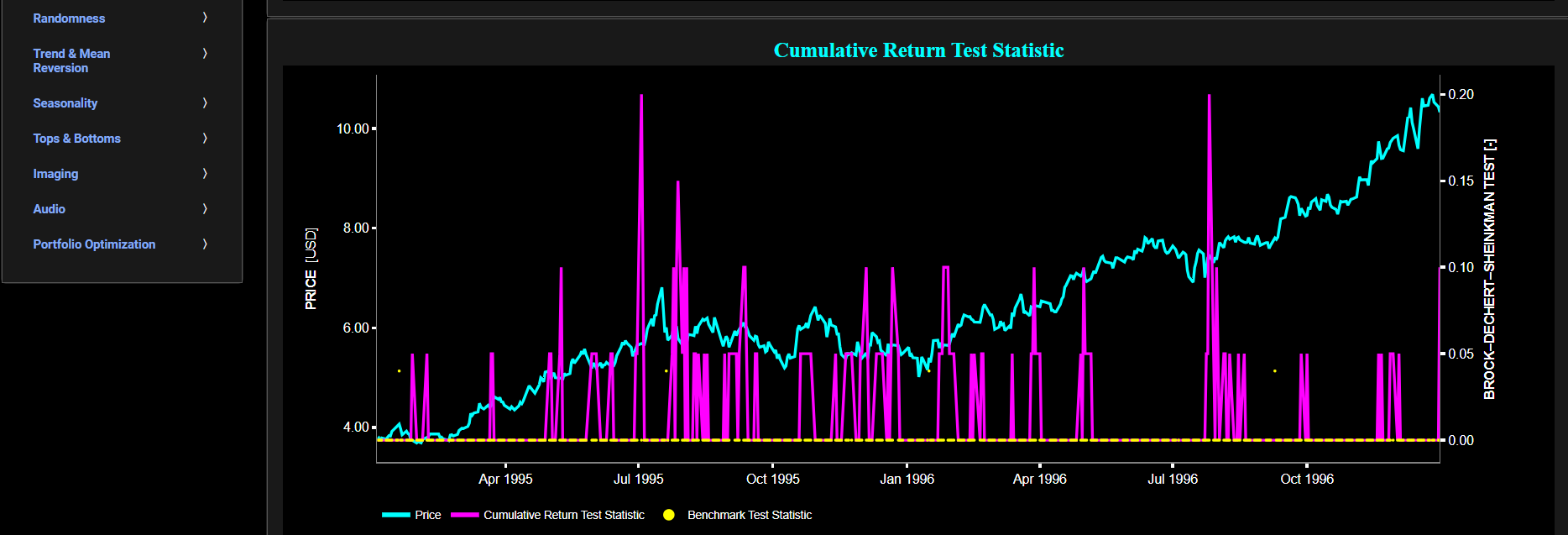

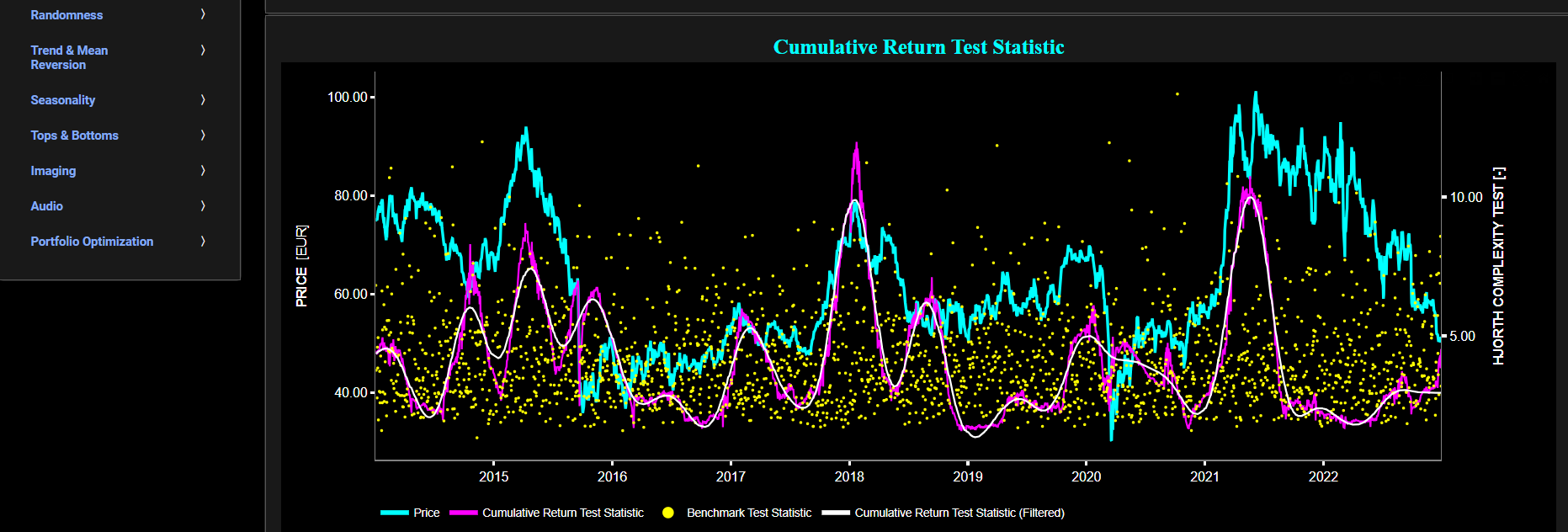

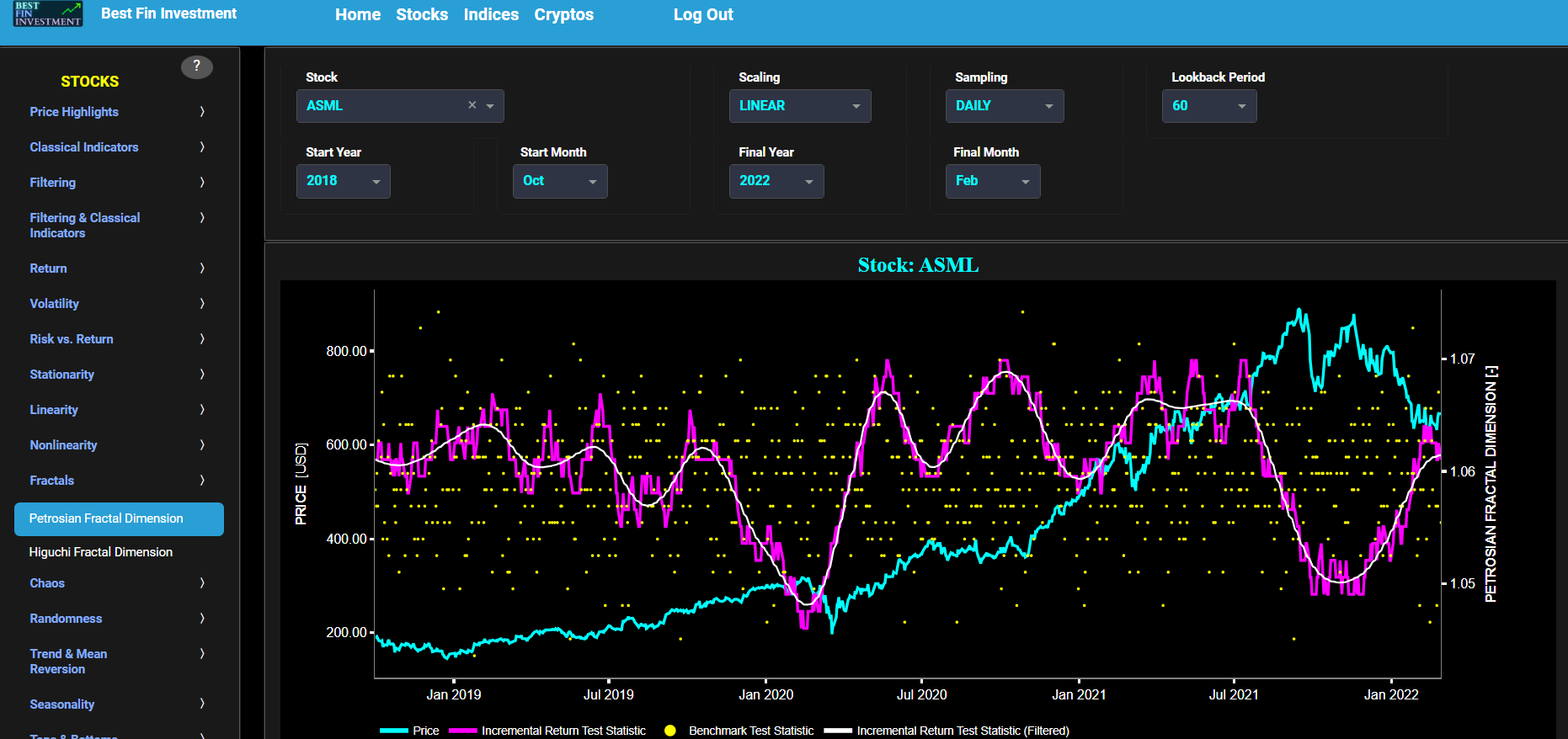

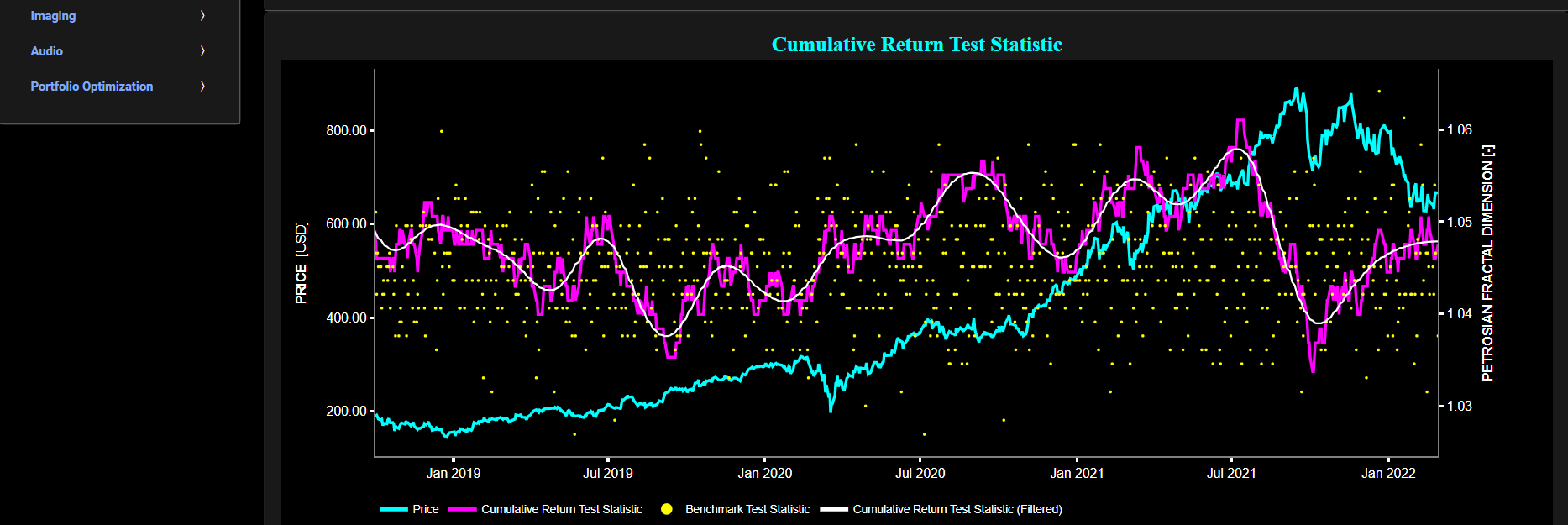

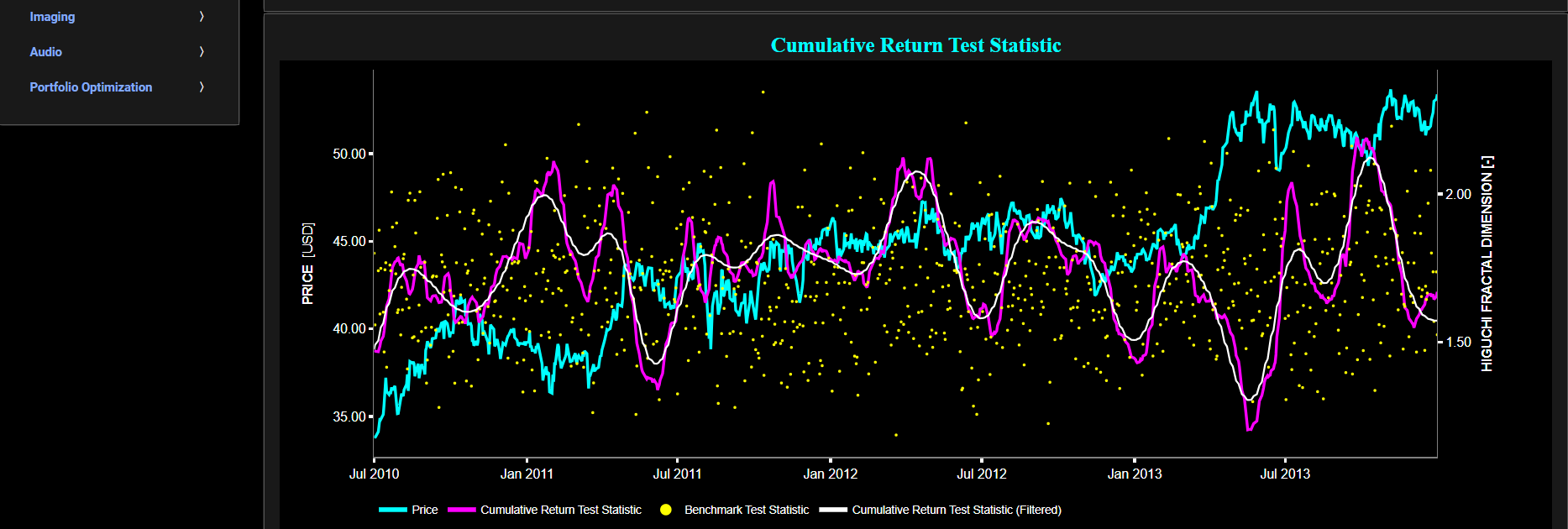

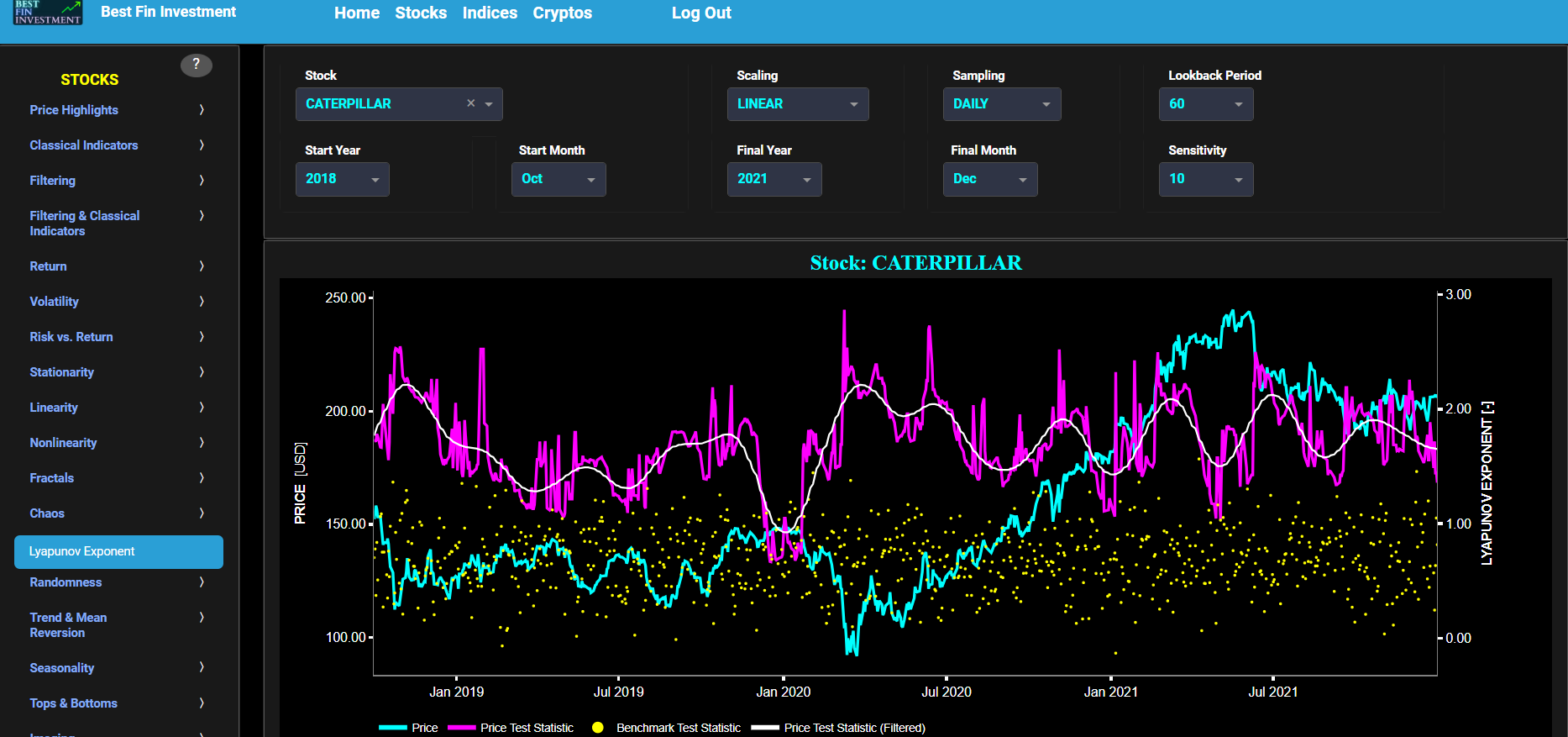

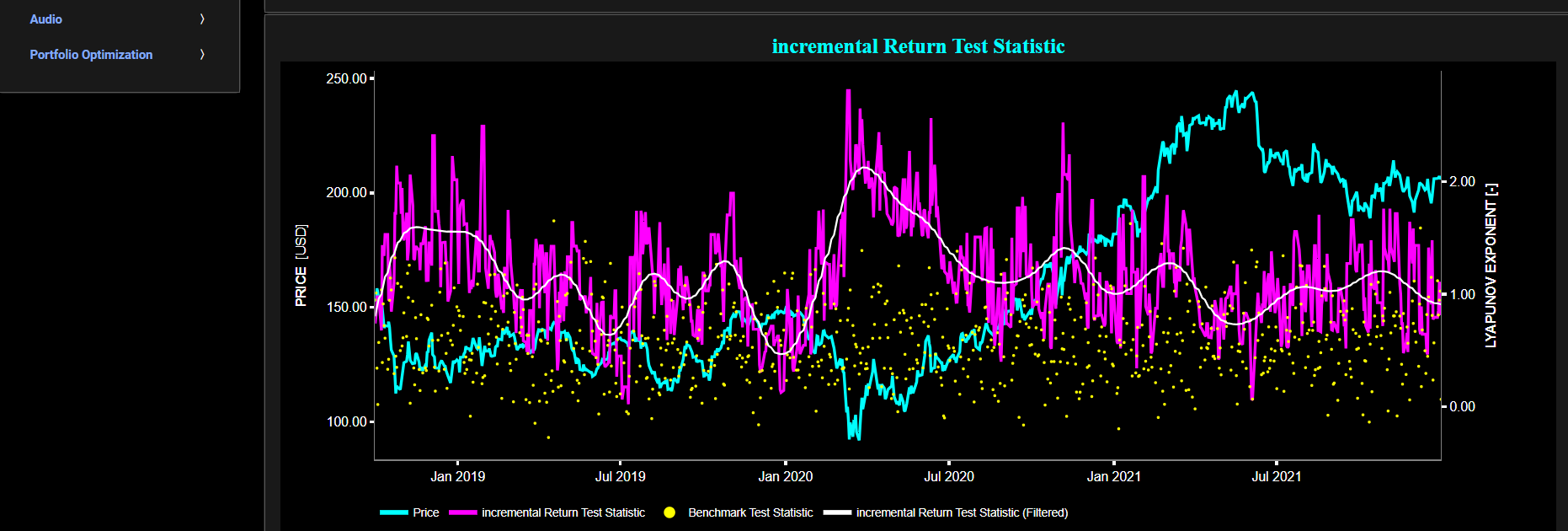

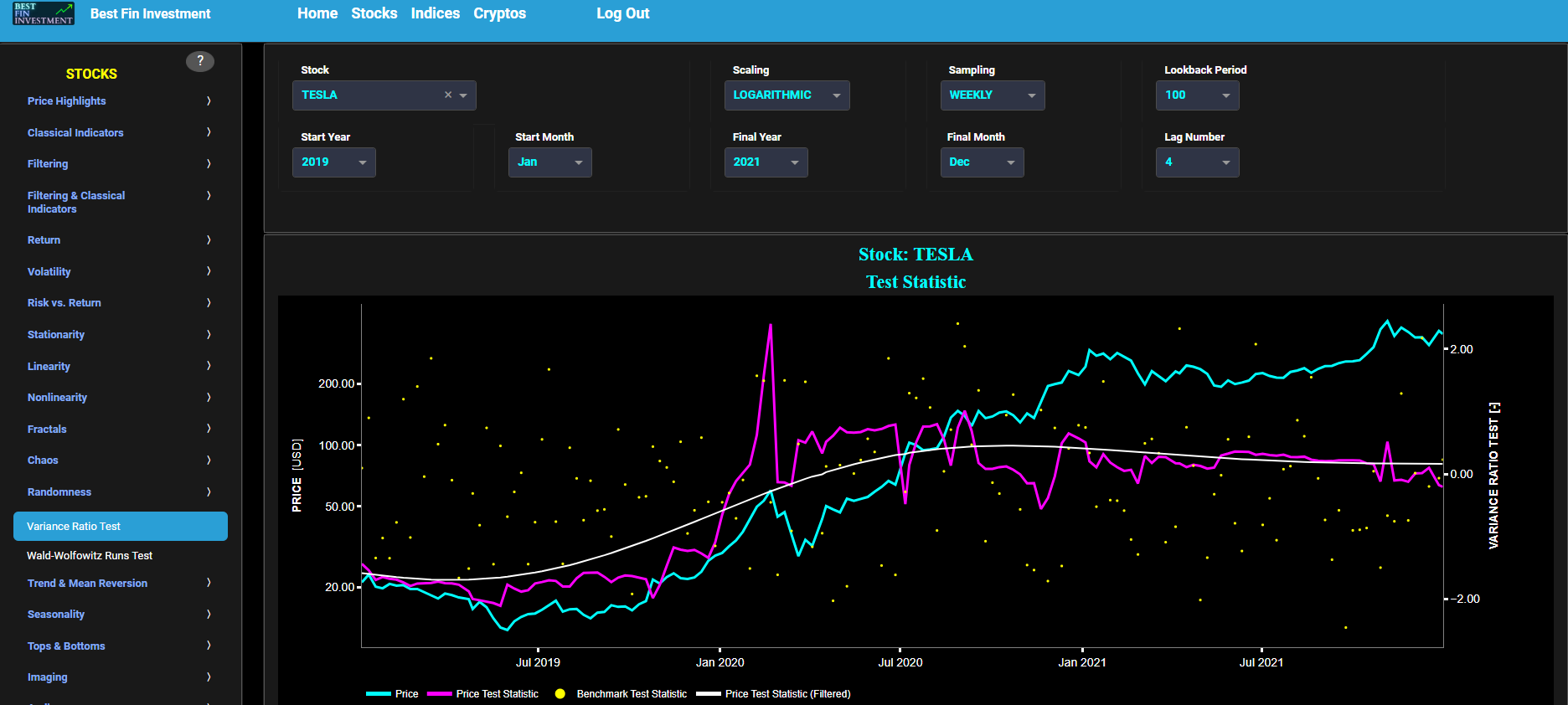

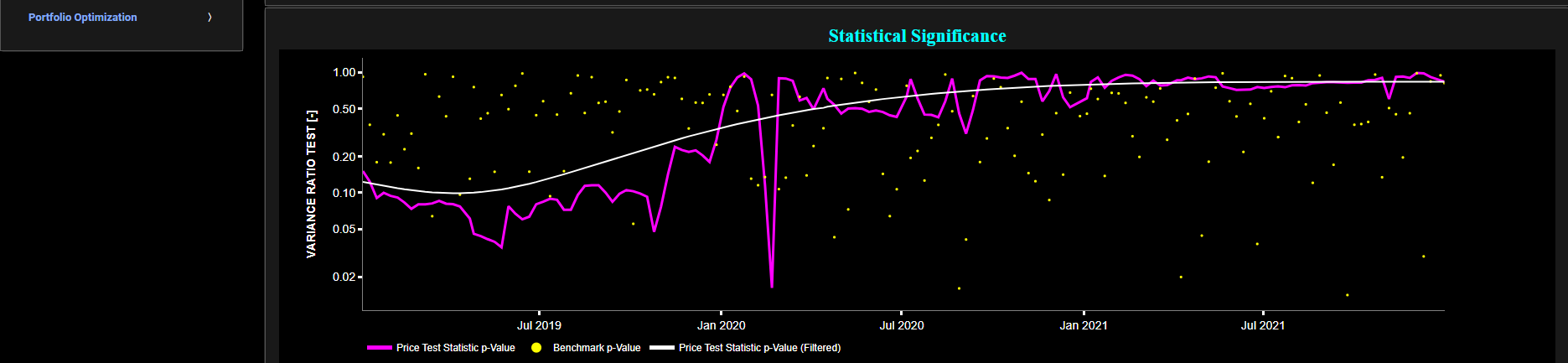

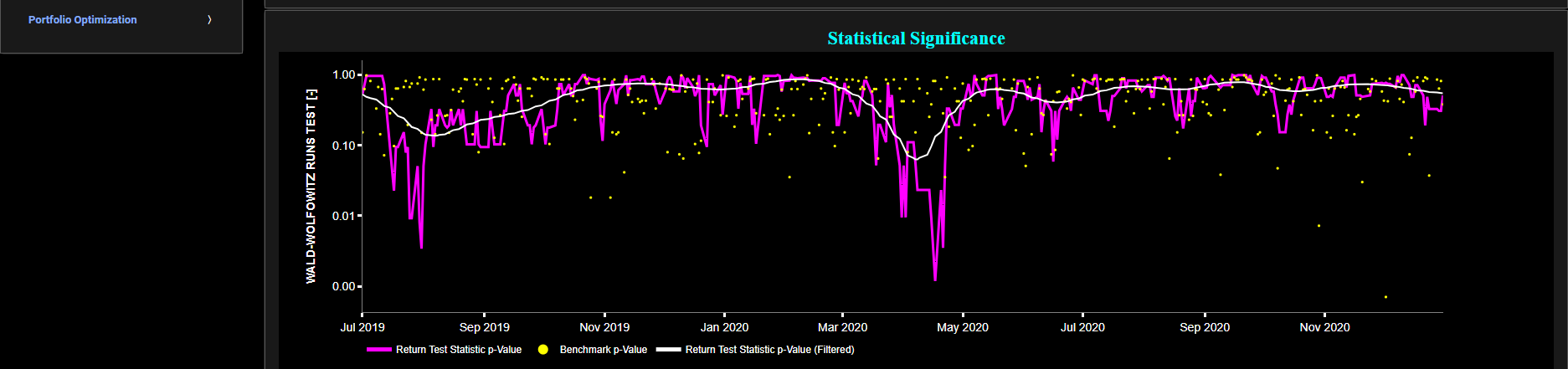

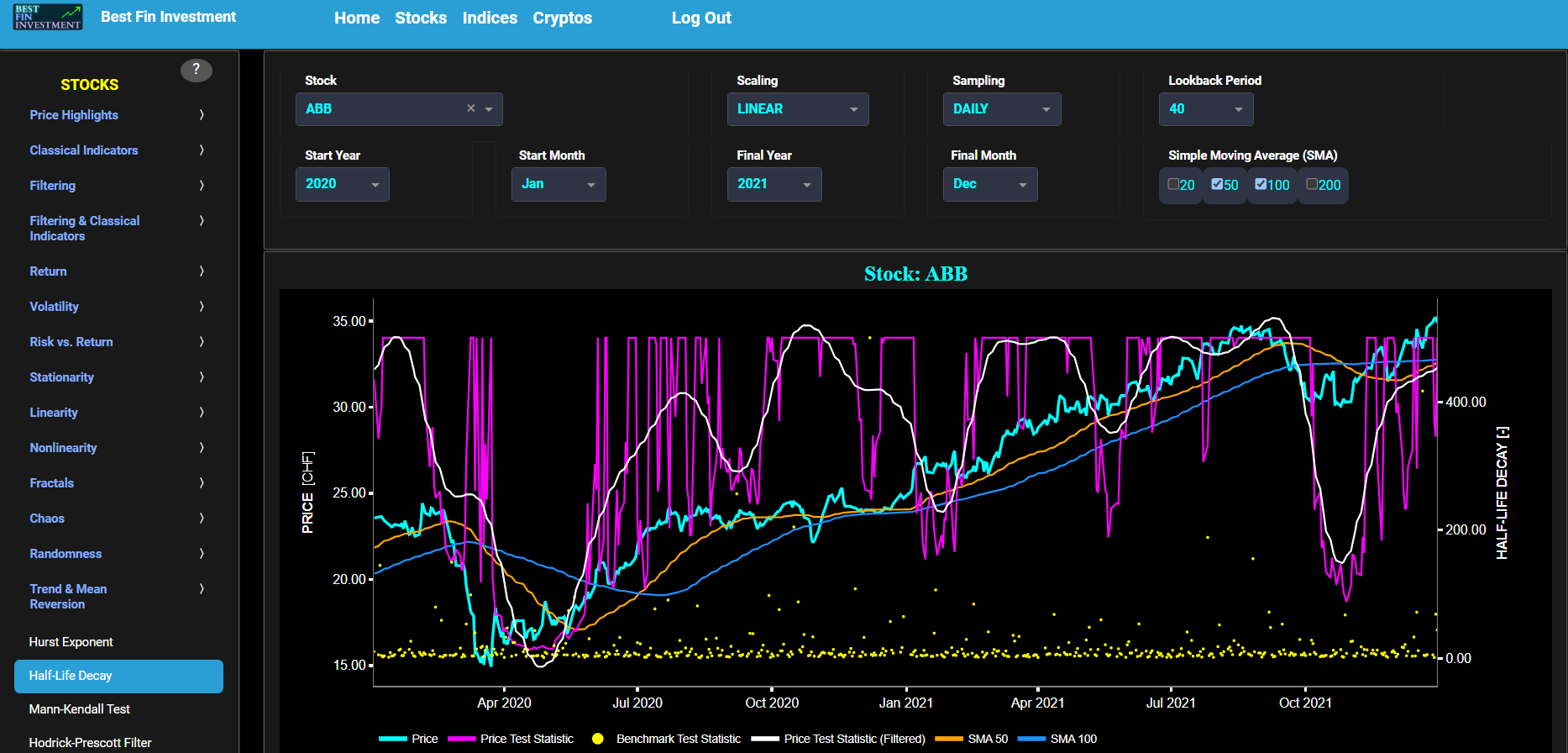

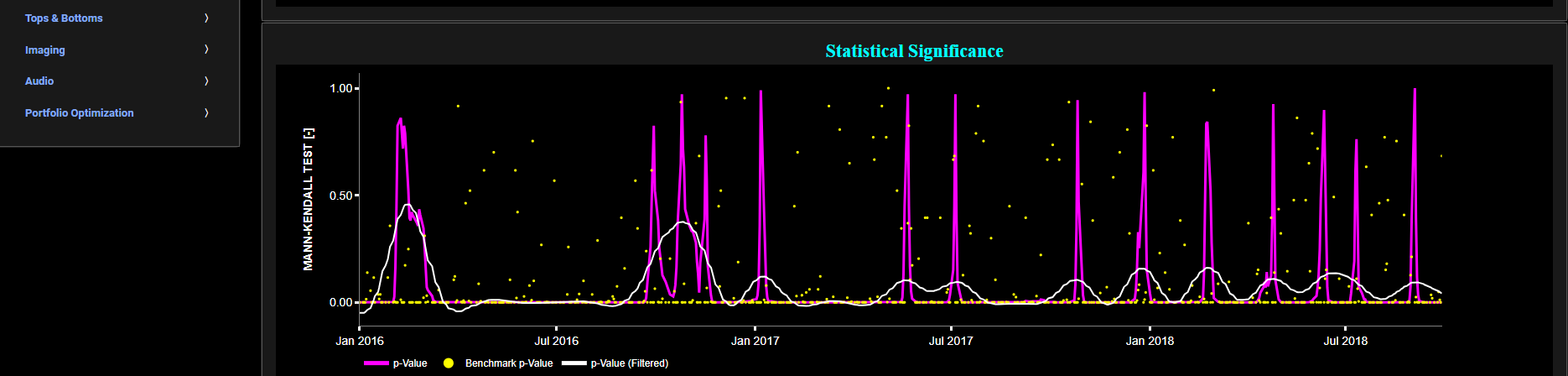

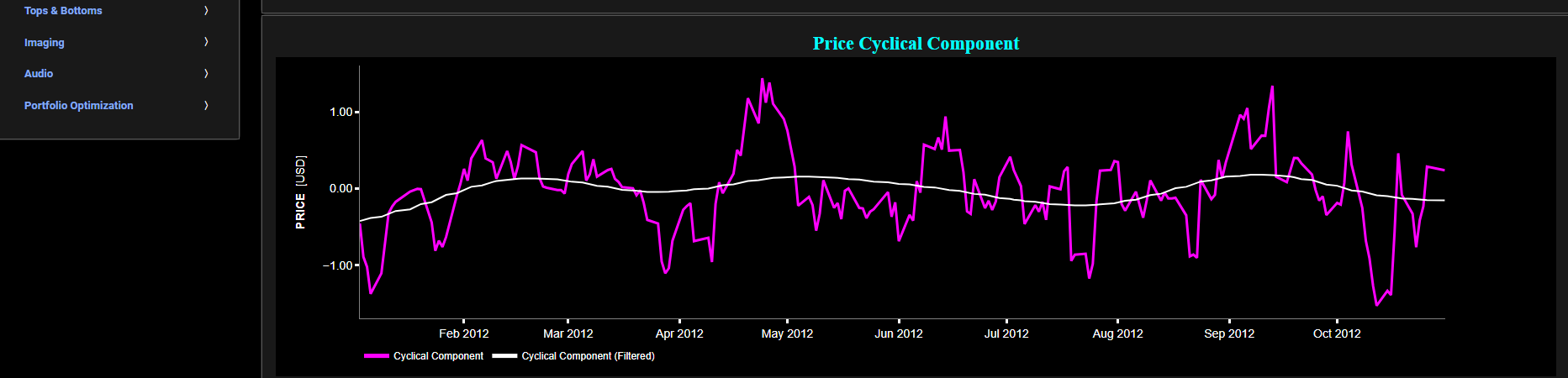

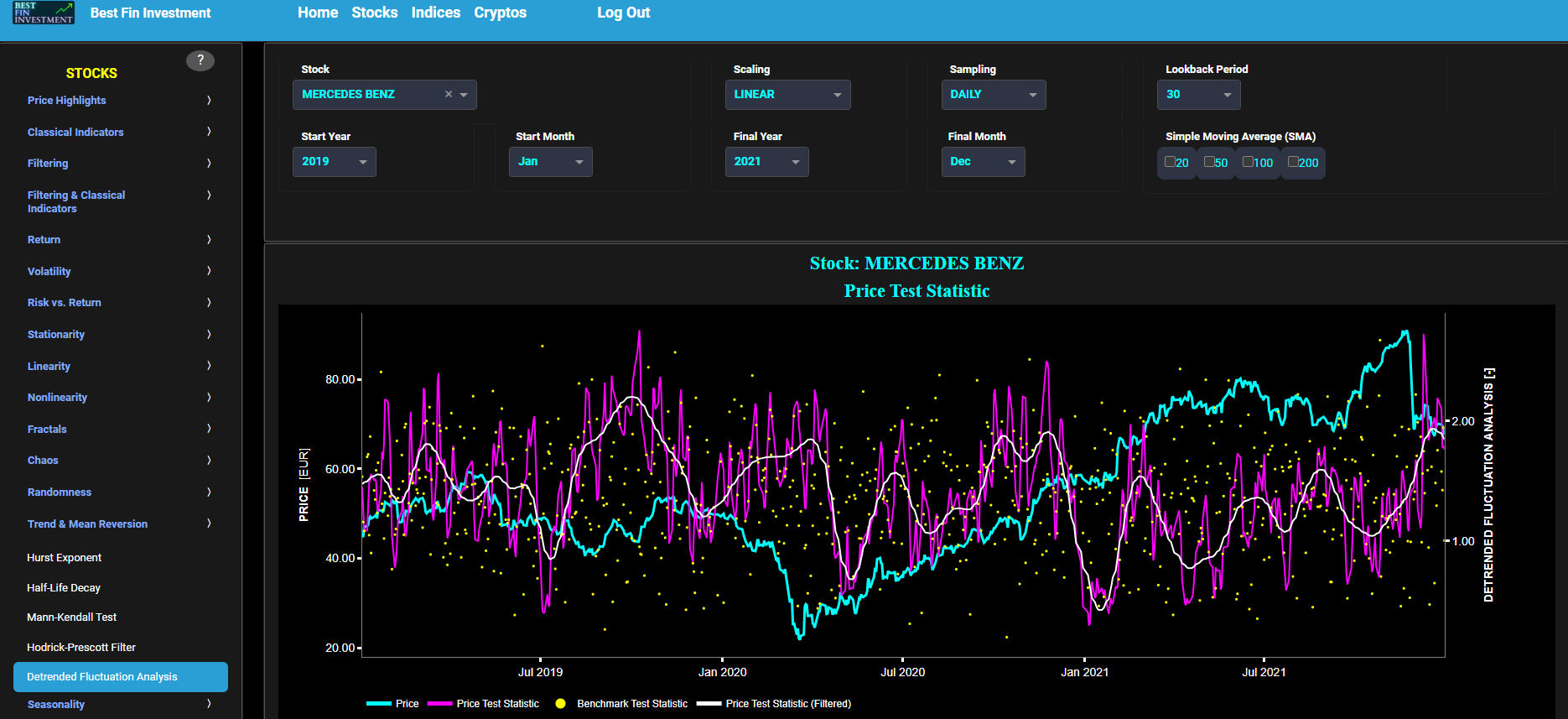

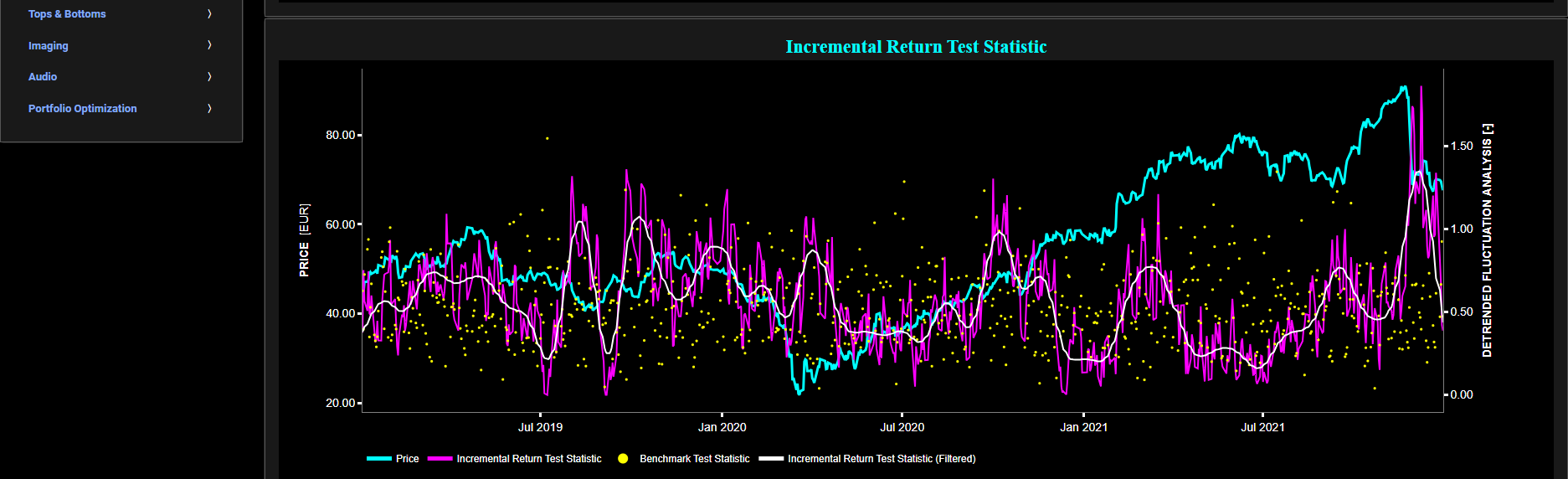

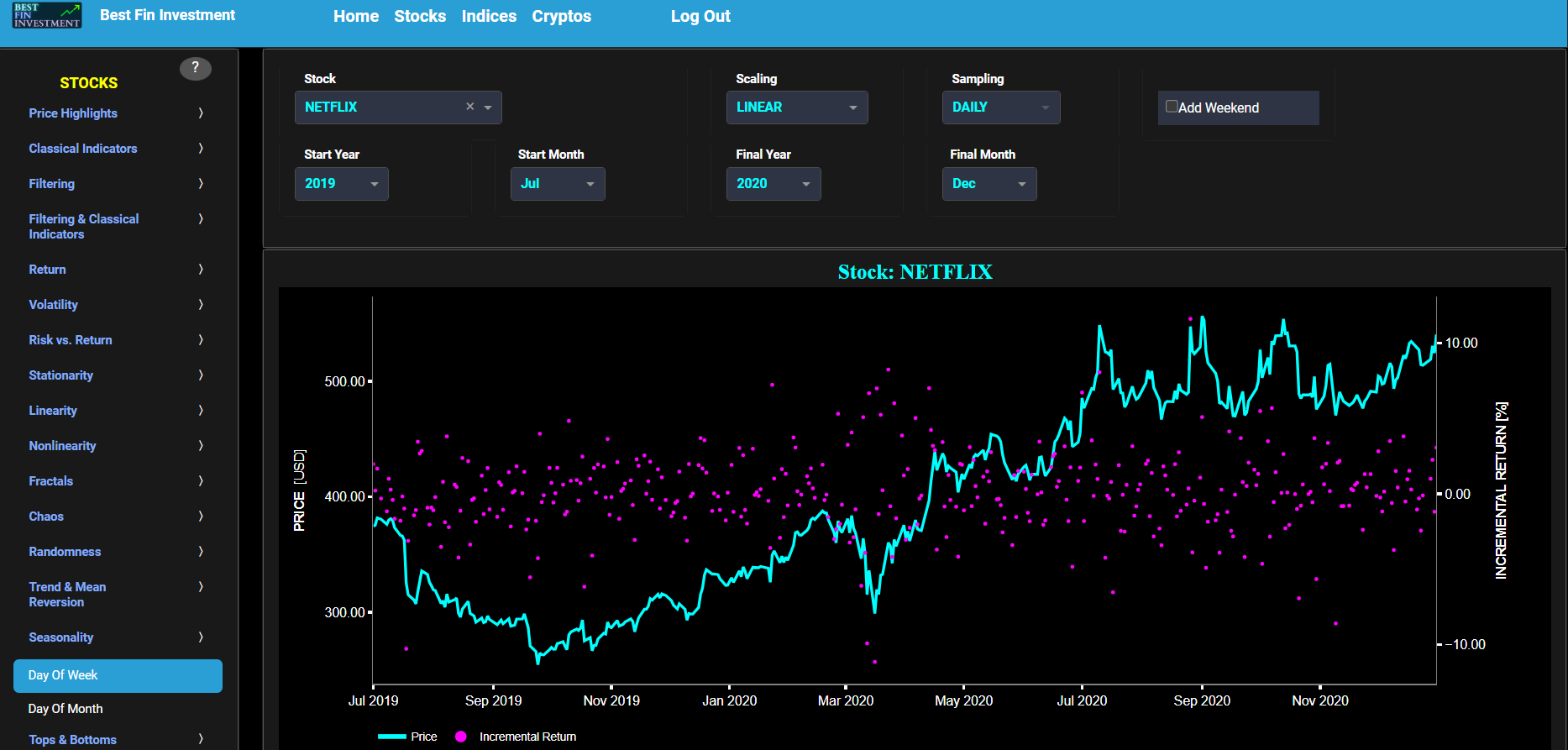

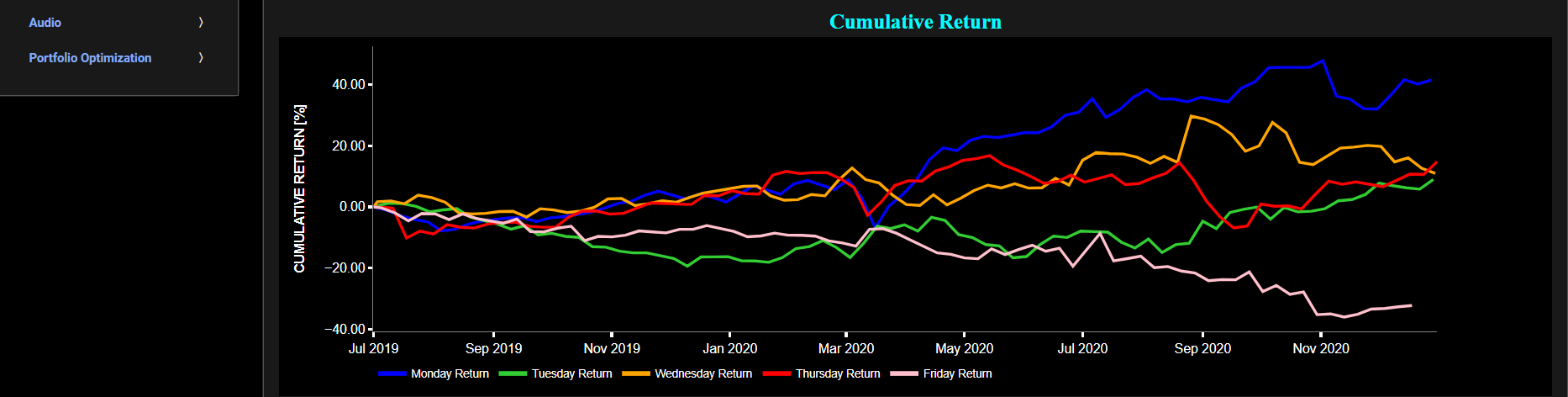

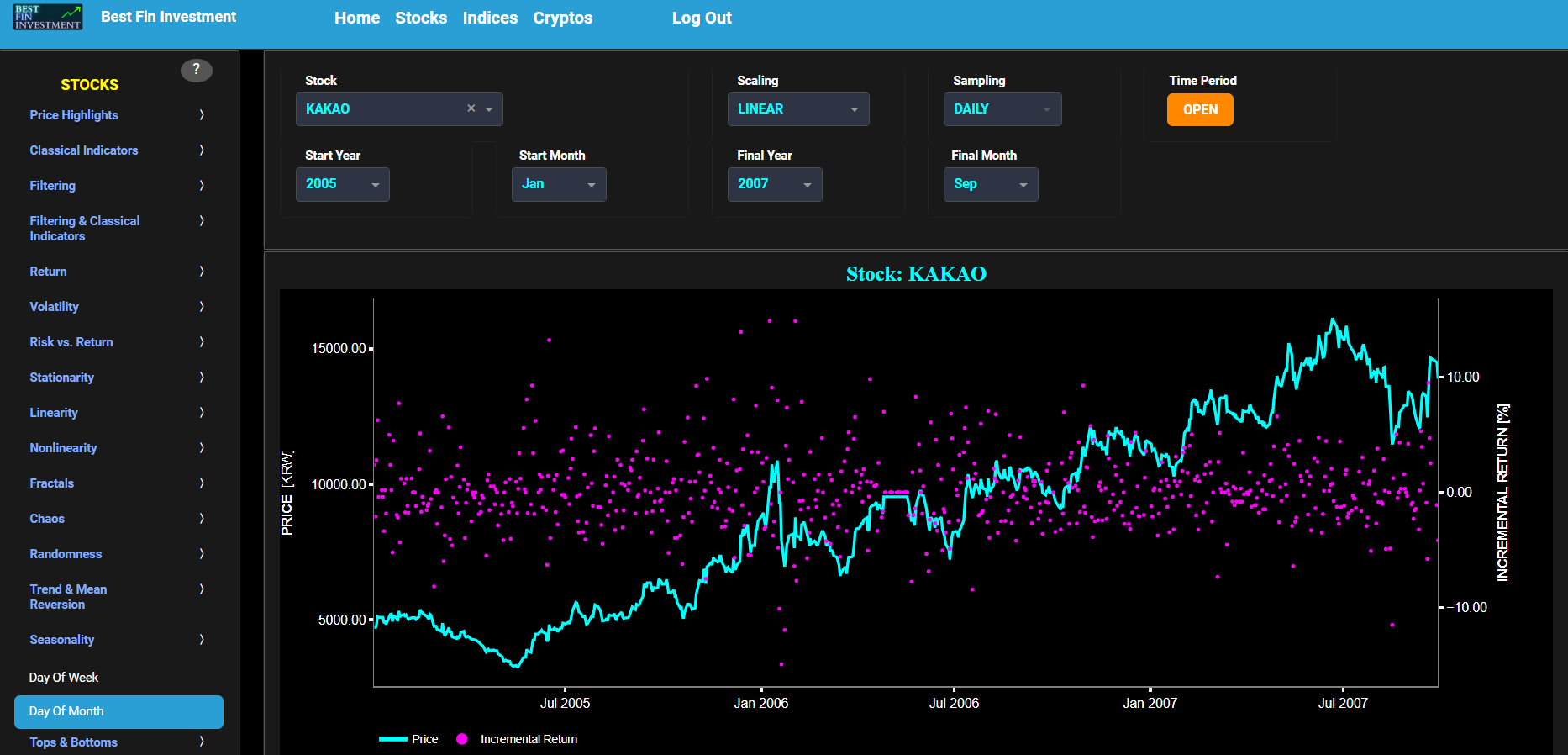

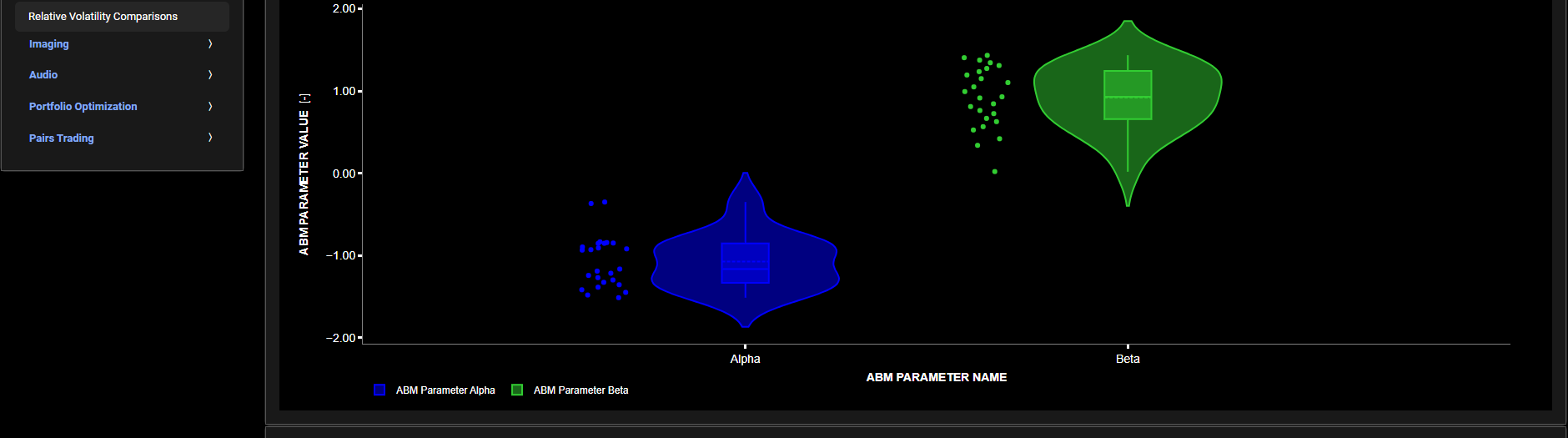

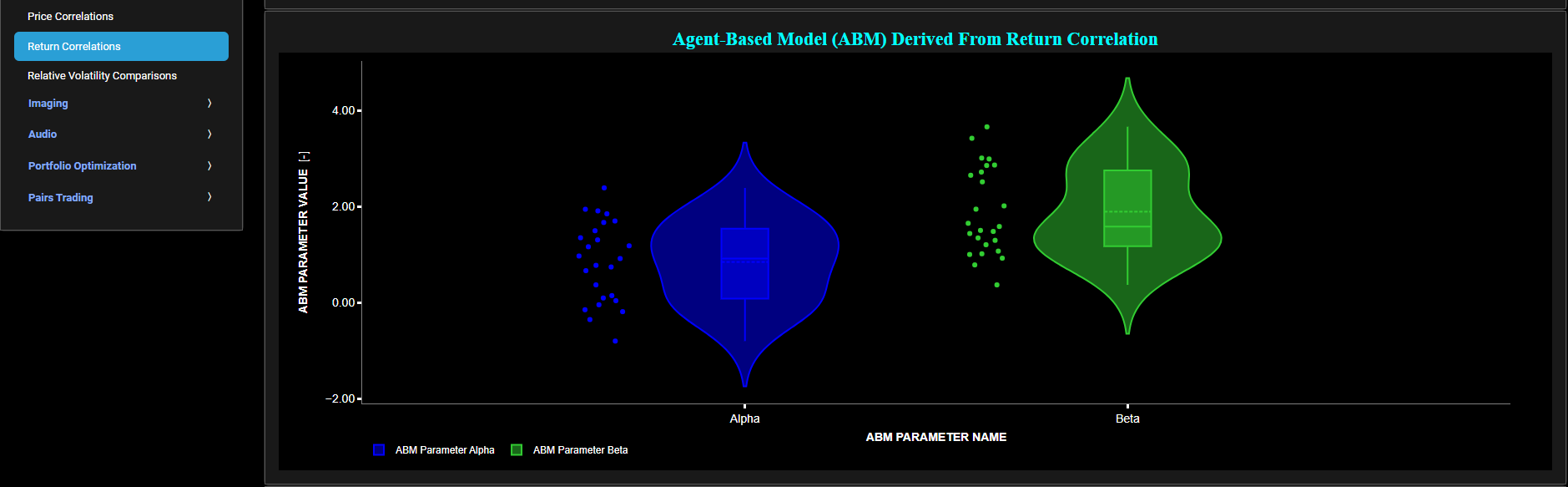

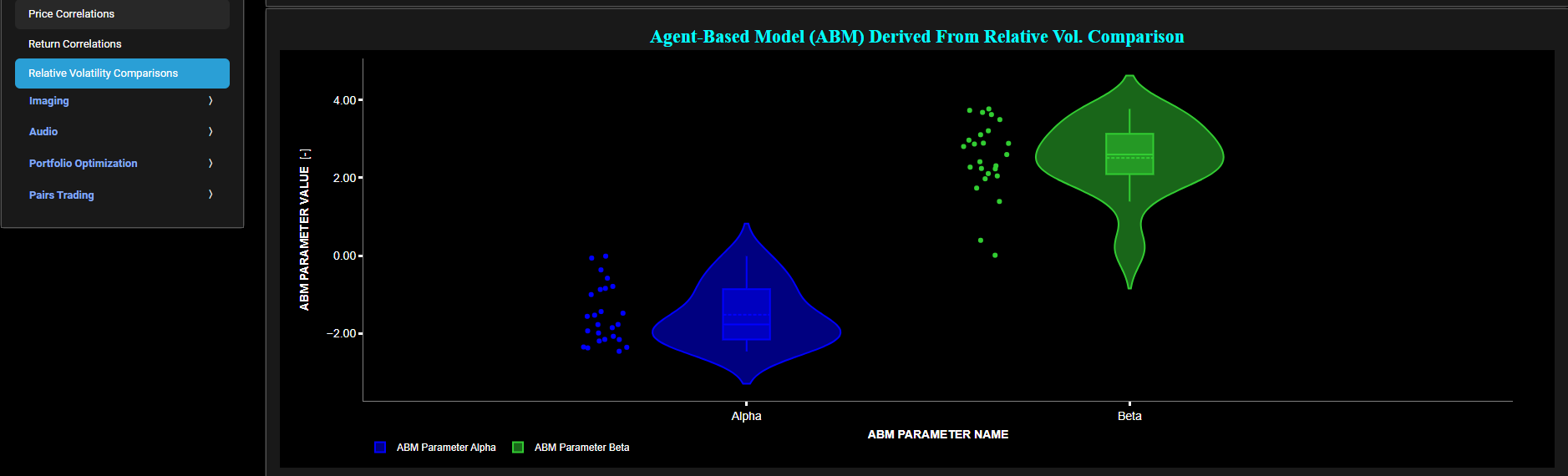

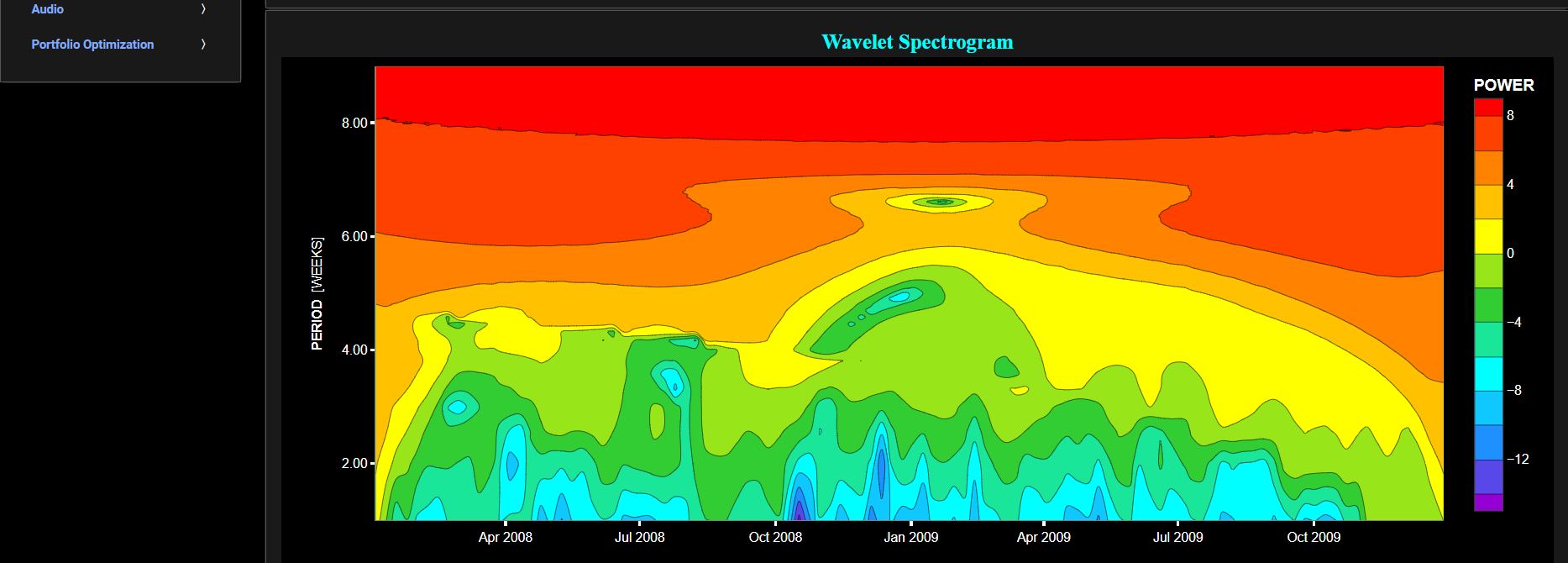

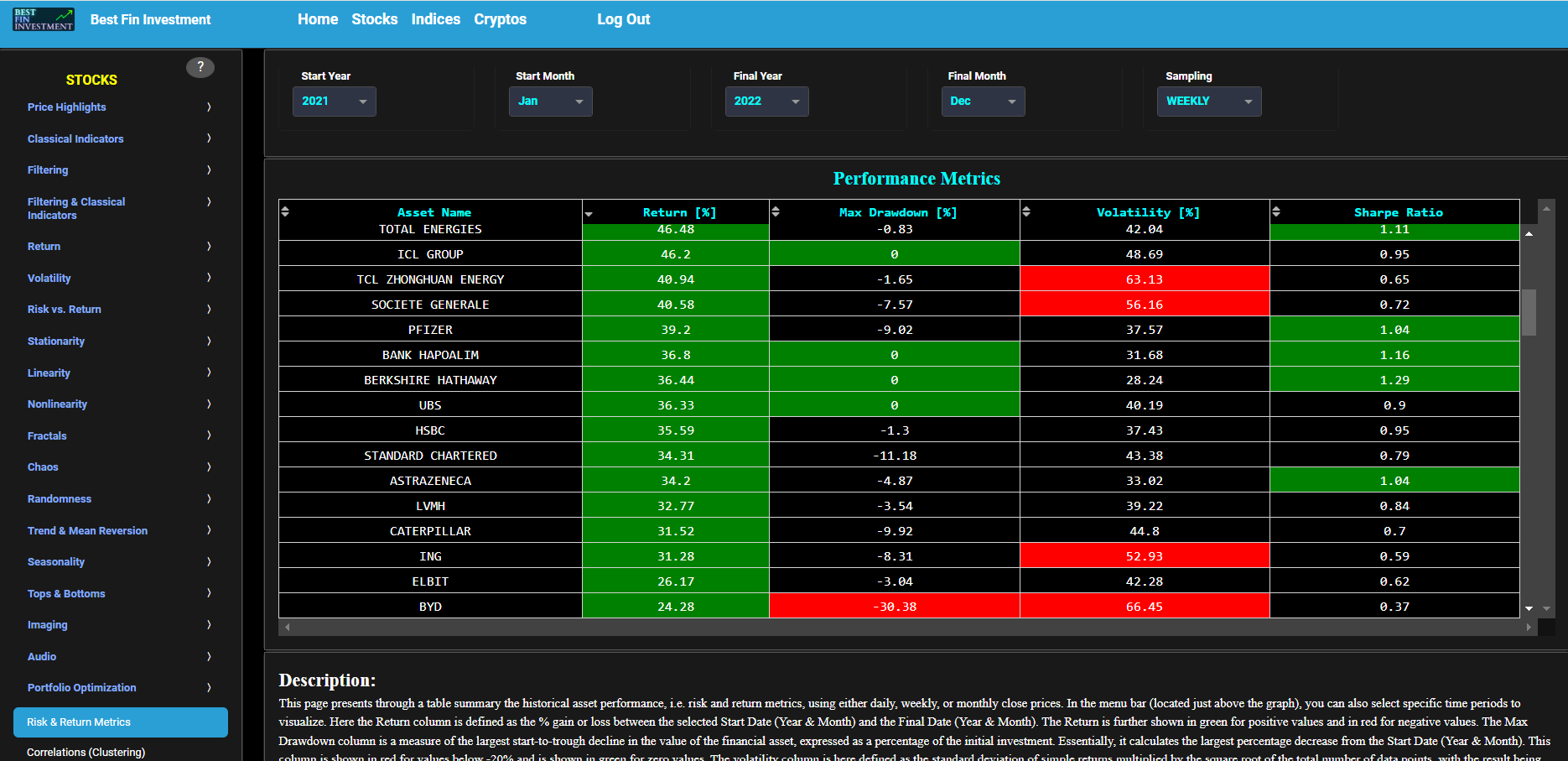

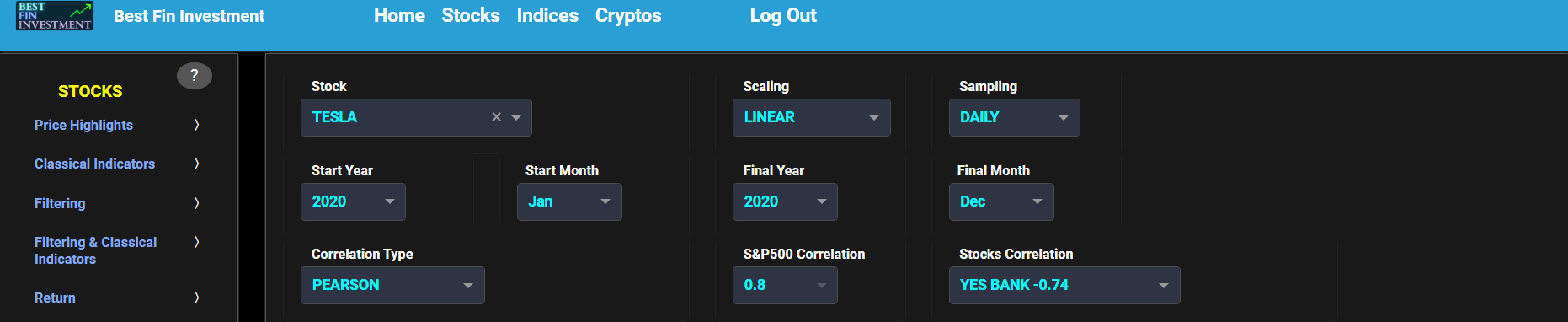

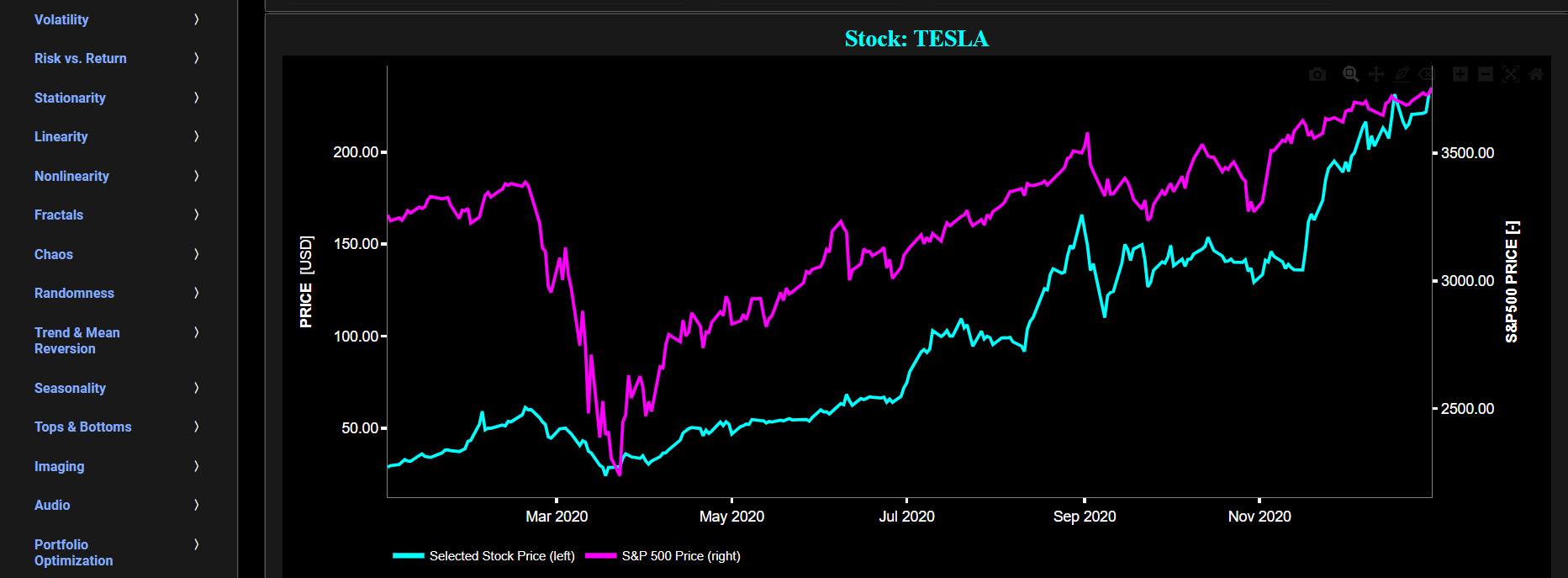

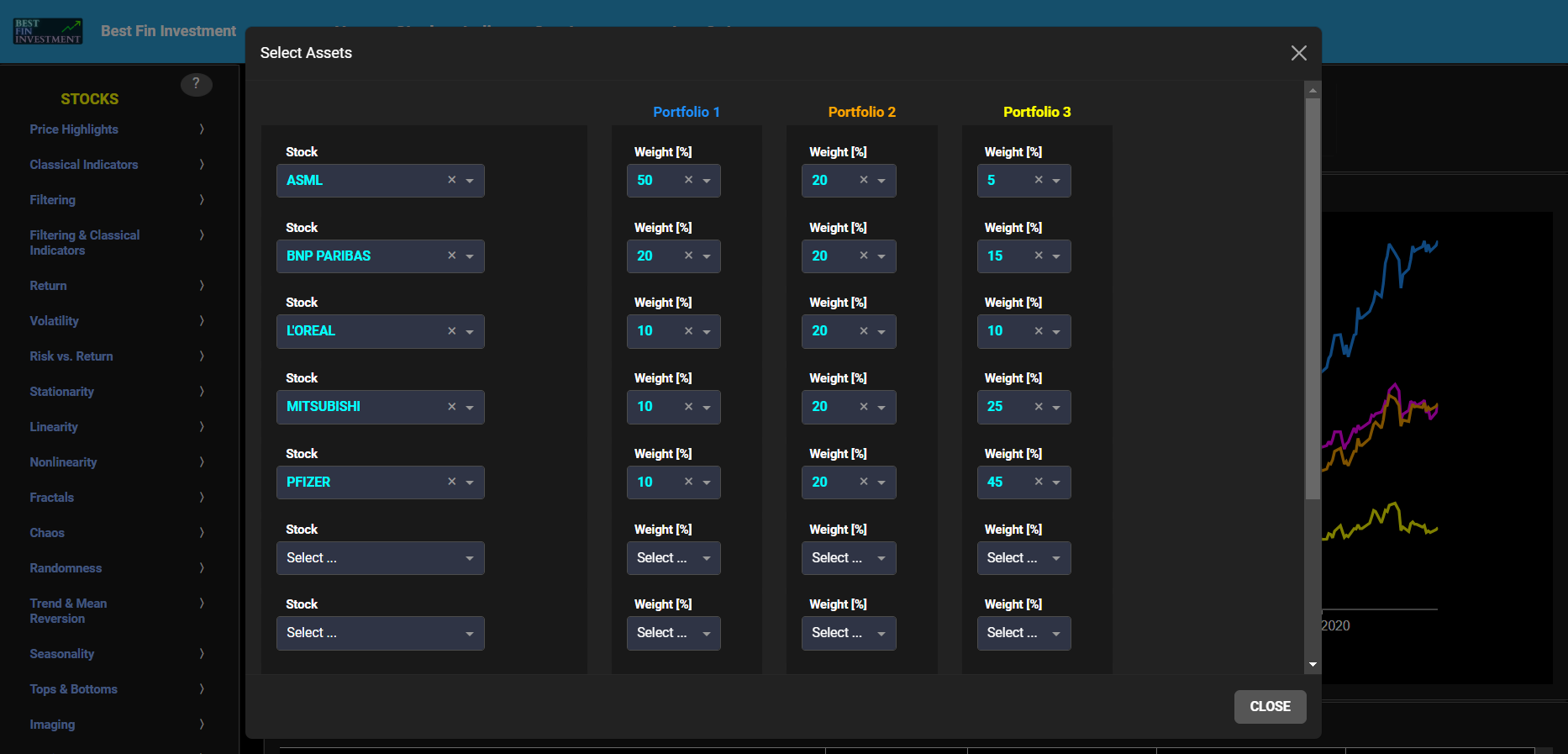

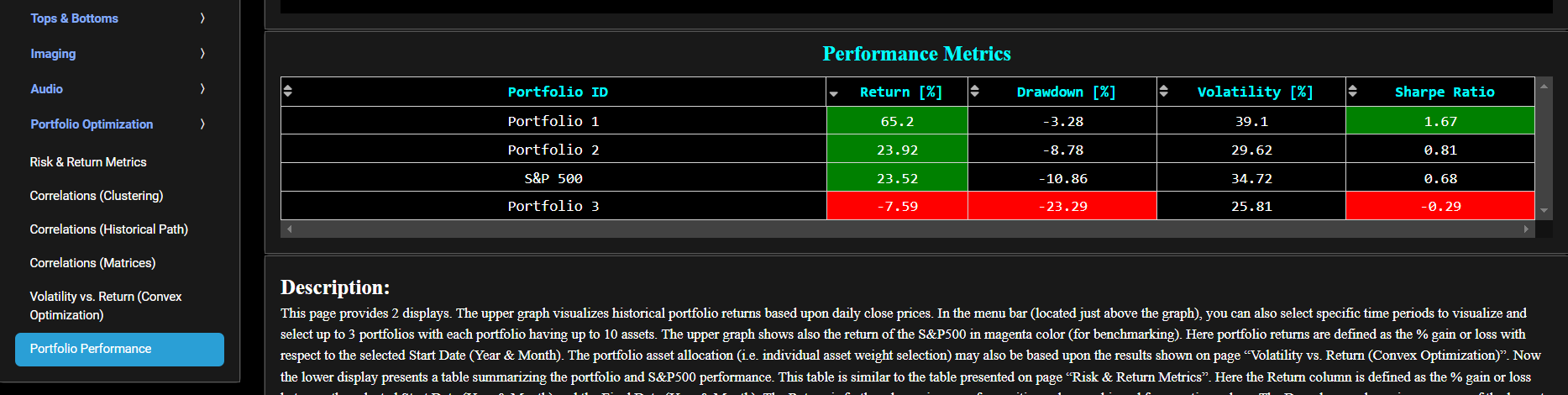

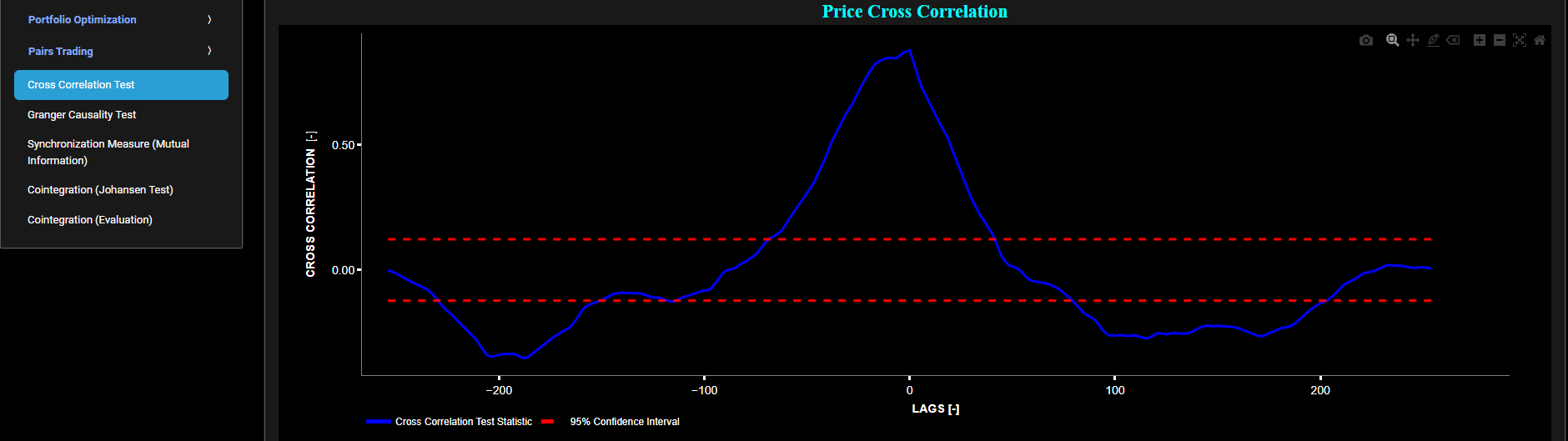

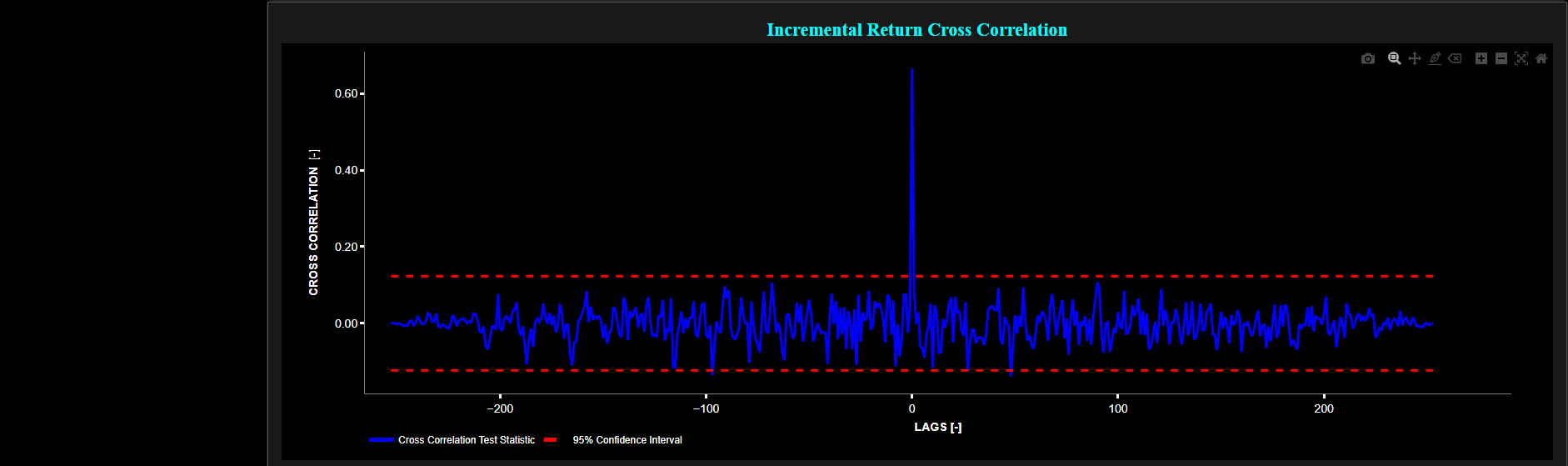

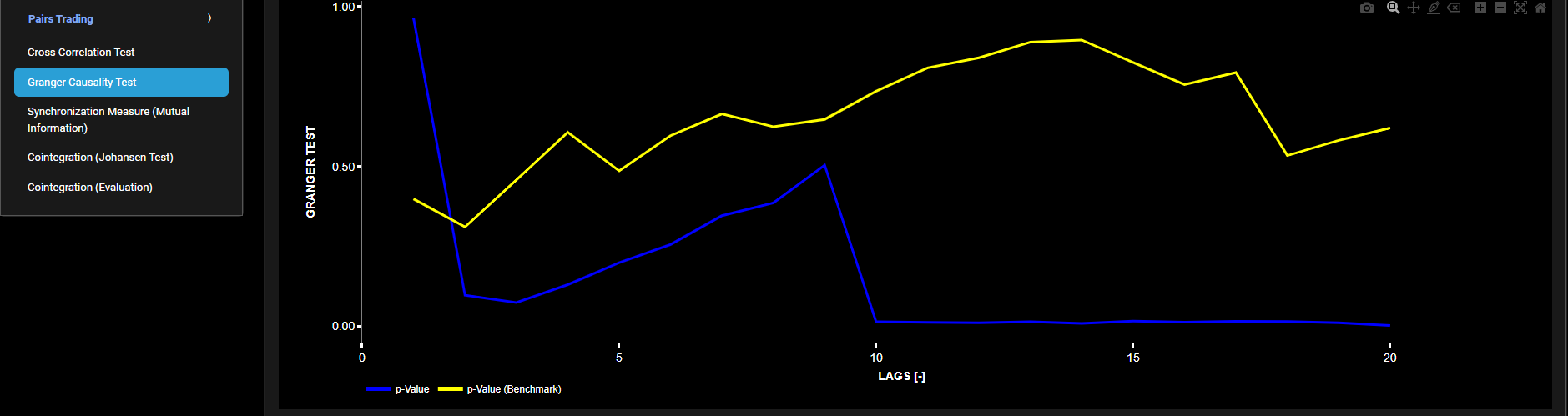

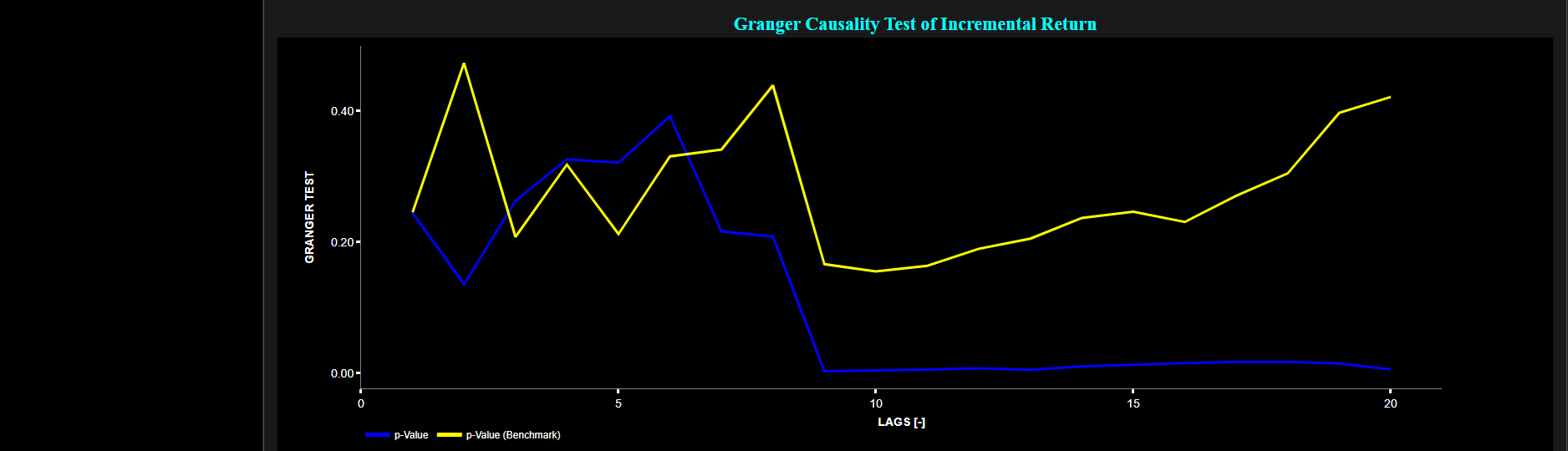

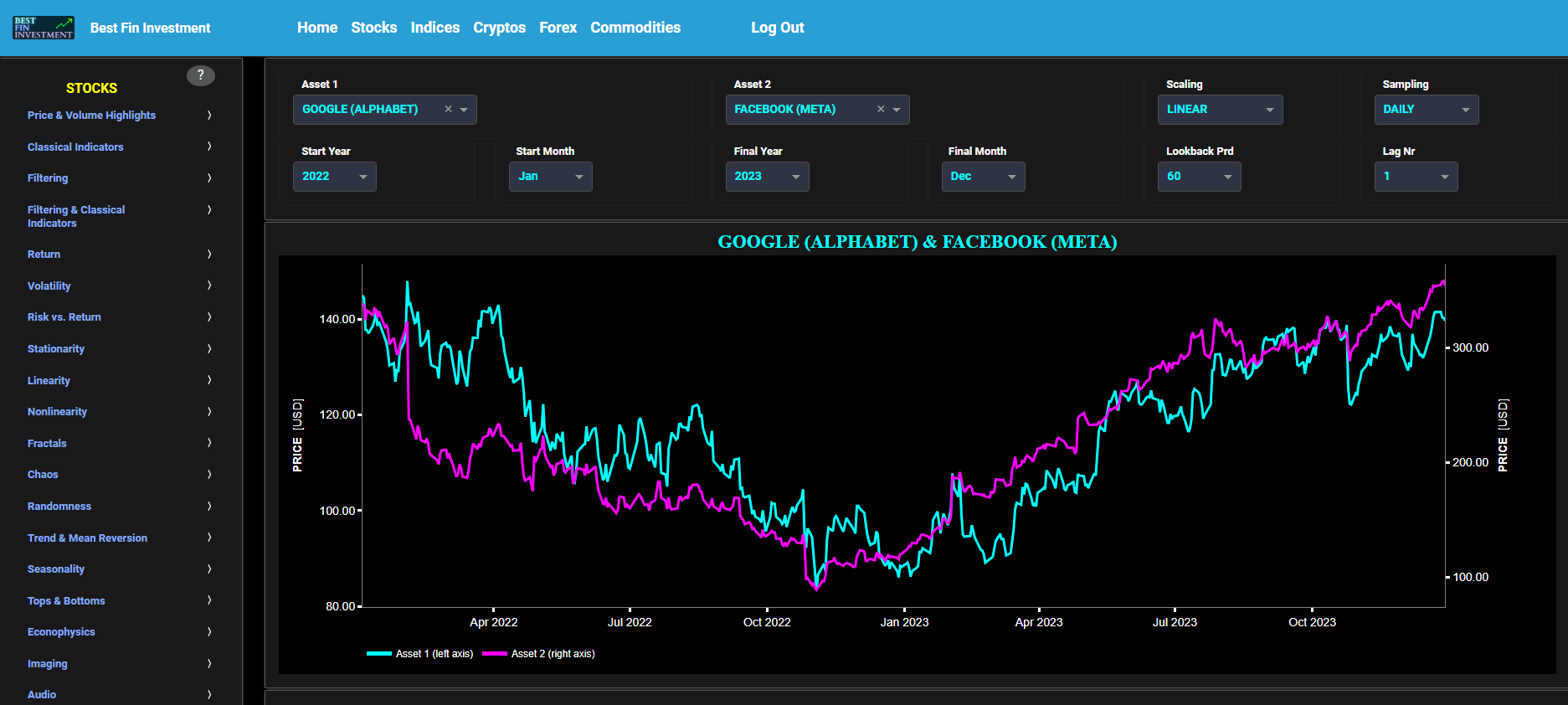

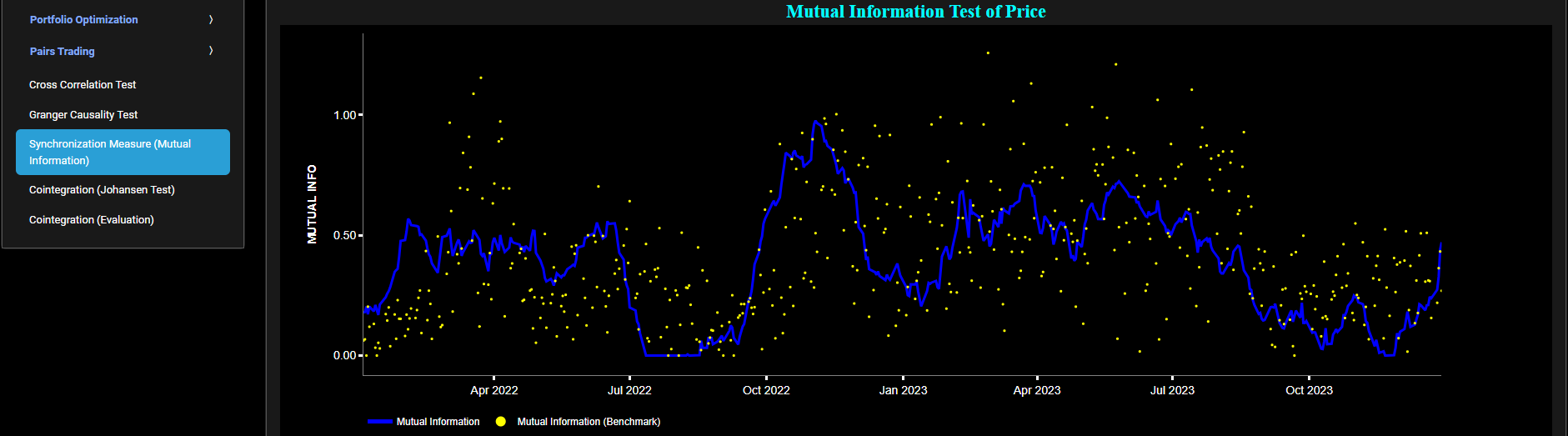

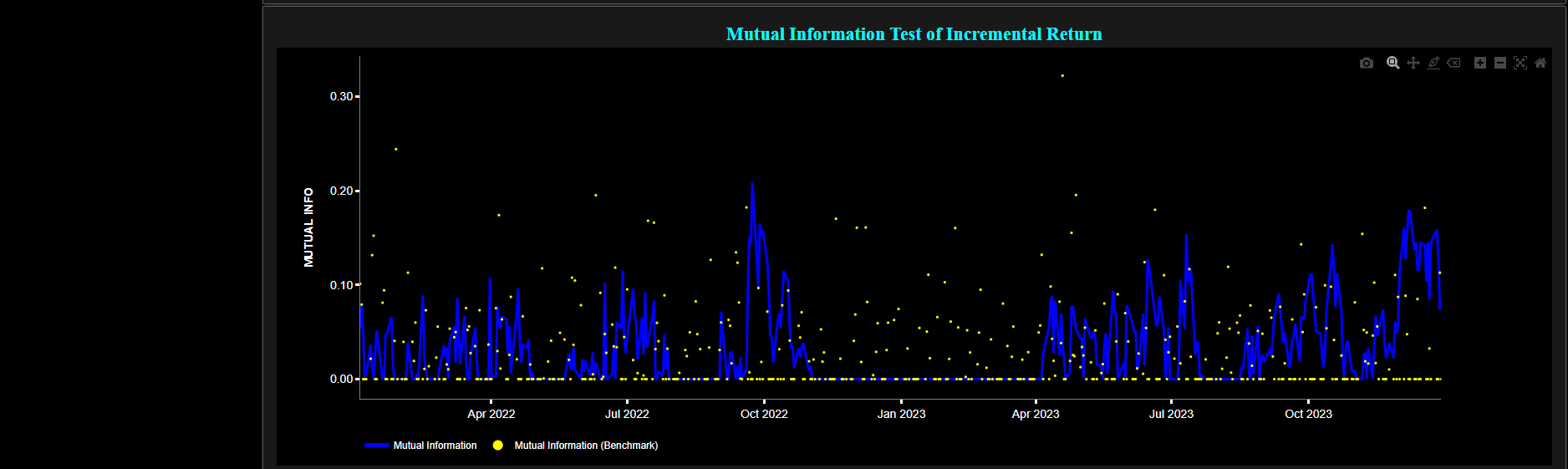

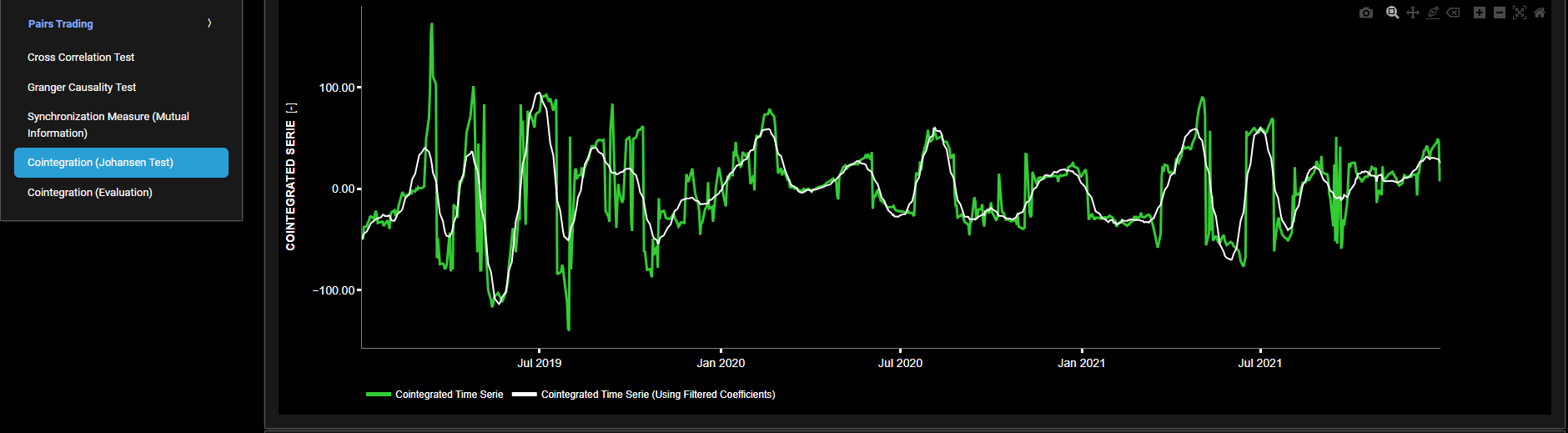

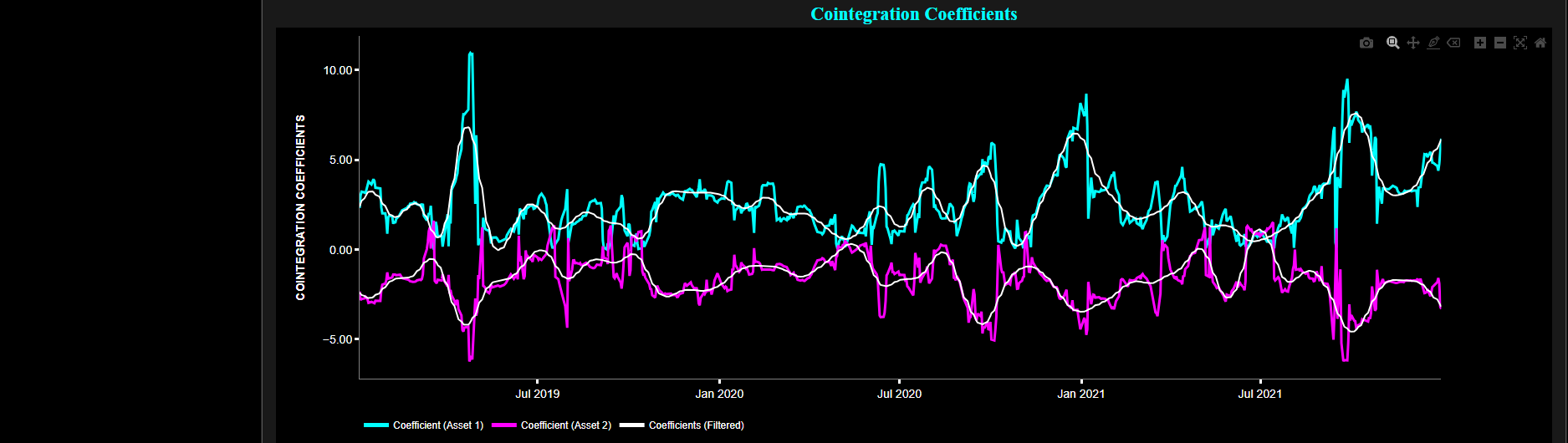

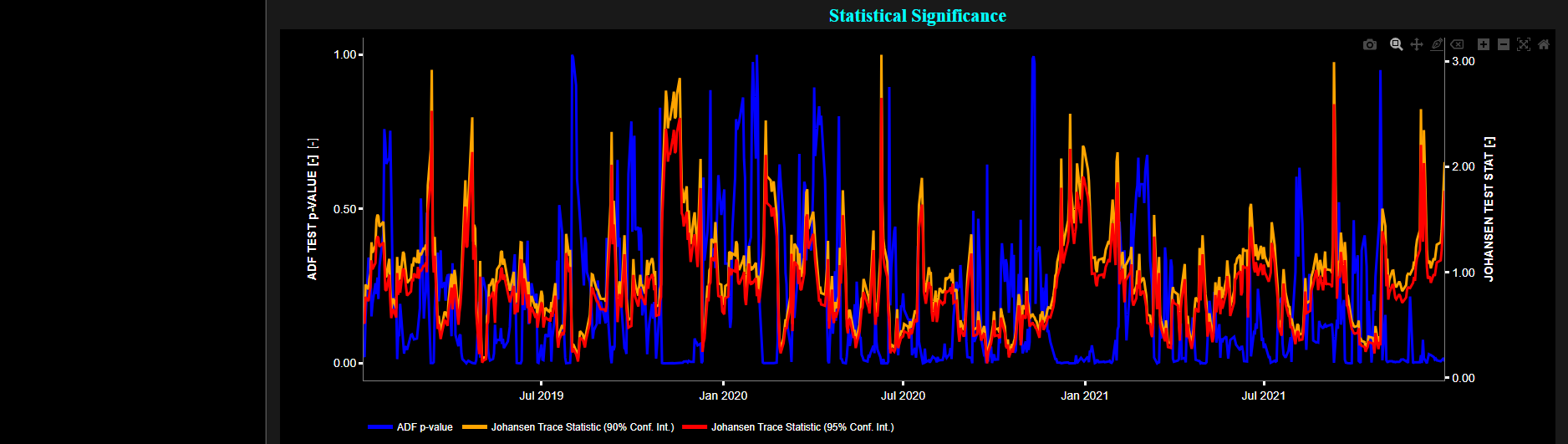

Ljung-Box Test